Capital market applications of neural networks etc

Posted on: 14 November 2015

23tino

Transcript

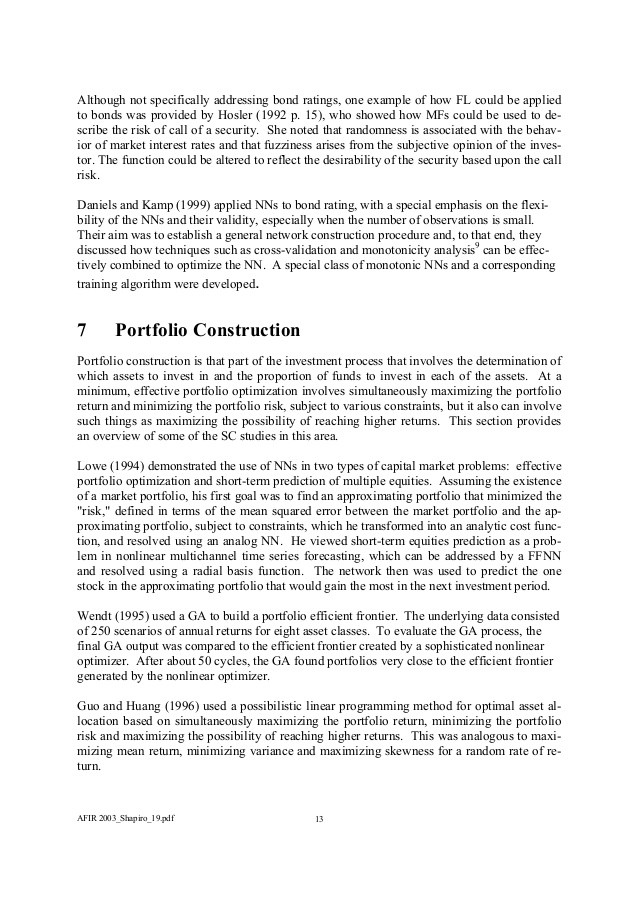

- 1. Capital Market Applications of Neural Networks, Fuzzy Logic and Genetic Algorithms

Arnold F. Shapiro, Penn State University, Smeal College of Business, University Park, PA 16802, USA. Phone: 01-814-865-3961, Fax: 01-814-865-6284, E-mail: afs1@psu.edu

Abstract: The capital markets have numerous areas with potential applications for neural networks, fuzzy logic and genetic algorithms. Given this potential and the impetus on these technologies during the last decade, a number of studies have focused on capital market applications. This paper presents an overview of these studies. The specific purposes of the paper are twofold: first, to review the capital market applications of these technologies so as to document the unique characteristics of capital markets as an application area; and second, to document the extent to which these technologies, and hybrids thereof, have been employed.

Keywords: capital markets, applications, neural networks, fuzzy logic, genetic algorithms

Acknowledgments: This work was supported in part by the Robert G. Schwartz Faculty Fellowship. The assistance of Asheesh Choudhary, Krishna D. Faldu, Jung Eun Kim, and Laura E. Campbell is gratefully acknowledged.

AFIR 2003_Shapiro_19.pdf

1 Introduction

Neural networks (NNs) are used for learning and curve fitting, fuzzy logic (FL) is used to deal with imprecision and uncertainty, and genetic algorithms (GAs) are used for search and optimization. These technologies often are linked together because they are the most commonly used components of what Zadeh (1992) called soft computing (SC), which he envisioned as being “... modes of computing in which precision is traded for tractability, robustness and ease of implementation.”

The capital markets have numerous areas with potential applications for these SC technologies.
Given this potential and the impetus on these technologies during the last decade, a number of studies have focused on capital market applications and in many cases have demonstrated better performance than competing approaches. This paper presents an overview of these studies. The specific purposes of the paper are twofold: first, to review the capital market applications of these SC technologies so as to document the unique characteristics of capital markets as an application area; and second, to document the extent to which these technologies, and hybrids thereof, have been employed.

The paper has a separate section devoted to each of the capital market areas of market forecasting, trading rules, option pricing, bond ratings, and portfolio construction. Each section begins with a brief introduction and then SC studies in that application area are reviewed. The studies were drawn from a broad cross-section of the literature and are intended to show where each technology has made inroads into the capital market areas. However, since this paper is still in the development stage, only a representative sample of the literature has been included, so the study should be considered a work in progress. The paper ends with a prognosis for the SC technologies.

2 Neural Networks, Fuzzy Logic and Genetic Algorithms

It is assumed that readers are generally familiar with the basics of NNs, FL and GAs,¹ but they may not have conceptualized the overall processes associated with these technologies. This section presents an overview of these processes.

2.1 Neural Networks (NNs)

NNs, first explored by Rosenblatt (1959) and Widrow and Hoff (1960), are computational structures with learning and generalization capabilities. Conceptually, they employ a distributive technique to store knowledge acquired by learning with known samples and are used for pattern classification, prediction and analysis, and control and optimization.
Operationally, they are software programs that emulate the biological structure of the human brain and its associated neural complex (Bishop, 1995).

The NN can be either supervised or unsupervised. The distinguishing feature of a supervised NN is that its input and output are known and its objective is to discover a relationship between the two. The distinguishing feature of an unsupervised NN is that only the input is known and the goal is to uncover patterns in the features of the input data. The remainder of this subsection is devoted to an overview of supervised and unsupervised NNs, as processes.

2.1.1 Supervised NNs

A sketch of the operation of a supervised NN is shown in Figure 1.

Figure 1: The Operation of a Supervised NN

Since supervised learning is involved, the system will attempt to match the input with a known target, such as stock prices or bond ratings. The process begins by assigning random weights to the connection between each set of neurons in the network. These weights represent the intensity of the connection between any two neurons. Given the weights, the intermediate values (in the hidden layer) and then the output of the system are computed. If the output is optimal, in the sense that it is sufficiently close to the target, the process is halted; if not, the weights are adjusted and the process is continued until an optimal solution is obtained or an alternate stopping rule is reached.

If the flow of information through the network is from the input to the output, it is known as a feedforward network. The NN is said to involve backpropagation if inadequacies in the output are fed back through the network so that the algorithm can be improved. We will refer to this network as a feedforward NN with backpropagation (FFNN with BP).

2.1.2 Unsupervised NNs

This section discusses one of the most common unsupervised NNs, the Kohonen network (Kohonen 1988), which often is referred to as a self-organizing feature map (SOFM).
The purpose of the network is to emulate our understanding of how the brain uses spatial mappings to model complex data structures. Specifically, the learning algorithm develops a mapping from the input patterns to the output units that embodies the features of the input patterns.

In contrast to the supervised network, where the neurons are arranged in layers, in the Kohonen network they are arranged in a planar configuration and the inputs are connected to each unit in the network. The configuration is depicted in Figure 2.

Figure 2: Two Dimensional Kohonen Network

As indicated, the Kohonen SOFM may be represented as a two-layered network consisting of a set of input units in the input layer and a set of output units arranged in a grid called a Kohonen layer. The input and output layers are totally interconnected and there is a weight associated with each link, which is a measure of the intensity of the link.

The sketch of the operation of a SOFM is shown in Figure 3.

Figure 3: Operation of a Kohonen Network

The first step in the process is to initialize the parameters and organize the data. This entails setting the iteration index, t, to zero, the interconnecting weights to small positive random values, and the learning rate to a value smaller than but close to 1. Each unit has a neighborhood of units associated with it and empirical evidence suggests that the best approach is to have the neighborhoods fairly broad initially and then to have them decrease over time. Similarly, the learning rate is a decreasing function of time.

Each iteration begins by randomizing the training sample, which is composed of P patterns, each of which is represented by a numerical vector. For example, the patterns may be composed of stocks and/or market indexes and the input variables may be daily price and volume data.
Until the number of patterns used (p) exceeds the number available (p > P), the patterns are presented to the units on the grid, each of which is assigned the Euclidean distance between its connecting weight to the input unit and the value of the input. This distance is given by $\left[\sum_j (x_j - w_{ij})^2\right]^{0.5}$, where $w_{ij}$ is the connecting weight between the j-th input unit and the i-th unit on the grid and $x_j$ is the input from unit j. The unit that is the best match to the pattern, the winning unit, is used to adjust the weights of the units in its neighborhood. For this reason the SOFM is often referred to as a competitive NN. The process continues until the number of iterations exceeds some predetermined value (T).

In the foregoing training process, the winning units in the Kohonen layer develop clusters of neighbors, which represent the class types found in the training patterns. As a result, patterns associated with each other in the input space will be mapped to output units that also are associated with each other. Since the class of each cluster is known, the network can be used to classify the inputs.

2.2 Fuzzy Logic (FL)

Fuzzy logic² (FL), which was formulated by Zadeh (1965), was developed as a response to the fact that most of the parameters we encounter in the real world are not precisely defined. As such, it gives a framework for approximate reasoning and allows qualitative knowledge about a problem to be translated into an executable set of rules. This reasoning and rule-based approach, which is known as a fuzzy inference system, is then used to respond to new inputs.

2.2.1 A Fuzzy Inference System (FIS)

The fuzzy inference system (FIS) is a popular methodology for implementing FL.³ FISs are also known as fuzzy rule based systems, fuzzy expert systems, fuzzy models, fuzzy associative memories (FAM), or fuzzy logic controllers when used as controllers (Jang et al. 1997, p. 73). The essence of the system can be represented as shown in Figure 4.
Figure 4: A Fuzzy Inference System (FIS)

As indicated in the figure, the FIS can be envisioned as involving a knowledge base and a processing stage. The knowledge base provides the membership functions (MFs) and fuzzy rules needed for the process. In the processing stage, numerical crisp variables are the input of the system.⁴ These variables are passed through a fuzzification stage where they are transformed to linguistic variables, which become the fuzzy input for the inference engine. This fuzzy input is transformed by the rules of the inference engine to fuzzy output. The linguistic results are then changed by a defuzzification stage into numerical values that become the output of the system.

2.3 Genetic Algorithms (GAs)

Genetic algorithms⁵ (GAs) were proposed by Holland (1975) as a way to perform a randomized global search in a solution space. In this space, a population of candidate solutions, each with an associated fitness value, is evaluated by a fitness function on the basis of their performance. Then, using genetic operations, the best candidates are used to evolve a new population that not only has more of the good solutions but better solutions as well.

This process, which can be described as an automated, intelligent approach to trial and error, based on principles of natural selection, is depicted in Figure 5.

Figure 5: The GA Process

As indicated, the first step in the process is initialization, which involves choosing a population size (M), population regeneration factors, and a termination criterion. The next step is to randomly generate an initial population of solutions, P(g=0), where g is the generation. If this population satisfies the termination criterion, the process stops. Otherwise, the fitness of each individual in the population is evaluated and the best solutions are bred with each other to form a new population, P(g+1); the poorer solutions are discarded.
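
The GA loop just described can be sketched in a few lines of Python. This is only an illustrative sketch, not the procedure of any study reviewed here: the bit-string encoding, the function name `run_ga`, the "keep the fitter half" selection rule, one-point crossover, bit-flip mutation, and every default parameter value are assumptions chosen for brevity.

```python
import random


def run_ga(fitness, n_genes, pop_size=20, max_generations=100,
           mutation_rate=0.02, target=None, seed=0):
    """Maximize `fitness` over bit strings (hypothetical helper, for illustration)."""
    rng = random.Random(seed)
    # Randomly generate the initial population P(g=0) of M = pop_size solutions.
    population = [[rng.randint(0, 1) for _ in range(n_genes)]
                  for _ in range(pop_size)]
    for generation in range(max_generations):
        # Evaluate the fitness of every individual and rank the population.
        ranked = sorted(population, key=fitness, reverse=True)
        # Assumed termination criterion: a target fitness has been reached.
        if target is not None and fitness(ranked[0]) >= target:
            return ranked[0], generation
        # The poorer half of the population is discarded.
        parents = ranked[:pop_size // 2]
        # Reproduction: copy the fittest individual into P(g+1).
        new_population = [ranked[0][:]]
        # Refill until the new generation again has M individuals.
        while len(new_population) < pop_size:
            mother, father = rng.sample(parents, 2)
            # One-point crossover recombines the parents' genes.
            cut = rng.randint(1, n_genes - 1)
            child = mother[:cut] + father[cut:]
            # Mutation: each gene is flipped with a small probability.
            child = [1 - gene if rng.random() < mutation_rate else gene
                     for gene in child]
            new_population.append(child)
        population = new_population
    # Fallback termination criterion: the generation budget is exhausted.
    return max(population, key=fitness), max_generations
```

For example, `run_ga(fitness=sum, n_genes=12, target=12)` searches for the all-ones string, with `sum` standing in for a real fitness function such as the terminal wealth of a trading rule.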
If the new population does not satisfy the termination criterion, the process continues.

2.3.1 Population Regeneration Factors

There are three common ways to develop a new generation of solutions: reproduction, crossover, and mutation. Reproduction adds a copy of a fit individual to the next generation. Crossover emulates the process of creating children, and involves the creation of new individuals (children) from the two fit parents by a recombination of their genes (parameters). Under mutation, there is a small probability that some of the gene values in the population will be replaced with randomly generated values. This has the potential effect of introducing good gene values that may not have occurred in the initial population or which were eliminated during the iterations. In Figure 5, the process is repeated until the new generation has the same number of individuals (M) as the previous one.

3 Market Forecasting

Market forecasting involves projecting such things as stock market indexes, like the Standard and Poor's (S&P) 500 stock index, Treasury bill rates, and net asset value of mutual funds. The role of SC in this case is to use quantitative inputs, like technical indices, and qualitative factors, like political effects, to automate stock market forecasting and trend analysis. This section provides an overview of representative SC studies in this area.

Apparently, White (1988) was the first to use NNs for market forecasting. He was curious as to whether NNs could be used to extract nonlinear regularities from economic time series, and thereby decode previously undetected regularities in asset price movements, such as fluctuations of common stock prices. The purpose of his paper was to illustrate how the search for such regularities using a feedforward NN (FFNN) might proceed, using the case of IBM daily common stock returns as an example.
White found that his training results were over-optimistic, being the result of over-fitting or of learning evanescent features. He concluded that "the present neural network is not a money machine."

Chiang et al. (1996) used a FFNN with backpropagation (BP) to forecast the end-of-year net asset value (NAV) of mutual funds, where the latter was predicted using historical economic information. They compared those results with results obtained using traditional econometric techniques and concluded that NNs significantly outperform regression models when limited data is available.

Kuo et al. (1996) recognized that qualitative factors, like political effects, always play a very important role in the stock market environment, and proposed an intelligent stock market forecasting system that incorporates both quantitative and qualitative factors. This was accomplished by integrating a NN and a fuzzy Delphi model (Bojadziev and Bojadziev, 1997, p. 71); the former was used for quantitative analysis and decision integration, while the latter formed the basis of the qualitative model. They applied their system to the Taiwan stock market.

Kim and Chun (1998) used a refined probabilistic NN (PNN), called an arrayed probabilistic network (APN), to predict a stock market index. The essential feature of the APN was that it produces a graded forecast of multiple discrete values rather than a single bipolar output. As a part of their study, they used a mistake chart, which benchmarks against a constant prediction, to compare FFNN with BP models with a PNN, APN, recurrent NN (RNN), and case-based reasoning. They concluded that the APN tended to outperform recurrent and BP networks, but that case-based reasoning tended to outperform all the networks.

Aiken and Bsat (1999) used a FFNN trained by a genetic algorithm (GA) to forecast three-month U.S. Treasury Bill rates. They concluded that an NN can be used to accurately predict these rates.

Edelman et al.
(1999) investigated the use of an identically structured and independently trained committee of NNs to identify arbitrage opportunities in the Australian All-Ordinaries Index. Trading decisions were made based on the unanimous consensus of the committee predictions and the Sharpe Index was used to assess out-of-sample trading performance. Empirical results showed that technical trading based on NN predictions outperformed the buy-and-hold strategy as well as naive prediction. They concluded that the reliability of the network predictions and hence trading performance was dramatically enhanced by the use of trading thresholds and the committee approach.

Thammano (1999) used a neuro-fuzzy model to predict future values of Thailand's largest government-owned bank. The inputs of the model were the closing prices for the current and prior three months, and the profitability ratios ROA, ROE and P/E. The output of the model was the stock prices for the following three months. He concluded that the neuro-fuzzy architecture was able to recognize the general characteristics of the stock market faster and more accurately than the basic backpropagation algorithm. Also, it could predict investment opportunities during the economic crisis when statistical approaches did not yield satisfactory results.

Trafalis (1999) used FFNNs with BP and the weekly changes in 14 indicators to forecast the change in the S&P 500 stock index during the subsequent week. In addition, a methodology for pre-processing the data, which involved differencing and normalizing the data, was devised and successfully implemented. The text walked the reader through the NN process.

Tansel et al.
(1999) compared the ability of linear optimization, NNs, and GAs to model time series data using the criteria of modeling accuracy, convenience and computational time. They found that linear optimization methods gave the best estimates, although the GAs could provide the same values if the boundaries of the parameters and the resolution were selected appropriately, but that the NNs resulted in the worst estimations. However, they noted that non-linearity could be accommodated by both the GAs and the NNs and that the latter required minimal theoretical background.

Garliauskas (1999) investigated stock market time series forecasting using a NN computational algorithm linked with the kernel function approach and the recursive prediction error method. The main idea of NN learning by the kernel function is that the function stimulates changes of the weights in order to achieve convergence of the target and forecast output functions. He concluded that financial time series forecasts by the NNs were superior to classical statistical and other methods.

Chan et al. (2000) investigated financial time series forecasting using a FFNN and daily trade data from the Shanghai Stock Exchange. To improve speed and convergence they used a conjugate gradient learning algorithm and used multiple linear regression (MLR) for the weight initialization. They concluded that the NN can model the time series satisfactorily and that their learning and initialization approaches lead to improved learning and lower computation costs.

Kim and Han (2000) used a NN modified by a GA to predict the stock price index. In this instance, the GA was used to reduce the complexity of the feature space, by optimizing the thresholds for feature discretization, and to optimize the connection weights between layers. Their goal was to use globally searched feature discretization to reduce the dimensionality of the feature space, eliminate irrelevant factors, and mitigate the limitations of gradient descent.
They concluded that the GA approach outperformed the conventional models.

Romahi and Shen (2000) developed an evolving rule based expert system for financial forecasting. Their approach was to merge FL and rule induction so as to develop a system with generalization capability and high comprehensibility. In this way the changing market dynamics are continuously taken into account as time progresses and the rulebase does not become outdated. They concluded that the methodology showed promise.

Abraham et al. (2001) investigated hybridized SC techniques for automated stock market forecasting and trend analysis. They used principal component analysis to preprocess the input data, a NN for one-day-ahead stock forecasting and a neuro-fuzzy system for analyzing the trend of the predicted stock values. To demonstrate the proposed technique, they analyzed 24 months of stock data for the Nasdaq-100 main index as well as six of the companies listed therein. They concluded that the forecasting and trend prediction results using the proposed hybrid system were promising and warranted further research and analysis.

Cao and Tay (2001) used Support Vector Machines (SVMs) to study the S&P 500 daily price index. The generalization error with respect to the free parameters of SVMs was investigated and found to have little impact on the solution. They concluded that it is advantageous to apply SVMs to forecast the financial time series.

Hwarng (2001) investigated NN forecasting of time series with ARMA(p,q) structures. Using simulation and the performance of the Box-Jenkins model as a benchmark, it was concluded that FFNN with BP generally performed well and consistently for time series corresponding to ARMA(p,q) structures.
Using the randomized complete block design of experiment, he concluded that overall, for most of the structures, FFNN with BP performed significantly better when a particular noise level was considered during network training.

As a follow-up to Kuo et al. (1996), Kuo et al. (2001) developed a GA-based FNN (GFNN) to formulate the knowledge base of fuzzy inference rules, which can measure the qualitative effect (such as the political effect) in the stock market. The effect was further integrated with the technical indexes through the NN. Using the clarity of buying-selling points and buying-selling performance based on the Taiwan stock market to assess the proposed intelligent system, they concluded that a NN based on both quantitative (technical indexes) and qualitative factors is superior to one based only on quantitative factors.

4 Trading Rules

If one dollar were invested in 1926 in 1-month U.S. Treasury bills, it would have grown to $14 by December 1996. If that dollar had been invested in the S&P 500, it would have grown to $1,370 during that period. If the dollar had been invested with monthly switching to either Treasury bills or the S&P 500, whichever asset would perform the best during that month, it would have grown to over $2 billion during that period.⁶ Timing clearly is relevant and it is not surprising that trading rules have evolved that purport to optimize buy/sell timing decisions.

Of course, the extent to which timing is feasible is controversial. Sharpe (1975) was skeptical that market timing could be profitable and Droms (1989) concluded that successful timing requires forecasting accuracy beyond the ability of most managers. Nonetheless, researchers continue to explore and enhance trading rules, driven, in large part, by the expanding technology. The goal of SC, as it pertains to trading rules, is to create a security trading decision support system, which, ideally, is fully automated and triggered by both quantitative and
qualitative factors. This section provides an overview of representative SC studies in this area.

Kosaka et al. (1991) demonstrated the effectiveness of applying FL and NNs to buy/sell timing detection and stock portfolio selection. They reported that in a test of their model's ability to follow price trends, it correctly identified 65% of all price turning points.

Wilson (1994) developed a fully automatic stock trading system that took in daily price and volume data on a list of 200 stocks and 10 market indexes and produced a set of risk-reward ranked alternate portfolios. The author implemented a five-step procedure: a chaos-based modeling procedure was used to construct alternate price prediction models based on technical, adaptive, and statistical models; then, a SOFM was used to select the best model for each stock or index on a daily basis; then, a second SOFM was used to make a short-term gain-loss prediction for each model; then, a trade selection module combined these predictions to generate buy-sell-hold recommendations for the entire list of stocks on a daily basis; and finally, a portfolio management utility combined the trading recommendations to produce the risk-reward ranked portfolios. He concluded that the stock trading systems could produce better results than index funds and at the same time reduce risk.

Frick et al. (1996) investigated price-based heuristic trading rules for buying and selling shares. Their methodology involved transforming the time series of share prices using a heuristic charting method that gave buy and sell signals and was based on price change and reversals. Based on a binary representation of those charts, they used GAs to generate trade strategies from the classification of different price formations.
They used two different evaluation methods: one compared the return of a trading strategy with the corresponding riskless interest rate and the average stock market return; the other used its risk-adjusted expected return as a benchmark instead of the average stock market return. Their analysis of over one million intra-day stock prices from the Frankfurt Stock Exchange (FSE) showed the extent to which different price formations could be classified by their system and the nature of the rules, but left for future research an analysis of the performance of the resulting trading strategies.

Kassicieh et al. (1997) examined the performance of GAs when used as a method for formulating market-timing trading rules. Their goal was to develop a monthly strategy for deciding whether to be fully invested in a broad based stock portfolio, the S&P 500, or a riskless investment, Treasury bills. Following the methodology of Bauer (1994), their inputs were differenced time series of 10 economic indicators and the GA used the best three of these series to make the timing (switching) decision. They benchmarked against the dollar accumulation given a perfect timing strategy, and concluded that their runs produced excellent results.

As a follow-up study, Kassicieh et al. (1998) used the same GA with different data transformation methods applied to economic data series. These methods were the singular value decomposition (SVD) and principal component NN with 3, 4, 5 and 10 nodes in the hidden layer. They found that the nonstandardized SVD of economic data yielded the highest terminal wealth for the time period examined.

Allen and Karjalainen (1999) used a GA to learn technical trading rules for the S&P 500 index using daily prices from 1928 to 1995. However, after transaction costs, the rules did not earn consistent excess returns over a simple buy-and-hold strategy in the out-of-sample test periods.
The rules were able to identify periods to be in the index when daily returns were positive and volatility was low and out of the index when the reverse was true, but these latter results could largely be explained by low-order serial correlation in stock index returns.

Fernandez-Rodriguez et al. (1999) investigated the profitability of a simple technical trading rule based on NNs applied to the General Index of the Madrid Stock Market. They found that, in the absence of trading costs, the technical trading rule is always superior to a buy-and-hold strategy for both bear and stable markets but that the reverse holds during a bull market.

Baba et al. (2000) used NNs and GAs to construct an intelligent decision support system (DSS) for analyzing the Tokyo Stock Exchange Prices Indexes (TOPIX). The essential feature of their DSS was that it projected the high and low TOPIX values four weeks into the future and suggested buy and sell decisions based on the average projected value and the then-current value of the TOPIX. To this end, they constructed an (8, 15, 2) FFNN using a hybrid weight-training algorithm that combines a modified BP method with a random optimization method. Initially, the buy-sell decision was on an all-or-nothing basis; subsequently, using the GAs, an algorithm was developed for buying or selling just a portion of the shares. They concluded that NNs and GAs could be powerful tools for dealing with the TOPIX.

5 Option Pricing

This section provides an overview of the use of SC technologies for pricing options. As expected, the Black-Scholes option pricing model was a benchmark for many of the SC solutions. On the one hand, the issue was the extent to which the SC out-of-sample performance could duplicate the Black-Scholes result; on the other hand, the issue was the extent to which the SC model could outperform the Black-Scholes model.
Another line of inquiry was related to methods for estimating the volatility⁷ of options, and involved a comparison of implied volatility, historical volatility, and a SC-derived volatility. Other topics addressed included common option features used for SC modeling and specific types of options that have been modeled. This section gives an overview of these SC applications.

Malliaris and Salchenberger (1994) compared the estimated volatility of daily S&P 100 Index stock market options using implied volatility, historical volatility, and a volatility based on a FFNN (13-9-1) with BP. They used the following 13 features: change in closing price, days to expiration, change in open put volume, the sum of the at-the-money strike price and market price of the option for both calls and puts for the current trading period and the next trading period, daily closing volatility for current period, daily closing volatility for next trading period, and four lagged volatility variables. They concluded that the NN was far superior to the historical method.

Chen and Lee (1997) illustrated how GAs, as an alternative to NNs, could be used for option pricing. To this end, they tested the ability of GAs to determine the price of European call options, assuming the exact price could be determined using Black-Scholes option pricing theory. They concluded that the results were encouraging.

Anders et al. (1998) used statistical inference techniques to build NN models to explain the prices of call options written on the German stock index DAX. Some insight into the pricing process of the option market was obtained by testing for the explanatory power of several NN inputs. Their results indicated that statistical specification strategies lead to parsimonious NNs with superior out-of-sample performance when compared to the Black-Scholes model. They further validated their results by providing plausible hedge parameters.

Gottschling et al.
(1999) discussed a novel way to price a European call option using a proposed new family of density functions and the flexible structure of NNs. The density functions were based upon the logarithm of the inverse Box-Cox transform.⁸ Essentially, they viewed the activation function of a NN as a univariate pdf and constructed their family of probability density functions, which have the property of closed form integrability, as the output of a single hidden layer NN. Then, observing that the price of a European call option could be expressed in terms of an integral of the cumulative distribution function of risk neutralized asset returns, they derived a closed form expression from which the free parameters could then be estimated.

Yao et al. (2000) used a FFNN with BP to forecast the option prices of the Nikkei 225 index futures. Different data groupings affected the accuracy of the results and they concluded that the data should be partitioned according to moneyness (the quotient of stock prices to strike prices). Their results suggested that for volatile markets a NN option-pricing model outperforms the traditional Black-Scholes model, while the Black-Scholes model is appropriate for pricing at-the-money options.

Amilon (2001) examined whether a FFNN with BP could be used to find a call option pricing formula that corresponded better to market prices and the properties of the underlying asset than the Black-Scholes formula. The goal was to model a mapping of some input variable onto the observed option prices and to benchmark against the Black-Scholes model using historical and implicit volatility estimates.
Amilon found that, although the NNs were superior in the sense that they outperformed the benchmarks in both pricing and hedging performance, the results often were insignificant at the 5% level.

6 Bond Ratings

Bond ratings are subjective opinions on the ability to service interest and debt by economic entities such as industrial and financial companies, municipals, and public utilities. They are published by major bond rating agencies, like Moody's and Standard & Poor's, who guard their exact determinants. Several attempts have been made to model these bond ratings, using methods such as linear regression and multiple discriminant analysis, and in recent years SC has been applied to the problem. This section provides an overview of three such SC studies.

Surkan and Ying (1991) investigated the feasibility of bond rating formulas derived through simplifying a trained FFNN with BP. Under their method, features are systematically eliminated, based on the magnitude of the weights of the hidden layer and subject to error tolerance constraints, until all that remains is a simple, minimal network. The network weights then provide information for the construction of a mathematical formula. In their example, the result of refining the network model was a reduction from the seven features provided in the original financial data to only the two that contribute most to bond rating estimates. The derived formula was found to generalize very well.

Although not specifically addressing bond ratings, one example of how FL could be applied to bonds was provided by Hosler (1992 p. 15), who showed how MFs could be used to describe the risk of call of a security. She noted that randomness is associated with the behavior of market interest rates and that fuzziness arises from the subjective opinion of the investor.
The function could be altered to reflect the desirability of the security based upon the call risk.

Daniels and Kamp (1999) applied NNs to bond rating, with a special emphasis on the flexibility of the NNs and their validity, especially when the number of observations is small. Their aim was to establish a general network construction procedure and, to that end, they discussed how techniques such as cross-validation and monotonicity analysis9 can be effectively combined to optimize the NN. A special class of monotonic NNs and a corresponding training algorithm were developed.

7 Portfolio Construction

Portfolio construction is that part of the investment process that involves the determination of which assets to invest in and the proportion of funds to invest in each of the assets. At a minimum, effective portfolio optimization involves simultaneously maximizing the portfolio return and minimizing the portfolio risk, subject to various constraints, but it also can involve such things as maximizing the possibility of reaching higher returns. This section provides an overview of some of the SC studies in this area.

Lowe (1994) demonstrated the use of NNs in two types of capital market problems: effective portfolio optimization and short-term prediction of multiple equities. Assuming the existence of a market portfolio, his first goal was to find an approximating portfolio that minimized the risk, defined in terms of the mean squared error between the market portfolio and the approximating portfolio, subject to constraints, which he transformed into an analytic cost function, and resolved using an analog NN. He viewed short-term equities prediction as a problem in nonlinear multichannel time series forecasting, which can be addressed by a FFNN and resolved using a radial basis function.
The network then was used to predict the one stock in the approximating portfolio that would gain the most in the next investment period.

Wendt (1995) used a GA to build a portfolio efficient frontier. The underlying data consisted of 250 scenarios of annual returns for eight asset classes. To evaluate the GA process, the final GA output was compared to the efficient frontier created by a sophisticated nonlinear optimizer. After about 50 cycles, the GA found portfolios very close to the efficient frontier generated by the nonlinear optimizer.

Guo and Huang (1996) used a possibilistic linear programming method for optimal asset allocation based on simultaneously maximizing the portfolio return, minimizing the portfolio risk and maximizing the possibility of reaching higher returns. This was analogous to maximizing mean return, minimizing variance and maximizing skewness for a random rate of return. The authors conceptualized the possibility distribution of the imprecise rate of return of the i-th asset of the portfolio as the fuzzy number

$$\tilde{r}_i = (r_i^p, r_i^m, r_i^o),$$

where $r_i^p$, $r_i^m$, and $r_i^o$ were the most pessimistic value, the most possible value, and the most optimistic value for the rate of return, respectively. They then defined the imprecise rate of return for the entire portfolio by taking the weighted averages of these values.
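
A weighted average of triangular fuzzy returns can be sketched in Python as follows. This is only an illustration under stated assumptions, not necessarily the exact formulation used by Guo and Huang: each asset's return is a triple (pessimistic, most possible, optimistic), the weights are non-negative portfolio proportions, and the average is taken component by component. The name `portfolio_fuzzy_return` and its arguments are hypothetical.

```python
def portfolio_fuzzy_return(weights, fuzzy_returns):
    """Componentwise weighted average of triangular fuzzy returns.

    weights       -- non-negative portfolio proportions (assumed long-only)
    fuzzy_returns -- one (pessimistic, most_possible, optimistic) triple per asset
    Returns the portfolio's (pessimistic, most_possible, optimistic) triple.
    """
    if len(weights) != len(fuzzy_returns):
        raise ValueError("need one fuzzy return per weight")
    if any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    for r_p, r_m, r_o in fuzzy_returns:
        if not (r_p <= r_m <= r_o):
            raise ValueError("each triple must satisfy pessimistic <= possible <= optimistic")
    # Normalize so the weights act as proportions, then average each component.
    return tuple(
        sum(w * r[k] for w, r in zip(weights, fuzzy_returns)) / total
        for k in range(3)
    )
```

With non-negative weights the resulting triple keeps the same ordering as its inputs, so the portfolio return is again a triangular fuzzy number whose three components can each be targeted by an optimizer.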
