An Introduction to Real-Time Stock Market Data Processing

Posted on: 16 March 2015


UPDATES

Added additional market and security event data files, available for download at sourceforge.net/projects/sparkapi/files/MarketDataFiles/, for those who wish to experiment further.

Added explicit instructions for swapping the ‘SparkAPI’ project over to use the 32-bit version of the native spark.dll file.

Introduction

The goal of this article is to introduce the concepts, terminology and code structures required to develop applications that utilise real-time stock market data (e.g. trading applications). It discusses trading concepts and the different types of market data available, and it provides a practical example of how to process data feed events into a market object model.

The article is aimed at intermediate to advanced developers who wish to gain an understanding of basic financial market data processing. I recommend that those who are already familiar with trading terminology skip ahead to the Market Data section.

The article is structured as follows:

- An introduction to the concepts, rules and terminology associated with stock markets and trading

- A discussion of market data: the different types, the different grades and its availability

- A walkthrough of code that replays market data events from file, and processes them to generate market data structures (e.g. securities, trade histories) that can be used for higher level processes such as algorithmic trading.

Market Concepts & Terminology

The following section explains the basic terms and concepts related to trading and market data structures. It is framed in terms of the equities (stock) market, but generally applies to most trading markets (e.g. derivatives, commodities, etc.).

Orders & Trades

A trade occurs when a seller agrees to transfer ownership of a specified quantity of stock to a buyer at a specified price. How do buyers and sellers meet? They use a centralised marketplace called a stock market, where participants announce their desire to buy or sell a specific stock: ‘I want to buy 500 shares in BHP for $35.00’, ‘I want to sell 2,000 shares in RIO for $65.34’. These are called orders. Buy orders are also referred to as bid orders. Sell orders are also referred to as ask or offer orders.

When a buy order’s price is equal to or higher than the lowest-priced sell order currently available, a trade occurs. When a sell order’s price is equal to or lower than the highest-priced buy order currently available, a trade occurs. This process is also known as a match, because the buy and sell prices must match or cross over for a trade to occur.

What happens if an order is submitted to the market, but it does not match? It is entered into a list of orders called the order book. The order will remain there until the trader cancels it, or it expires (e.g. end of day if it is a day order). Some orders expire immediately after matching against what is available on the order book. These are known as ‘immediate or cancel’ (IOC) or ‘fill and kill’ (FAK) orders. These orders are never entered into the order book regardless of whether they match or not.

Order Book

The order book contains all the offers to buy and sell a particular stock that have not been matched. It’s like the classified advertisements for shares: everyone can see all the offers to buy and sell. The limit order book is also referred to as the book, the depth or the queue.

An order in the book can only be matched against an incoming order if it is the highest priority order (i.e. it must be at the top of the book). It’s like standing in a queue: you must be at the head of the line to get served.

Orders in the order book are ordered by price-time priority. This means that orders are sorted first by price and then by time of submission. The highest priority buy order will be the buy order with the highest price that was submitted first. The highest priority sell order will be the sell order with the lowest price that was submitted first.
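As a rough illustration of price-time priority, the Python sketch below sorts each queue with a single key. The key is price first (descending for bids, ascending for asks), then submission time. The `Order` class, the integer-cent prices and the zero-padded `HH:MM:SS` time strings are assumptions made for illustration. They are not taken from the article's SDK, and the order values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Order:
    side: str      # "buy" or "sell"
    price: int     # price in integer cents (e.g. 2334 means $23.34)
    quantity: int
    time: str      # zero-padded "HH:MM:SS", so string order matches time order


def priority_key(order):
    """Sort key for price-time priority.

    Bids: highest price first. Asks: lowest price first.
    Orders at the same price are ranked by earliest submission time.
    """
    price_rank = -order.price if order.side == "buy" else order.price
    return (price_rank, order.time)


def sort_queue(orders):
    """Return a new list ordered from highest to lowest priority."""
    return sorted(orders, key=priority_key)


# Hypothetical orders: the later 100-share bid outranks the earlier 19-share
# bid because its price is higher.
bids = [Order("buy", 2330, 19, "10:00:01"), Order("buy", 2334, 100, "10:00:05")]
asks = [Order("sell", 2336, 50, "10:00:07"), Order("sell", 2336, 200, "10:00:02")]
print([o.quantity for o in sort_queue(bids)])  # [100, 19]
print([o.quantity for o in sort_queue(asks)])  # [200, 50]
```

A real order book would keep each queue sorted incrementally as orders arrive rather than re-sorting, but the ordering rule is the same.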

Here is an example order book showing two queues of orders in price-time priority: one queue for the buy (bid) orders and one queue for the sell (ask) orders. The time refers to the submission time of each order:

The bid (buy) orders and ask (sell) orders are arranged from top to bottom in priority. The ask orders are ordered by time (earliest first) because they are all at the same price. Notice that the bid order for 100 shares is higher in priority than the order for 19 shares, even though it was submitted later, because it has a higher price.

Trade Example

I will now step through a trade match using the example order book shown above. Consider that a sell order for 150 shares at 23.34 is submitted into the market (the short-hand for this order would be: Sell 150@23.34). The new order is marked in yellow:

Notice the bid and ask prices are now crossed. After each order is submitted, the exchange checks for crossed prices and then performs the required number of matches to return the market to an uncrossed state. In this case, the exchange matches the 150-share sell against the 100-share buy at 23.34, generating a trade for 100 shares at 23.34 (100@23.34). The remaining 50-share sell order is now the highest priority ask order in the book:
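The matching step can be sketched in a few lines of Python. This is a simplified model, not the exchange's actual algorithm. It makes three assumptions:

- The bid queue is already in price-time priority.
- Prices are in integer cents.
- Each trade executes at the resting order's price, which is the usual convention in continuous trading.

The 19-share bid at 23.30 is a hypothetical second order, added so that something is left in the book after the match.

```python
from dataclasses import dataclass


@dataclass
class Order:
    price: int     # integer cents
    quantity: int


def match_sell(sell, bids):
    """Match an incoming sell order against a bid queue in priority order.

    Returns (trades, remainder). trades is a list of (quantity, price) tuples;
    remainder is the unfilled part of the sell order, or None if fully filled.
    The bids list is modified in place.
    """
    trades = []
    # Keep matching while the prices are crossed (best bid >= sell price).
    while sell.quantity > 0 and bids and bids[0].price >= sell.price:
        best = bids[0]
        qty = min(sell.quantity, best.quantity)
        trades.append((qty, best.price))  # resting order's price sets the trade price
        sell.quantity -= qty
        best.quantity -= qty
        if best.quantity == 0:
            bids.pop(0)  # a fully filled bid leaves the book
    return trades, (sell if sell.quantity > 0 else None)


bids = [Order(2334, 100), Order(2330, 19)]
trades, remainder = match_sell(Order(2334, 150), bids)
print(trades)      # [(100, 2334)]
print(remainder)   # Order(price=2334, quantity=50)
```

In a complete engine, the remainder would then be inserted into the ask queue at its price-time position.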

Market Quotes

Often traders are not interested in the entire order book of a stock, but just the highest bid and the lowest ask prices currently in the market, and the quantity available at those prices. All this information together is known as a market quote.

Spread

When a market is in continuous trading (not closed or in an auction state), the bid price must always be lower than the ask price; if they were crossed, a trade would occur. The difference between the lowest ask and highest bid price is called the spread.
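A market quote and the spread can both be read straight off the top of the book. The sketch below assumes each queue is a list of `(price, quantity)` tuples already sorted in price-time priority. Prices are in integer cents so the subtraction is exact. The function name, return format and order values are hypothetical.

```python
def market_quote(bids, asks):
    """Best bid/ask, total quantity at those prices, and the spread.

    bids and asks are lists of (price_cents, quantity) in priority order.
    Returns None if either side of the book is empty.
    """
    if not bids or not asks:
        return None
    bid_price, ask_price = bids[0][0], asks[0][0]
    return {
        "bid_price": bid_price,
        "bid_quantity": sum(q for p, q in bids if p == bid_price),
        "ask_price": ask_price,
        "ask_quantity": sum(q for p, q in asks if p == ask_price),
        "spread": ask_price - bid_price,  # positive whenever the book is uncrossed
    }


quote = market_quote([(2334, 100), (2334, 40), (2330, 19)], [(2336, 200), (2336, 50)])
print(quote["bid_quantity"], quote["ask_quantity"], quote["spread"])  # 140 250 2
```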

Prices to buy and sell can only be changed in specified increments (e.g. you can’t offer to buy at $35.0001). The minimum price change for a stock is called its tick size. Consequently, the minimum spread for each stock is dictated by its tick size. The lower the share price of the stock, the smaller the tick size will be. For example, tick sizes on the Australian market are:

- $0.001 for a share price under $0.10

- $0.005 for a share price under $2.00

- $0.01 for a share price equal to or greater than $2.00
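The tick schedule above translates directly into a lookup. A price is valid only if it is a whole multiple of its tick. This Python sketch uses `decimal.Decimal` to avoid binary rounding. It encodes only the three bands listed here, so treat it as illustrative and check the exchange's current rules before relying on it. The function names are hypothetical.

```python
from decimal import Decimal


def tick_size(price):
    """Tick size for a share price, using the three bands listed above."""
    if price < Decimal("0.10"):
        return Decimal("0.001")
    if price < Decimal("2.00"):
        return Decimal("0.005")
    return Decimal("0.01")


def is_valid_price(price):
    """True if price sits exactly on a tick boundary."""
    return price % tick_size(price) == 0


print(tick_size(Decimal("35.00")))           # 0.01
print(is_valid_price(Decimal("35.0001")))    # False: not a multiple of $0.01
print(is_valid_price(Decimal("1.235")))      # True: a multiple of $0.005
```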

Market Data

There are several different ‘grades’ of market data. Data quality is determined by its granularity and its detail.

Granularity

Granularity refers to the observational time interval of the data. Snapshot observations record a particular moment in time (for example daily closing prices, or market quotes for each minute of the day). Event-based observations are recorded each time a relevant field changes (e.g. trade update, change to the order book).

Event-level data sets are superior to snapshot data sets because a snapshot view can always be derived from event data; the inverse does not hold true. If they are so much better, why aren’t all data sets supplied in event form? There are a few reasons:

- They are large to store.
- They are harder to code against.
- They are slower to process.
- Not everyone needs this level of detail.
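To make the point about derivation concrete, here is a hypothetical Python sketch that turns a stream of quote events into one-minute snapshots. It carries forward the latest quote at each minute boundary. The `(time, bid, ask)` tuple layout is an assumption. Going the other way is impossible, because any events between two snapshots are simply not recorded.

```python
def minute_snapshots(events, start, end):
    """Sample event-level quotes at every 60-second boundary from start to end.

    events: list of (unix_seconds, bid, ask) tuples sorted by time.
    Returns {boundary: (bid, ask)} holding the latest quote at or before each
    boundary, or None if no quote has arrived yet.
    """
    snapshots = {}
    current = None
    i = 0
    for boundary in range(start, end + 1, 60):
        # Consume every event up to and including this boundary.
        while i < len(events) and events[i][0] <= boundary:
            current = events[i][1:]
            i += 1
        snapshots[boundary] = current
    return snapshots


events = [(0, 10, 11), (30, 10, 12), (61, 11, 12), (150, 12, 13)]
print(minute_snapshots(events, 0, 180))
# {0: (10, 11), 60: (10, 12), 120: (11, 12), 180: (12, 13)}
```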

Detail

Detail refers to what information is contained in the data set. There are three levels of market data detail: trades, quotes and depth. Trade & quote updates combined together are often referred to as Level 1 (L1) data. Depth updates are referred to as Level 2 (L2) data.

Trade Updates

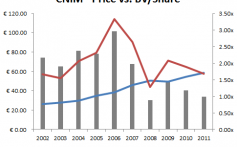

The simplest level of detail occurs in the form of trade prices (e.g. daily closing prices). These are widely available and are often used by retail investors to select a stock to buy and hold for a longer investment period (e.g. months, years).

Below is a sample of daily closing prices for BHP traded on the ASX:

A big step up from daily closing prices is intraday trade history (also known as tick data), which contains a series of records detailing every trade that occurred for a stock. A good trade data set will contain the following fields:

- Symbol — Security symbol (e.g. BHP)

- Exchange — Exchange the trade occurred on (e.g. ASX, CXA)

- Price — Transaction price

- Quantity — Transaction quantity

- Time — Transaction date and time (a good dataset will record this to the millisecond or microsecond)

- Trade Type (Condition Codes) — What type of trade it was (e.g. standard, off-market trade report, booking purpose trade)

Below is a sample of intraday trade records for FMG (Fortescue Metals Group) traded on the ASX for the 30th Sep 2011:

NOTE: The ‘XT’ flag indicates that the trade was a crossing. This occurs when the same broker executes both sides of the trade (e.g. one client is buying through the broker and another is selling).

Intraday trade records are often used in retail trading software for intraday charting and technical analysis. Sometimes people will attempt to backtest a trading strategy (i.e. evaluate its performance using historical data) using trade records. Don’t do this if you are testing intraday strategies: your results will be useless, because the last trade price won’t always reflect the price you could actually have bought or sold at that time.

The charts displayed by Google Finance for a security are generated using intraday trade records (with the trade time on the x-axis and trade price on the y-axis).

Quote Updates

The next step up in market data detail is the inclusion of market quote updates. A good market quote data set will contain a record of the following fields every time there is a change:

- Symbol — Security symbol (e.g. BHP)

- Exchange — Exchange the quote is from

- Time — Quote update time

- BidPrice — Highest market bid price

- BidQuantity — Total quantity available at market bid price

- AskPrice — Lowest market ask price

- AskQuantity — Total quantity available at market ask price

Some quote data sets will provide these additional fields, which are useful for certain types of analytics, but not particularly relevant for backtesting:

- BidOrders — Number of orders at market bid price

- AskOrders — Number of orders at market ask price

Below is a sample of real-time quote update events for NAB (National Australia Bank) on the 31st Oct 2012:

A good quality market quote dataset is all that is required for back-testing intraday trading strategies, as long as you only plan to trade against the best market price rather than posting orders in the order book and waiting for fills. (This puts aside issues of data feed latency, execution latency and other more advanced topics for now.)
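Under those simplifying assumptions, a backtest fill can be modelled directly from the quote. A marketable buy takes the ask, and a marketable sell takes the bid. Any quantity larger than the displayed amount cannot be filled from quote data alone. The `Quote` class and the fill functions below are hypothetical Python, with prices in integer cents. Like the paragraph above, they ignore latency.

```python
from dataclasses import dataclass


@dataclass
class Quote:
    bid_price: int     # integer cents
    bid_quantity: int
    ask_price: int
    ask_quantity: int


def fill_market_buy(quote, quantity):
    """Return (filled_quantity, price) for a buy executed against the best ask.

    Quote data shows only the best level, so quantity beyond ask_quantity is
    left unfilled rather than guessed at a deeper price.
    """
    return min(quantity, quote.ask_quantity), quote.ask_price


def fill_market_sell(quote, quantity):
    """Return (filled_quantity, price) for a sell executed against the best bid."""
    return min(quantity, quote.bid_quantity), quote.bid_price


q = Quote(bid_price=2334, bid_quantity=500, ask_price=2336, ask_quantity=300)
print(fill_market_buy(q, 1000))  # (300, 2336)
```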

Depth Updates

The final level of market data detail is the inclusion of market depth updates. Depth updates contain a record of every change to every order in the order book for a particular security. Depth data sets are often limited. Some only provide an aggregated price view (the total quantity and number of orders at each price level), while others only provide the first few price levels.

A good depth update data set will contain a record of the following fields every time one of them changes:

- Symbol — Security symbol (e.g. BHP)

- Exchange — Exchange the update is from

- Time — Order update time

- Order Position OR Unique Order Identifier — This identifies which order has been changed (either by relative position in the queue, or by an order Id)

- Quantity — Order quantity

- Update Type — NEW or ENTER (a new order has been entered), UPDATE (volume has been amended) or DELETE (order has been removed)

Market depth updates are required for accurately back-testing intraday trading strategies where you are submitting orders that will enter the order book queue rather than executing immediately at the best market price.

Data Availability

Market data comes in two forms: live and historical. A live market data feed is required for trading; historical data sets are used for analysis and back-testing. Historical daily closing prices are publicly available for free from a variety of sources. For example, you can access recent historical daily closing prices for BHP (BHP Billiton) from Google Finance. Most data and trading software vendors can provide historical intraday trade data for a specified time window (e.g. 6 months).

Live intraday trade data can also be accessed on the internet for free, but it is often delayed (20 minutes is standard) to prevent users from trading with it. Non-delayed live intraday trade data should be available through any trading software vendor for a modest price. All good trading software vendors will provide live quote and trade data via their user interface. Some higher-quality vendors will provide quote and trade (Level 1) intraday data live via an API (e.g. Interactive Brokers). Historical Level 1 data can be harder to acquire but is available through some vendors.

Live depth updates (Level 2) are very commonly accessible via the trading software user interface using the security depth view. However, you don’t see the update event details, just an up-to-date version of the order book, and it is very rare to be able to access this data via an API.

Historical Level 2 update records are virtually impossible to acquire as a retail investor, and are generally only kept by research institutions (e.g. SIRCA) or privately recorded by trading institutions (e.g. investment banks, market makers, high-frequency trading (HFT) groups) for their own use.

Code Example

The figure below displays the basic flow of market data processing:

This code example is concerned with the first two layers: receiving events and processing them into an object model that can be used by higher-level processes.

The remainder of the code example is structured as follows:

- Market Data Interface — A discussion of the market data source used in the code example

- Reading Events — How to set up and execute a market data feed replay from file

- Processing Events — How to process the events into an object model

The Market Data Interface

Different data feeds have their own formats (e.g. overlaid C structures via an API, FIX-like messages in fixed-length strings), but they all contain a similar set of information.

The market data source used in this code example is the Spark API (iguana2.com/spark-api).

One of the useful things about the API is that it standardises and interleaves the market data feeds from different exchanges into a unified view of the market. For example, the ASX market data interface comes in via a C-based component called Trade OI, which is based on Genium INET technology from NASDAQ. Australia’s secondary exchange, Chi-X, provides a market data feed via a fixed-length string stream that uses tag-value combinations in a semi-FIX-like structure.

For those interested in learning about coding against an exchange market data feed, the Spark API provides the closest equivalent I’ve encountered that is available to retail investors. With the extensions I’ve written in the Spark API SDK, it can run historical data files in off-line mode without requiring a connection to the Spark servers.

If you are interested in seeing what institutional-grade market data feeds look like, here are some links to institutional vendor and exchange trade feed specifications I’ve compiled:

The Spark API SDK is a C# component I’ve written to provide easy access to the Spark API and to smooth over the quirks that come from accessing a native C component via .NET. It also includes the classes required to process the event feed. These classes represent the feed as objects useful for higher-level logic, such as trades, orders, order depth and securities.

The SparkApi C# component contains three primary namespaces:

- Data — Contains all the logic for executing queries and establishing live data feeds from the Spark API, and loading and replaying historical event data files

- Market — Contains classes used to represent market related objects such as trades, limit orders, order depth and securities.

- Common — Contains general functions related to file management, serialisation, logging, etc.

I’ll refer to specific classes from these namespaces in the following sections as we examine the concepts related to market data processing. The code in the Spark API SDK will be used as the example of how to access and process a market data feed.

UPDATE: While there is a sample market data file included in the source code package, I’ve made additional market and security event data files available for download at sourceforge.net/projects/sparkapi/files/MarketDataFiles/ for those who wish to experiment further.

IMPORTANT: The SparkAPI component in the SDK references the ‘Spark.Net.dll’ .NET library to access the Spark API. However, ‘Spark.Net.dll’ is actually an interop wrapper around a native C library called ‘spark.dll’. As the C library is neither a .NET assembly nor a COM object, it cannot be referenced directly. There are 32-bit and 64-bit versions of spark.dll included in the download; however, the solution is set up by default to use the 64-bit version. If you are running on a 32-bit machine, please follow the instructions below to swap the SparkAPI project over to the 32-bit version.

INSTRUCTIONS:

In the Spark API project:

- Select ‘References’, then delete the ‘Spark.Net’ reference.

- Right-click on ‘References’ and select ‘Add Reference’.

- Navigate to the Spark API binaries folder, selecting the correct OS version (32-bit or 64-bit), and then select ‘Spark.Net.dll’ (the default location in the sample is Assemblies\Spark).

- Delete the ‘spark.dll’ file in the SparkAPI project.

- Right-click on the ‘SparkAPI’ project and select ‘Add’ > ‘Existing Item’.

- Navigate to the Spark API binaries folder, selecting the correct OS version (32-bit or 64-bit), and then select ‘spark.dll’ (the default location in the sample is Assemblies\Spark).

- Right-click on the file in the SparkAPI project, and select ‘Properties’.

- Set the property ‘Copy To Output Directory’ equal to ‘Copy If Newer’.

In this code example, we will be processing market data updates from the Spark.Event structure supported by the Spark API.

Below is a class diagram showing the Spark.Event struct in its C# form (rather than native C form):

The following fields are relevant to all message types:

- Time — Unix time (the number of seconds since 00:00:00 UTC on 1-Jan-1970)

- TimeNsec — Nanoseconds (not currently populated for data stream compression reasons)

- Code — Security code (e.g. BHP) (will have a _CX suffix if from Chi-X)

- Exchange — Security exchange

- Type — Event type code (maps to the Spark.EVENT_X constant list shown above)
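Converting the `Time` and `TimeNsec` pair into a timestamp takes only a few lines in most languages. This Python sketch produces a timezone-aware UTC `datetime`. The function name is hypothetical. Because `datetime` only has microsecond resolution, any nanoseconds are truncated to whole microseconds.

```python
from datetime import datetime, timedelta, timezone


def event_timestamp(time_sec, time_nsec=0):
    """Convert Unix seconds (+ optional nanoseconds) to an aware UTC datetime.

    datetime stores microseconds, so time_nsec is truncated to whole microseconds.
    """
    base = datetime.fromtimestamp(time_sec, tz=timezone.utc)
    return base + timedelta(microseconds=time_nsec // 1000)


print(event_timestamp(1349049600))  # 2012-10-01 00:00:00+00:00
```

To display exchange-local time (e.g. Sydney), the result can be converted with `astimezone()` and a suitable time zone database.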

The other fields are only relevant to some event types:

- Trade update (EVENT_TRADE, EVENT_CANCEL_TRADE):

  - Price — Integer representation of trade price

  - Volume — Trade quantity

  - Condition Codes — Trade type (e.g. XT, CX)

- Depth update (EVENT_AMEND_DEPTH, EVENT_NEW_DEPTH, EVENT_DELETE_DEPTH):

  - Price — Integer representation of order price

  - Volume — Order quantity

  - Flags — Contains bit flag indicating order side

  - Position — Index position of order in the bid or ask depth queue

- Market state update (EVENT_STATE_CHANGE):

  - State — Market state type (const list is contained in the Spark API)

Stock market related applications often perform comparison operations on prices. For example, an application may compare an aggressive market order price against a limit price in the order book to determine whether a trade has occurred. Because prices are expressed in dollars and cents, the natural choice is to represent them in code as floating-point numbers (float or double). However, binary floating-point types cannot represent most decimal fractions exactly. Comparisons are therefore error prone (e.g. 36.0400000001 != 36.04) and can yield unpredictable results.

To avoid this issue, stock market related applications often convert prices internally into an integer format. This ensures exact comparison operations. It can also reduce the memory footprint and speed up comparisons, as integer operations are typically cheaper on a CPU than floating-point operations.

The sub-dollar portion of a price is retained in integer format by multiplying the price by a scaling factor. For example, the price 34.25 becomes 342500 with a scaling factor of 10,000. Four decimal places are sufficient to represent every valid price in the Australian market. The finest price that must be represented is a mid-point trade on a sub-$0.10 stock with a tick size of 0.001 (e.g. Bid=0.081, Ask=0.082, Mid=0.0815).
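Here is a minimal Python sketch of the scaling approach, assuming the scale factor of 10,000 described above. It parses through `decimal.Decimal` and rounds to the nearest integer. Because of that rounding, even a float input such as `36.04`, whose binary value is slightly below 36.04, still maps to the intended integer. The helper names are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

SCALE = 10_000  # four decimal places


def to_int_price(price):
    """Convert a price (str, int, float or Decimal) to a scaled integer."""
    scaled = Decimal(price) * SCALE
    return int(scaled.to_integral_value(rounding=ROUND_HALF_UP))


def to_decimal_price(int_price):
    """Convert a scaled integer back to an exact Decimal price for display."""
    return Decimal(int_price) / SCALE


# Floats accumulate representation error; scaled integers compare exactly.
print(0.1 + 0.2 == 0.3)                                                  # False
print(to_int_price("0.1") + to_int_price("0.2") == to_int_price("0.3"))  # True
print(to_int_price("34.25"), to_int_price("0.0815"))                     # 342500 815
```

Passing prices in as strings, as they usually arrive in a text feed, avoids the float step entirely.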

An accuracy of four decimal places works for market prices. Calculated values such as the average execution price of an order, however, are better stored in a double or decimal type, as they do not require exact comparisons.

Reading Events

The following steps are required to process a Spark data feed:

- Initialise data feed connection and login to the Spark server (not required if replaying from file)

- Subscribe to the event-feed for a specified security or exchange

- Create instances of the Security class to process the market data events

The Spark API SDK does a lot of this work automatically for you.

Here is some example code that replays a stock data file, processes the events in a security and writes the trade and quote updates to the console:

Let’s drill down into this and see what is happening.

The first step is to read the events from the market data file. Obviously, this step is not required when you are connected to a market data server that is delivering the events via an API. Here are the event feed structures available in the SDK shown in a class diagram:

The ApiSecurityEventFeed and ApiMarketEventFeed classes are used when receiving live market data. As we are replaying from file, we’ll use the ApiEventFeedReplay class. When the ApiEventFeedReplay.Execute() method is called, it starts streaming lines from the event file and parsing them into the required data struct:

The ApiEventReaderWriter class contains all the logic required to read and write Spark events to file. We stream the events from file one at a time rather than reading them all into memory at once. Loading every event from the exchange into memory before processing would generate an out-of-memory exception on a 32-bit build. Streaming is also much faster.
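The streaming approach maps naturally onto a generator. The Python sketch below reads one line at a time and yields a parsed event, so memory use stays flat regardless of file size. The comma-separated line format and `parse_event` are hypothetical stand-ins. The real Spark file format is defined by `ApiEventReaderWriter` and is not reproduced here.

```python
import io


def parse_event(line):
    """Hypothetical parser for a 'code,exchange,type,unix_time' line."""
    code, exchange, event_type, time_sec = line.strip().split(",")
    return {"code": code, "exchange": exchange, "type": event_type, "time": int(time_sec)}


def stream_events(file_obj):
    """Yield one parsed event per non-blank line; never loads the whole file."""
    for line in file_obj:
        if line.strip():
            yield parse_event(line)


# io.StringIO stands in for an opened event file so the example is self-contained.
sample = io.StringIO("BHP,ASX,TRADE,1349049600\n\nBHP_CX,CXA,TRADE,1349049601\n")
for event in stream_events(sample):
    print(event["code"], event["exchange"])
```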

Each line read from file is parsed into a Spark.Event struct using the SparkAPI.Data.ApiEventReaderWriter.Parse() method. It is then passed to the event processing method specified in the StreamFromFile command. In the case of a replay from file, this will be the ApiEventFeedReplay.EventReceived() method, which in turn calls the ApiEventFeedBase.RaiseEvent() method. The ApiEventFeedBase.RaiseEvent() method is where the replay and live event feed code paths align: all feed classes (ApiEventFeedReplay, ApiMarketEventFeed and ApiSecurityEventFeed) inherit from ApiEventFeedBase.

Let’s have a look and see what it does:

The EventFeedArgs contains a reference to the Spark.Event struct, a time-stamp for the event, and a symbol and exchange identifier. The ApiEventFeedBase class supports two mechanisms to propagate events:

- Directly by calling its own OnEvent event, or

- By calling the EventReceived() method of any securities that have been associated with the event feed.

The Security dictionary lookup allows the event feed to pipe event updates to the correct security and ignore the rest.
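The two propagation paths can be modelled in a few lines. The class names in this Python sketch echo the SDK's, but the code itself is hypothetical. A list of callbacks stands in for the `OnEvent` event. The security dictionary is keyed by base symbol, on the assumption that the `_CX` suffix on Chi-X codes is stripped so that both venues reach the same `Security`.

```python
class Security:
    """Minimal stand-in that just records the events routed to it."""

    def __init__(self, symbol):
        self.symbol = symbol
        self.events = []

    def event_received(self, event):
        self.events.append(event)


class EventFeed:
    def __init__(self):
        self.listeners = []    # callbacks, playing the role of OnEvent
        self.securities = {}   # base symbol -> Security

    def add_security(self, security):
        self.securities[security.symbol] = security

    def raise_event(self, event):
        # Path 1: notify every general subscriber.
        for listener in self.listeners:
            listener(event)
        # Path 2: route to the matching security; unknown symbols are ignored.
        code = event["code"]
        base = code[:-3] if code.endswith("_CX") else code
        security = self.securities.get(base)
        if security is not None:
            security.event_received(event)


feed = EventFeed()
bhp = Security("BHP")
feed.add_security(bhp)
seen = []
feed.listeners.append(seen.append)
for code in ("BHP", "BHP_CX", "NAB"):
    feed.raise_event({"code": code})
print(len(seen), len(bhp.events))  # 3 2
```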

In order to make this example easier to understand and step through via debugging, I’ve kept the entire market data processing sequence in a single thread. In practice, different tasks such as market data event processing and analytics are often allocated to different threads or different processes. This is a topic for a separate article.

If you’re interested, there is an event replay feed in the SDK using a multi-threaded implementation called SparkAPI.Data.ApiEventFeedThreadedReplay. This implements a producer-consumer pattern where the producer thread streams the events from file, parses them into structs and adds them to a concurrent queue. The consumer thread dequeues the event using a blocking collection and performs further processing.
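For readers who want to see the shape of that pattern, here is a hedged Python analogue. It uses `queue.Queue`, whose `get()` blocks in much the same way as a .NET `BlockingCollection`. It is not a port of `ApiEventFeedThreadedReplay`. The sentinel that signals end-of-stream and the simple comma split standing in for parsing are assumptions.

```python
import queue
import threading

_END = object()  # sentinel telling the consumer the replay has finished


def producer(lines, q):
    """Parse each line (here: a plain comma split) and enqueue it."""
    for line in lines:
        q.put(line.strip().split(","))
    q.put(_END)


def consumer(q, handle):
    """Block on the queue and process events until the sentinel arrives."""
    while True:
        event = q.get()
        if event is _END:
            break
        handle(event)


def threaded_replay(lines, handle, maxsize=1000):
    # A bounded queue stops a fast producer from racing too far ahead.
    q = queue.Queue(maxsize=maxsize)
    threads = [
        threading.Thread(target=producer, args=(lines, q)),
        threading.Thread(target=consumer, args=(q, handle)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


received = []
threaded_replay(["BHP,ASX,TRADE", "NAB,ASX,TRADE"], received.append)
print(received)  # [['BHP', 'ASX', 'TRADE'], ['NAB', 'ASX', 'TRADE']]
```

With a single consumer, events are handled in file order. Adding consumers would break that ordering guarantee.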

Processing Events

So how do we represent all this market data in a useful object model? We need a Security class.

It contains the following properties:

- Symbol — The unique exchange symbol used to identify the security (e.g. BHP, NAB)

- MarketState — The current market state of the security (e.g. pre-open, auction, open, closed, etc.)

- Trades — List of all trades that have occurred for the stock for the day

- OrderBooks — A set of limit order books (depth) for the security, where each separate trading venue has its own order book.

The classes associated with representing the security object model are shown below:

The most complex area of the Security class relates to updating order depth in the LimitOrderBook class. We need to maintain the current order depth for each venue we receive data from. In Australia, there are two trading venues: the Australian Securities Exchange (ASX) and Chi-X Australia (CXA). As the Security class receives events for both venues, we store multiple LimitOrderBook objects in the OrderBooks dictionary, using the exchange ID (e.g. ASX, CXA) as the dictionary key.

A LimitOrderBook class contains two LimitOrderList classes (Bid and Ask), which represent the bid and ask order queues. The LimitOrderList is a wrapper for a List

Once events reach the Security object, they need to be interpreted to update the Security data objects and fields. Here is the method that processes the events:

Trades, quotes and market state updates only require converting the information in the event struct into an equivalent C# object and then updating the relevant property (for market state and quotes) or list (for trades). Updating the limit order book entries is more complex, so we’ll examine that in detail.
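A simplified dispatch in the spirit of that method might look like the Python sketch below. The event-type strings stand in for the `Spark.EVENT_X` constants, whose actual values are not given here. `LimitOrderBook` is a stub. Mirroring the `OrderBooks` dictionary, one book is created per exchange ID on first use.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Trade:
    price: int      # integer price, as in the event struct
    volume: int
    exchange: str


class LimitOrderBook:
    """Stub: records depth events instead of maintaining queues."""

    def __init__(self):
        self.events = []

    def submit_event(self, event):
        self.events.append(event)


@dataclass
class Security:
    symbol: str
    market_state: str = "UNKNOWN"
    quote: Optional[dict] = None
    trades: list = field(default_factory=list)
    order_books: dict = field(default_factory=dict)  # exchange ID -> LimitOrderBook

    def event_received(self, event):
        kind = event["type"]
        if kind == "TRADE":
            self.trades.append(Trade(event["price"], event["volume"], event["exchange"]))
        elif kind == "QUOTE":
            self.quote = event
        elif kind == "STATE_CHANGE":
            self.market_state = event["state"]
        elif kind in ("NEW_DEPTH", "AMEND_DEPTH", "DELETE_DEPTH"):
            book = self.order_books.get(event["exchange"])
            if book is None:  # first depth event for this venue
                book = self.order_books[event["exchange"]] = LimitOrderBook()
            book.submit_event(event)


bhp = Security("BHP")
bhp.event_received({"type": "TRADE", "price": 350000, "volume": 100, "exchange": "ASX"})
bhp.event_received({"type": "NEW_DEPTH", "exchange": "CXA", "position": 1})
print(len(bhp.trades), list(bhp.order_books))  # 1 ['CXA']
```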

In the LimitOrderBook.SubmitEvent() method, we determine whether the event should be applied to the Bid or Ask queue:

A lock is used when submitting an event, as the limit order book queues may be traversed by other threads that require the information.
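The side selection and locking could be sketched in Python as follows. `BID_FLAG` is a hypothetical bit value; consult the Spark API for the real one. `LimitOrderList` here is a stub that only records what it receives. The point is that the lock is held for the whole update, so a reader on another thread never sees a half-applied change.

```python
import threading

BID_FLAG = 0x01  # hypothetical: the real side bit is defined by the Spark API


class LimitOrderList:
    """Stub queue that records updates instead of applying them."""

    def __init__(self):
        self.events = []

    def submit_event(self, event):
        self.events.append(event)


class LimitOrderBook:
    def __init__(self):
        self.bid = LimitOrderList()
        self.ask = LimitOrderList()
        self._lock = threading.Lock()

    def submit_event(self, event):
        # The side bit in Flags decides which queue the update belongs to.
        side = self.bid if event["flags"] & BID_FLAG else self.ask
        # Hold the lock while mutating; readers should take the same lock.
        with self._lock:
            side.submit_event(event)


book = LimitOrderBook()
book.submit_event({"flags": BID_FLAG, "position": 1})
book.submit_event({"flags": 0, "position": 1})
print(len(book.bid.events), len(book.ask.events))  # 1 1
```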

Once we have a reference to the correct LimitOrderList object, its SubmitEvent() method is called:

The Position field in the event struct is the key to determining where the action should occur. For ENTER orders, it provides the insertion position; for AMEND or DELETE orders, it identifies the order to change. Note that Position is 1-based rather than 0-based.
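A position-based queue update can be sketched with a plain Python list. The update-type strings, the `LimitOrder` class and the method signature below are hypothetical. The essential details from the text are:

- ENTER inserts an order at `Position`.
- AMEND changes the volume of the order at `Position`.
- DELETE removes the order at `Position`.
- `Position` must be converted from 1-based to 0-based.

```python
from dataclasses import dataclass


@dataclass
class LimitOrder:
    price: int     # integer price
    volume: int


class LimitOrderList:
    """One side of the book, held in priority order (index 0 = top)."""

    def __init__(self):
        self.orders = []

    def submit_event(self, update_type, position, price, volume):
        index = position - 1  # feed positions are 1-based, list indices 0-based
        if update_type == "ENTER":
            self.orders.insert(index, LimitOrder(price, volume))
        elif update_type == "AMEND":
            self.orders[index].volume = volume
        elif update_type == "DELETE":
            del self.orders[index]
        else:
            raise ValueError(f"unknown update type: {update_type}")


bids = LimitOrderList()
bids.submit_event("ENTER", 1, 2334, 100)
bids.submit_event("ENTER", 2, 2330, 19)
bids.submit_event("ENTER", 1, 2335, 50)   # better price goes to the top
bids.submit_event("AMEND", 2, 2334, 60)   # the 100-share order is now second
bids.submit_event("DELETE", 1, 2335, 50)
print(bids.orders)
# [LimitOrder(price=2334, volume=60), LimitOrder(price=2330, volume=19)]
```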

Some data feeds do not provide a position value; instead, they supply a unique order identifier for each depth update. In this case, you will need to determine the correct location of ENTER orders based on price-time priority rules, and use the order ID via a hashtable lookup to locate orders when amending or deleting.
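One common structure for feeds keyed by order ID is a map from each price level to a FIFO of orders, plus a hashtable from order ID to price. It is shown here as a hypothetical Python sketch, not the SDK's design. New orders join the back of their price level, which gives time priority within a level. AMEND and DELETE find their order in constant time through the ID map. This sketch keeps an amended order's queue position. On many venues, however, an increase in volume costs the order its time priority, so check the feed's rules.

```python
from collections import OrderedDict


class OrderIdBookSide:
    """One side of an order-ID-based book, kept in price-time priority."""

    def __init__(self, is_bid):
        self.is_bid = is_bid
        self.levels = {}   # price -> OrderedDict(order_id -> volume), oldest first
        self.index = {}    # order_id -> price: the hashtable lookup

    def enter(self, order_id, price, volume):
        if price not in self.levels:
            self.levels[price] = OrderedDict()
        self.levels[price][order_id] = volume  # appended at the back of its level
        self.index[order_id] = price

    def amend(self, order_id, volume):
        price = self.index[order_id]
        self.levels[price][order_id] = volume  # updating a key keeps its position

    def delete(self, order_id):
        price = self.index.pop(order_id)
        level = self.levels[price]
        del level[order_id]
        if not level:
            del self.levels[price]  # drop empty price levels

    def queue(self):
        """All orders in priority order as (order_id, price, volume) tuples."""
        prices = sorted(self.levels, reverse=self.is_bid)
        return [(oid, p, v) for p in prices for oid, v in self.levels[p].items()]


bids = OrderIdBookSide(is_bid=True)
bids.enter("a", 2330, 19)
bids.enter("b", 2334, 100)
bids.enter("c", 2334, 40)
bids.amend("b", 60)
bids.delete("c")
print(bids.queue())  # [('b', 2334, 60), ('a', 2330, 19)]
```

`queue()` sorts the price levels on every call, which is fine for a sketch. A production book would keep the levels in a sorted structure instead.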

Final Thoughts

There are many topics in this article I feel should be discussed in more detail, such as synchronising event processing across multiple threads and the impact that latency has on processing market data for backtesting. There is also the question of what you do with the market data, covering areas such as metrics, trading strategies and the complex area of order state management. However, I’m hopeful that I have provided an introduction to the concepts and data structures involved, and given some code examples and sample market data to those interested in experimenting further.

Please feel free to post any comments, questions or suggestions you may have.

History

Version 1.0 — 27-Feb-2013 — Initial version

Version 1.1 — 04-Mar-2013 — Minor editing, added links to additional event data files