Genetic Programming and Boosting Technique to Improve Time Series Forecasting

Posted on: 16 March 2015

[1] Federal University of Parana, Brazil

1. Introduction

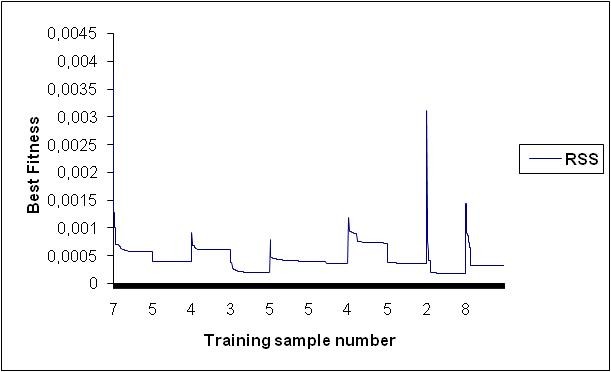

Accurate forecasting is an essential element of many management decisions. Several methods and techniques exist for forecasting time series, including traditional techniques with theoretical foundations in statistics. These methods present some obstacles and complexities; one of the most important is the difficulty of selecting the model that best fits a specific dataset, since many attempts are usually needed before the best model is obtained. In this scenario, different machine learning techniques have recently been applied to the problem, such as Artificial Neural Networks (ANN) and Evolutionary Computation (EC), in particular Genetic Programming (GP), which is considered a promising approach for forecasting noisy, complex series (Kaboudan, 2000). Many other works in the literature use GP for time series prediction. In addition, recent advances in machine learning show that the Boosting algorithm is a powerful approach for increasing the accuracy of forecasting methods. The Boosting algorithm was proposed and developed by Freund and Schapire (1996). According to Allwein et al. (2000), Boosting is a method of finding a highly accurate hypothesis by combining many weak hypotheses, each of which is only moderately accurate. Paris et al. (2004) proposed GPBoost, which uses the Boosting algorithm with GP as the base learner. We have proposed a new formula for updating the weights and for obtaining the final hypothesis of the predictor. This algorithm, called Boosting Correlation Coefficients (BCC), is based on the correlation coefficient instead of the loss function used by traditional Boosting algorithms. To evaluate this approach we conducted three experiments.
In the first one, the BCC was used to forecast real time series, and the mean squared error (MSE) was used to compare the accuracy of the proposed method against the results obtained by GP, GPBoost and the traditional statistical methodology (ARMA). In the second, to demonstrate the efficiency of the proposed methodology, an extensive Monte Carlo simulation was carried out covering the entire ARMA spectrum, in which artificial series were generated from the parametric space of the principal ARMA models: AR(1), AR(2), MA(1), MA(2) and ARMA(1,1). The generated database was composed of 214,000 time series with 150 observations each. The training set was composed of 90% of the data and the remaining 10% composed the test set. The results were compared out of sample, and the BCC showed better performance than the ARMA methodology, Genetic Programming and GPBoost. Finally, the BCC algorithm was also applied to multiple regression problems, and its results were compared with those of an Artificial Neural Network, Model Tree and Boosting. This comparison showed that the BCC provided better results than the other methods. In order to compare the performance of the BCC methodology with other methods, several error measures and statistical tests were used, such as the Mean Squared Error (MSE), the Root Mean Squared Error (RMSE) and the nonparametric Friedman test. The results were compared out of sample, and the BCC methodology produced accurate forecasts.
Future research. Considering that GP is able to provide high-quality solutions, and after the success of our own experiments (Souza et al., 2007a), we are encouraged to further explore GP for modeling and pattern discovery in complex time series. In addition, we will investigate the BCC procedure, using GP as a base learner, to analyze probabilistic and stochastic processes. We will also investigate new tools that can work with GP to solve this problem more effectively.
One of the most important applications of time series analysis is in stock markets. The goal of this task is to choose the best stocks when making an investment and to decide which strategy is best at a given moment. Therefore, we will investigate appropriate means of using GP in this task, as well as in other general problems involving financial time series. Another application that we intend to investigate is in biological networks, for example, gene regulatory networks.
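To make the setup of the Monte Carlo experiment described in this section more concrete, the following minimal sketch generates one artificial AR(1) series with 150 observations, splits it 90%/10% into training and test sets, and computes the out-of-sample MSE. The coefficient 0.6, the Gaussian noise, the burn-in period, the seed and the function names are illustrative assumptions, and the forecast uses the known coefficient only as a stand-in for a fitted model; it is not the BCC method.

```python
import random


def simulate_ar1(phi, n=150, sigma=1.0, burn_in=50, seed=None):
    """Generate n observations of x_t = phi * x_{t-1} + e_t, with e_t ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    x = 0.0
    series = []
    for t in range(n + burn_in):
        x = phi * x + rng.gauss(0.0, sigma)
        if t >= burn_in:  # discard the burn-in so the start value has little influence
            series.append(x)
    return series


def train_test_split(series, train_fraction=0.9):
    """Keep the time order: the first part trains, the last part tests."""
    cut = int(round(len(series) * train_fraction))
    return series[:cut], series[cut:]


def mse(actual, predicted):
    """Mean squared error between two equally long sequences."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


phi = 0.6  # assumed AR(1) coefficient, chosen only for illustration
series = simulate_ar1(phi, seed=42)
train, test = train_test_split(series)

# One-step-ahead forecasts on the test set, using the previous observed value.
previous = [train[-1]] + test[:-1]
forecasts = [phi * value for value in previous]
print(len(train), len(test), mse(test, forecasts))
```

In a full simulation this would be repeated over a grid of coefficients for each ARMA model, with every forecasting method evaluated on the same test segments.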

2. Genetic programming

Genetic Programming (GP) is an Evolutionary Computation technique in which the individuals are computer programs. This theory was developed by John Koza (1992) and is based on the Genetic Algorithm (GA) presented by John Holland (1975). According to Banzhaf (1998) and Kaboudan (2000), GP is known as an effective research paradigm in Artificial Intelligence and Machine Learning, and has been studied in the most diverse areas of knowledge, such as digital circuits, data mining, molecular biology, optimization tasks and others. In nature, those individuals that better adapt to the environment that surrounds them have a greater chance to survive. They pass their genetic characteristics to their descendants, who will undergo modifications to better adapt to the environment. After many generations, the population evolves naturally. In GP, the evolutionary algorithm operates over a population of programs that have different forms and sizes. The initial population must have enough diversity, that is, the individuals must have most of the characteristics that are necessary to solve the problem, because characteristics that do not exist in the initial population will probably not appear during the evolutionary process. The evolutionary process is guided by a fitness function that measures the individual’s ability to solve the problem. Those individuals that better solve the problem will receive a better fitness value and, consequently, will have a better chance to be selected for the next generation. The choice of this function depends on the domain of the problem, and a good choice is essential to provide good results. Once the individuals are selected, it is time to apply the genetic operators.
These are: Reproduction – an individual is replicated to the next generation, with no modification in its structure; Crossover – two programs are recombined to generate two offspring; and Mutation – a new sub-tree replaces a randomly selected part of a program. This process is repeated until a satisfactory solution or a stop criterion is reached. Instead of a population of beings, GP works with a population of computer programs. The goal of the GP algorithm is to select, through recombination of “genes”, the program that best solves a given problem. The main elements of GP are:

Program Structure: a tree is the most commonly used structure to represent programs in GP. Each node can be a function or a terminal. A function has to be evaluated considering its parameters, while a terminal has its own value. The terminal set (T) and the function set (F) must be provided by the user in accordance with the current problem. For example, if the sets are F = {+, −, *, /} and T = {x, 2}, a simple one-variable arithmetic expression can be generated, such as x * x + 2, that is, (x^2 + 2). Figure 1 shows the abstract syntax tree for that expression.

Fitness Function and Selection: in nature, individuals are selected based on how well they fit the environment. In GP, the individuals that are able to better solve the problem have a better chance to be selected.

Parameters: some parameters guide the evolutionary process by limiting and controlling the search. Among them are the genetic operator rates (crossover rate, mutation rate), the population size, the selection rate (tournament size) and the maximum depth of an individual.

Figure 1. Syntax tree for (x*x+2).
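The tree of Figure 1 can be written down directly as a nested data structure. The sketch below is one possible encoding, not the representation used in our experiments: a function node is a tuple `(symbol, left, right)`, a terminal is either the variable name `"x"` or a numeric constant, and the names `FUNCTIONS`, `evaluate` and `to_infix` are hypothetical. The guarded division (returning 1.0 when the divisor is zero) is a common GP convention that we assume here.

```python
import operator

# Function set F = {+, -, *, /}; division is guarded against a zero divisor (assumption).
FUNCTIONS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": lambda a, b: a / b if b != 0 else 1.0,
}


def evaluate(node, x):
    """Evaluate a program tree for a given value of the variable x."""
    if isinstance(node, tuple):  # function node: evaluate the arguments first
        symbol, left, right = node
        return FUNCTIONS[symbol](evaluate(left, x), evaluate(right, x))
    if node == "x":  # terminal: the variable
        return x
    return node  # terminal: a numeric constant


def to_infix(node):
    """Render a program tree as a fully parenthesized expression."""
    if isinstance(node, tuple):
        symbol, left, right = node
        return f"({to_infix(left)} {symbol} {to_infix(right)})"
    return str(node)


# The tree of Figure 1: the root "+" has the children (x * x) and 2.
tree = ("+", ("*", "x", "x"), 2)

print(to_infix(tree))     # ((x * x) + 2)
print(evaluate(tree, 3))  # 11
```

Because programs are plain nested tuples, sub-trees can be copied or swapped without side effects, which is convenient for crossover and mutation.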

In GP the population is composed of individuals that are computer programs (Koza, 1992). The first step of the algorithm is to randomly create the initial population, which is Generation 0. After that, two major tasks are processed in a loop:

The evaluation of each program by using a fitness function: the GP algorithm receives a set of values that represent the desired solution to the problem. For example, in a Symbolic Regression problem, it is necessary to provide the set of values of x and f(x) to the GP algorithm. These values are applied to the programs generated with the defined sets of functions and terminals. At the end, the fitness value is obtained.

The new population is created by selecting individuals that have better fitness value and by applying the genetic operators.

Figure 2. Pseudo code of Genetic Programming.

Each run of this loop represents a new generation of individuals, which form the new population that replaces the previous one. This process is repeated until a solution is found or until a maximum number of generations is reached. At the end, the GP algorithm presents the best tree found for the given problem. The pseudo code of the GP algorithm is shown in Figure 2.
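The loop described above can be sketched in a few dozen lines for a symbolic regression problem. This is a minimal illustration under stated assumptions, not the implementation used in our experiments: the function and terminal sets, the parameter values, tournament selection, elitism, the rule of discarding offspring deeper than `max_depth`, and all function names are hypothetical choices.

```python
import operator
import random

# Assumed function and terminal sets for a one-variable regression problem.
FUNCTIONS = {"+": operator.add, "-": operator.sub, "*": operator.mul}
TERMINALS = ["x", 1.0, 2.0]

def random_tree(rng, max_depth):
    """Grow a random program: a terminal, or a tuple (symbol, left, right)."""
    if max_depth == 0 or rng.random() < 0.3:
        return rng.choice(TERMINALS)
    symbol = rng.choice(sorted(FUNCTIONS))
    return (symbol, random_tree(rng, max_depth - 1), random_tree(rng, max_depth - 1))

def evaluate(node, x):
    if isinstance(node, tuple):
        return FUNCTIONS[node[0]](evaluate(node[1], x), evaluate(node[2], x))
    return x if node == "x" else node

def depth(node):
    return 1 + max(depth(node[1]), depth(node[2])) if isinstance(node, tuple) else 0

def fitness(tree, cases):
    """Mean squared error on the (x, f(x)) pairs; lower is better."""
    return sum((evaluate(tree, x) - y) ** 2 for x, y in cases) / len(cases)

def paths(node, prefix=()):
    """Yield the child-index path to every node of the tree."""
    yield prefix
    if isinstance(node, tuple):
        for i in (1, 2):
            yield from paths(node[i], prefix + (i,))

def subtree_at(node, path):
    for i in path:
        node = node[i]
    return node

def with_subtree(node, path, new):
    """Return a copy of node in which the sub-tree at path is replaced by new."""
    if not path:
        return new
    parts = list(node)
    parts[path[0]] = with_subtree(node[path[0]], path[1:], new)
    return tuple(parts)

def crossover(rng, a, b):
    """Swap one random sub-tree between two parents, giving two offspring."""
    pa, pb = rng.choice(list(paths(a))), rng.choice(list(paths(b)))
    return with_subtree(a, pa, subtree_at(b, pb)), with_subtree(b, pb, subtree_at(a, pa))

def mutate(rng, tree):
    """Replace a randomly selected part of the program with a new sub-tree."""
    return with_subtree(tree, rng.choice(list(paths(tree))), random_tree(rng, 2))

def tournament(rng, population, scores, size):
    contenders = rng.sample(range(len(population)), size)
    return population[min(contenders, key=lambda i: scores[i])]

def run_gp(cases, pop_size=100, generations=20, max_depth=5,
           crossover_rate=0.8, mutation_rate=0.1, tournament_size=4, seed=0):
    rng = random.Random(seed)
    population = [random_tree(rng, max_depth) for _ in range(pop_size)]  # Generation 0
    best, best_score = None, float("inf")
    for generation in range(generations + 1):
        scores = [fitness(tree, cases) for tree in population]  # step 1: evaluation
        i = min(range(pop_size), key=lambda k: scores[k])
        if scores[i] < best_score:
            best, best_score = population[i], scores[i]
        if best_score == 0 or generation == generations:
            break  # solution found or generation limit reached
        offspring = [best]  # keep the best program so far (elitism, an assumption)
        while len(offspring) < pop_size:  # step 2: selection and genetic operators
            parent = tournament(rng, population, scores, tournament_size)
            draw = rng.random()
            if draw < crossover_rate:
                mate = tournament(rng, population, scores, tournament_size)
                children = crossover(rng, parent, mate)
            elif draw < crossover_rate + mutation_rate:
                children = (mutate(rng, parent),)
            else:
                children = (parent,)  # reproduction: copied unchanged
            offspring.extend(c for c in children if depth(c) <= max_depth)
        population = offspring[:pop_size]
    return best

# Target values sampled from f(x) = x * x + 2.
cases = [(x / 2, (x / 2) ** 2 + 2) for x in range(-5, 6)]
best = run_gp(cases)
print(best, fitness(best, cases))
```

The returned program is the best one seen in any generation, so its error can never be worse than that of the best individual in Generation 0.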

3. Boosting algorithms

The Boosting algorithm was proposed and developed by Freund and Schapire (1996) for binary problems. According to Schapire and Singer (1997), Boosting is a method to find a highly accurate hypothesis by combining many weaker hypotheses, each of which is only moderately accurate. It manipulates the training examples to generate multiple hypotheses. In each iteration the learning algorithm uses different weights on the training examples and returns a hypothesis h_t. The weighted error of h_t is computed and used to update the weights on the training examples. The effect of the change in weights is to place higher weights on training examples that were misclassified by h_t and lower weights on examples that were correctly classified. The final classifier is composed of the weighted vote of the individual classifiers. Freund and Schapire (1996) introduced the AdaBoost algorithm, commonly referred to as the Discrete Boosting algorithm (Ridgeway, 1999), developed for binary classification problems. Freund and Schapire (1997) extended AdaBoost to the multi-class case, in versions they called AdaBoost.M1 and AdaBoost.M2. In order to solve regression problems, Schapire (2002) extended AdaBoost.M2 and called it AdaBoost.R. It solves regression problems by reducing them to classification ones. Drucker (1997) developed the AdaBoost.R2 algorithm, which is an ad hoc modification of AdaBoost.R. He carried out some experiments with AdaBoost.R2 on regression problems and obtained some promising results (Solomatine and Shrestha, 2004).
All these approaches follow the view of boosting as a gradient descent procedure (Breiman, 1997) or as residual fitting (Buhlmann and Yu, 2003; Mason et al., 1999). Instead of being trained on a different sample of the same training set, as in previous boosting algorithms, a regressor is trained on a new training set that has different target values (e.g. the residual error of the sum of the previous regressors) (Assad and Bone, 2003). Bone et al. (2003) adapted a Boosting algorithm to time series forecasting using neural networks as base learners. Their experiments showed that Boosting actually provides improved results in regression problems. Iba (1999) proposed a version of AdaBoost for GP and regression. In his work the distribution was implemented by picking examples to generate a new learning set for each Boosting round. The probability of an example being picked is proportional to its weight, and any example can be picked zero, one or several times. According to Paris (2002), this approach makes the implementation easier, but the precision of the weights is somewhat lost in the process.
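The resampling idea attributed to Iba (1999) above can be illustrated with a weighted draw with replacement. This is only a sketch of the general mechanism, assuming the new learning set has the same size as the original one; the function name `resample_by_weight` and the toy weights are hypothetical.

```python
import random
from collections import Counter


def resample_by_weight(examples, weights, rng):
    """Draw len(examples) examples with replacement, with probability proportional to weight."""
    return rng.choices(examples, weights=weights, k=len(examples))


rng = random.Random(1)
examples = list(range(10))           # indices of ten training examples
weights = [1.0] * 9 + [9.0]          # the last example was poorly predicted so far

learning_set = resample_by_weight(examples, weights, rng)
counts = Counter(learning_set)

# Each example appears zero, one or several times in the new learning set.
for index in examples:
    print(index, counts[index])
```

The loss of precision mentioned by Paris (2002) is visible here: the real-valued weights only influence the learner through integer counts, so two examples with slightly different weights may end up with the same multiplicity.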

3.1. GPBoost

Paris et al. (2004) proposed a Boosting method that retains the precision of the weights and operates on the whole set of examples in every round of Boosting. Their algorithm, named GPBoost, uses a weight-based fitness function in GP and the generic AdaBoost.R2 algorithm to update the weights; its pseudo code is shown in Figure 3. The GP technique allows us to obtain accurate models from different datasets with diverse characteristics and, from the obtained model, to estimate or predict the behavior of the system over time. Moreover, with Boosting, the results obtained with the merged hypothesis are always better than the results obtained with the GP technique alone.
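As an illustration of the two ingredients named above, the following sketch shows a weight-based fitness, one AdaBoost.R2 weight update with the linear loss of Drucker (1997), and the weighted median that AdaBoost.R2 uses to merge the hypotheses. It is a simplified reading of the generic algorithm, not a reproduction of the GPBoost pseudo code: the exact form of the weighted fitness, the function names and the toy data are assumptions.

```python
import math


def weighted_fitness(predictions, targets, weights):
    """Weight-based fitness (assumed form): absolute errors weighted by example weight."""
    return sum(w * abs(p - y) for p, y, w in zip(predictions, targets, weights))


def adaboost_r2_update(predictions, targets, weights):
    """One AdaBoost.R2 round with linear loss. Returns (normalized new weights, beta)."""
    errors = [abs(p - y) for p, y in zip(predictions, targets)]
    max_error = max(errors)
    if max_error == 0:
        raise ValueError("hypothesis fits every example exactly; nothing to reweight")
    losses = [e / max_error for e in errors]          # each loss lies in [0, 1]
    total = sum(weights)
    average_loss = sum(l * w / total for l, w in zip(losses, weights))
    if average_loss >= 0.5:
        raise ValueError("average loss >= 0.5; AdaBoost.R2 stops at this round")
    beta = average_loss / (1 - average_loss)          # small beta means a confident hypothesis
    updated = [w * beta ** (1 - l) for w, l in zip(weights, losses)]
    norm = sum(updated)
    return [w / norm for w in updated], beta


def weighted_median(values, betas):
    """Merge the hypotheses' outputs for one input, weighting each by log(1 / beta)."""
    ranked = sorted(zip(values, (math.log(1 / b) for b in betas)))
    half = 0.5 * sum(w for _, w in ranked)
    running = 0.0
    for value, w in ranked:
        running += w
        if running >= half:
            return value


targets = [1.0, 2.0, 3.0, 4.0]
predictions = [1.0, 2.0, 3.0, 6.0]   # only the last example is badly predicted
weights = [0.25] * 4

new_weights, beta = adaboost_r2_update(predictions, targets, weights)
print(new_weights, beta)
```

In this toy round the badly predicted example ends up with half of the total weight, so the next GP run, whose fitness uses these weights, is pushed to concentrate on it.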