Credit Scoring Scorecard Statistics Risk Management

Posted on: 25 May 2015

Overview

Credit scoring is perhaps one of the most classic applications of predictive modeling: the goal is to predict whether credit extended to an applicant is likely to result in a profit or a loss for the lending institution. There are many variations and complexities in how exactly credit is extended to individuals, businesses, and other organizations, for various purposes (purchasing equipment, real estate, consumer items, and so on) and through various methods of credit (credit card, loan, delayed payment plan). But in all cases, a lender provides money to an individual or institution and expects to be paid back in time, with interest commensurate with the risk of default.

Credit scoring is the set of decision models and their underlying techniques that aid lenders in the granting of consumer credit. These techniques determine who will get credit, how much credit they should get, and what operational strategies will enhance the profitability of the borrowers to the lenders. Further, they help to assess the risk in lending. Credit scoring is a dependable assessment of a person’s credit worthiness since it is based on actual data.

A lender commonly makes two types of decisions: first, whether to grant credit to a new applicant, and second, how to deal with existing customers, including whether to increase their credit limits. In both cases, whatever the techniques used, it is critical that a large sample of previous customers is available, with their application details, behavioral patterns, and subsequent credit history. Most of the techniques use this sample to identify the connection between the characteristics of the consumers (annual income, age, number of years with their current employer, etc.) and their subsequent history.

Typical application areas in the consumer market include: credit cards, auto loans, home mortgages, home equity loans, mail catalog orders, and a wide variety of personal loan products.

Classic Credit Scoring, Credit Score Cards

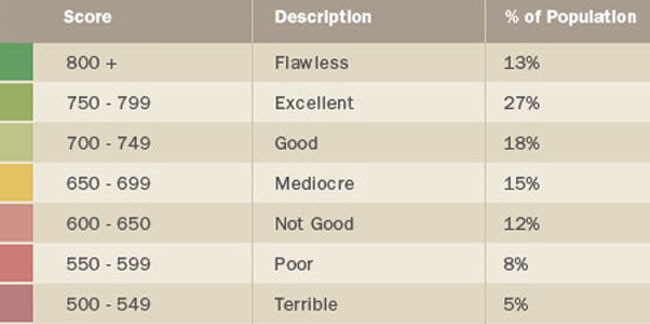

The classic and still widely used (and useful) approach for evaluating credit worthiness and risk is based on the building of scorecards; a typical scorecard may look like this:

Note that this is an actual screenshot taken from STATISTICA Scorecard. For each predictor Variable (e.g. Duration of Credit), specific data ranges or categories are provided, and for each specific category (e.g. Duration of Credit between 9 and 15 years), a Score is provided in the last column. For each applicant for credit, the scores can be added over all the predictor variables and categories, and based on the resulting total credit score, a decision can be made whether or not to extend credit.
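The additive logic of a scorecard can be sketched in a few lines of Python. The variables, bin boundaries, point values, and the cutoff of 45 below are made up for illustration; they are not taken from the screenshot.

```python
import math

# Hypothetical scorecard: for each variable, a list of (lower, upper, points)
# bins; a value falls into a bin if lower <= value < upper.
SCORECARD = {
    "duration_of_credit": [(0, 9, 40), (9, 15, 30), (15, math.inf, 10)],
    "age": [(18, 30, 5), (30, 50, 20), (50, math.inf, 25)],
}

def points_for(variable, value):
    """Look up the points for one predictor value."""
    for lower, upper, points in SCORECARD[variable]:
        if lower <= value < upper:
            return points
    raise ValueError(f"{variable}={value} is outside every bin")

def total_score(applicant):
    """Sum the points over all predictors in the scorecard."""
    return sum(points_for(var, applicant[var]) for var in SCORECARD)

applicant = {"duration_of_credit": 12, "age": 34}
score = total_score(applicant)  # 30 + 20 = 50
# Assumed cutoff of 45 points, purely for illustration.
decision = "extend credit" if score >= 45 else "deny credit"
```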

Some Characteristics of Classic Credit Scorecards; Models

There are several aspects of the particular modeling workflow for producing a scorecard, and for using it effectively.

Coarse Coding (Discretizing) Predictors

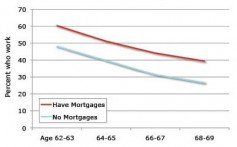

First, in order to make a scorecard effective, it needs to be easy to use. Often, the decision to extend or deny credit must be made very quickly so as not to jeopardize a deal (e.g. selling a car). If a decision to extend credit takes too long, the applicant might look elsewhere for financing. Therefore, in the absence of automated scoring solutions (accessible, for example, via a web page), a scorecard needs to make it easy for its user to determine the individual components contributing to the overall score and credit decision. To achieve that, it is useful to divide the values of each continuous or categorical predictor variable into a relatively small number of categories so that an applicant can be quickly scored. For example, a variable Age of Applicant could be quickly coded into 4 categories (20-30, 30-40, 50-60, 60+), and the appropriate scores associated with each category typed into a spreadsheet to compute the final score.

There are a number of methods and considerations that enter into the decision of how to recode variable values into a smaller number of classes. In short, it is desirable (again, from the perspective of making the scorecard simple to use) that the credit score and credit risk across the coded classes of a predictor form a monotone increasing or decreasing function. So, for example, the more debt an applicant currently carries, the greater the risk of default when additional credit is extended.

Typically, during the score card building process the coarse-coding of predictors is a manual procedure where predictors are considered one-by-one based on a training data set of previous applicants with known quality characteristics (e.g. whether or not the credit was paid back). The result of this process is a set of variables that enter into subsequent predictive modeling, as recoded (coarse-coded) predictors.
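As a rough illustration of this step, the sketch below coarse-codes one continuous predictor with hand-picked cut points and checks whether the observed bad rate is monotone across the resulting classes. The function names, the cut points, and the data are hypothetical.

```python
from bisect import bisect_right
from collections import defaultdict

def coarse_code(value, cut_points):
    """Return the index of the bin that value falls into.

    cut_points are sorted boundaries, e.g. [5, 10, 20] gives the bins
    (-inf, 5), [5, 10), [10, 20), [20, inf).
    """
    return bisect_right(cut_points, value)

def bad_rate_by_bin(values, is_bad, cut_points):
    """Observed share of bad outcomes in each non-empty bin."""
    counts = defaultdict(lambda: [0, 0])  # bin -> [n_bad, n_total]
    for v, bad in zip(values, is_bad):
        b = coarse_code(v, cut_points)
        counts[b][0] += bad
        counts[b][1] += 1
    return [counts[b][0] / counts[b][1] for b in sorted(counts)]

def is_monotone(rates):
    """True if the rates never decrease or never increase across bins."""
    pairs = list(zip(rates, rates[1:]))
    return all(a <= b for a, b in pairs) or all(a >= b for a, b in pairs)

# Made-up training data: existing debt (in thousands) and observed outcome
# (1 = credit was not paid back).
debt = [1, 2, 3, 6, 7, 8, 12, 14, 15, 22, 25, 30]
bad = [0, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 0]
rates = bad_rate_by_bin(debt, bad, cut_points=[5, 10, 20])
monotone = is_monotone(rates)
```

In practice an analyst would adjust the cut points for each predictor until the classes are both reasonably populated and monotone in risk.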

Model Building

When the training data set on which the modeling is based contains a binary indicator variable of Paid back vs. Default, or Good Credit vs. Bad Credit, then Logistic Regression models are well suited for subsequent predictive modeling. Logistic regression yields prediction probabilities for whether or not a particular outcome (e.g. Bad Credit) will occur. Furthermore, logistic regression models are linear models, in that the logit-transformed prediction probability is a linear function of the predictor variable values. Thus, a final scorecard model derived in this manner has the desirable quality that the final credit score (credit risk) is a linear function of the predictors and, with some additional transformations applied to the model parameters, a simple linear function of scores that can be associated with each predictor class value after coarse coding. The final credit score is then a simple sum of individual score values that can be taken from the scorecard (as shown earlier).
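One way to see how logistic regression coefficients turn into scorecard points is the sketch below. The coefficients are invented, and the scaling (600 points at odds of 30:1, 20 points to double the odds) is a common convention assumed here for illustration, not something prescribed by the text above.

```python
import math

# Hypothetical fitted model on coarse-coded predictors: the log-odds of
# Good Credit equal the intercept plus one coefficient per applicable class.
INTERCEPT = 1.2
COEFFICIENTS = {
    "age": {"20-30": -0.4, "30-40": 0.0, "40+": 0.5},
    "debt": {"low": 0.6, "medium": 0.0, "high": -0.9},
}

# Assumed scaling: 600 points at odds of 30:1; 20 points double the odds.
BASE_SCORE, BASE_ODDS, PDO = 600, 30, 20
FACTOR = PDO / math.log(2)
OFFSET = BASE_SCORE - FACTOR * math.log(BASE_ODDS)

def log_odds(applicant):
    """Linear predictor of the logistic regression model."""
    return INTERCEPT + sum(COEFFICIENTS[v][applicant[v]] for v in COEFFICIENTS)

def probability_good(applicant):
    """Inverse logit of the linear predictor."""
    return 1 / (1 + math.exp(-log_odds(applicant)))

def build_scorecard():
    """Scale each coefficient; spread intercept and offset over predictors."""
    n = len(COEFFICIENTS)
    return {
        v: {c: FACTOR * (coef + INTERCEPT / n) + OFFSET / n
            for c, coef in classes.items()}
        for v, classes in COEFFICIENTS.items()
    }

def score(applicant, scorecard):
    """Sum of the points of the classes the applicant falls into."""
    return sum(scorecard[v][applicant[v]] for v in scorecard)

scorecard = build_scorecard()
applicant = {"age": "40+", "debt": "low"}
points = score(applicant, scorecard)
```

Because the points are a linear rescaling of the log-odds, the summed score ranks applicants exactly as the model's predicted probability does.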

A note on Reject Inference

The term Reject Inference describes the issue of how to deal with the inherent bias when modeling is based on a training dataset consisting only of those previous applicants for whom the actual performance (Good Credit vs. Bad Credit) has been observed. There is likely to be a significant number of other previous applicants who were rejected and for whom final credit performance was never observed. The question is how to include those previous applicants in the modeling, in order to make the predictive model more accurate and robust (and less biased), and applicable also to those individuals.

This is of particular importance when the criteria for the decision whether or not to extend credit need to be loosened, in order to attract and extend credit to more applicants. This can happen, for example, during a severe economic downturn that affects many people and leaves their overall financial situation in a condition that would not qualify them as an acceptable credit risk under older criteria. In short, if nobody were to qualify for credit any more, the institutions extending credit would be out of business. So it is often critically important to make predictions about observations with predictor values that fall outside the range previously considered acceptable, and for which actual outcomes are therefore not available in the training data.

There are a number of approaches that have been suggested on how to include previously rejected applicants for credit in the model building step, in order to make the model more broadly applicable (to those applicants as well). In short, these methods come down to systematically extrapolating from the actual observed data, often by deliberately introducing biases and assumptions about the expected loan outcome, had the (in actuality not observed) applicant been accepted for credit.
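One such approach, often called fuzzy augmentation, is sketched below as an illustration only; it is not necessarily the method any particular product uses. Each rejected applicant enters the training set twice, once as a partial Bad and once as a partial Good, weighted by a model fitted on accepted cases. The `pessimism` factor is a hypothetical knob that encodes the deliberate assumption that rejects perform worse than the model suggests.

```python
def fuzzy_augment(accepted, rejected, predict_bad, pessimism=1.0):
    """Build a weighted training set that includes rejected applicants.

    accepted: list of (features, is_bad) with observed outcomes.
    rejected: list of features with no observed outcome.
    predict_bad: model fitted on accepted cases; returns P(bad) strictly
        between 0 and 1 for a feature record.
    pessimism: assumed factor (>= 1) that inflates the odds of Bad for rejects.
    Returns a list of (features, is_bad, weight) rows.
    """
    rows = [(x, y, 1.0) for x, y in accepted]
    for x in rejected:
        p = predict_bad(x)
        odds = pessimism * p / (1 - p)
        p_adj = odds / (1 + odds)
        rows.append((x, 1, p_adj))      # the reject counted as a partial Bad
        rows.append((x, 0, 1 - p_adj))  # ... and as a partial Good
    return rows

# Toy usage with a stand-in model that always predicts P(bad) = 0.2.
accepted = [({"debt": 3}, 0), ({"debt": 9}, 1)]
rejected = [{"debt": 15}]
rows = fuzzy_augment(accepted, rejected, lambda x: 0.2, pessimism=2.0)
```

The model would then be refitted on the weighted rows; how strongly to inflate the odds is a judgment call that cannot be validated from the observed data alone.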

Model Evaluation

Once a (logistic regression) model has been built based on a training data set, next the validity of the model needs to be assessed in an independent holdout or testing sample, for exactly the same reasons and using the same methods as is typically done in most predictive modeling. All of these methods, graphs, and statistics that are typically computed for this purpose evaluate the improved odds for differentiating the Good Credit applicants from the Bad Credit applicants in the holdout sample, compared to simply guessing or some other methods for making the decision to extend or deny credit.

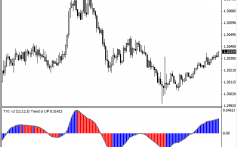

Useful graphs include the lift chart. Kolmogorov Smirnov chart, and other ways to assess the predictive power of the model. For example, the following graphs shows the Kolmogorov Smirnov (KS) graph for a credit scorecard model.

In this graph, the X axis shows the credit score values (sums), and the Y axis denotes the cumulative proportions of observations in each outcome class (Good Credit vs. Bad Credit) in the hold-out sample. The further apart the two lines are, the greater the degree of differentiation between the Good Credit and Bad Credit cases in the hold-out sample, and thus the better (more accurate) the model.
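The quantity read off such a graph, the largest vertical gap between the two cumulative curves, can be computed directly. The sketch below uses made-up hold-out scores; the function name is hypothetical.

```python
def ks_statistic(scores_good, scores_bad):
    """Largest gap between the cumulative score distributions.

    Returns (gap, score at which the gap occurs).
    """
    thresholds = sorted(set(scores_good) | set(scores_bad))
    best_gap, best_score = 0.0, None
    for t in thresholds:
        # Cumulative proportion of each class scoring at or below t.
        cum_good = sum(s <= t for s in scores_good) / len(scores_good)
        cum_bad = sum(s <= t for s in scores_bad) / len(scores_bad)
        gap = abs(cum_bad - cum_good)
        if gap > best_gap:
            best_gap, best_score = gap, t
    return best_gap, best_score

# Made-up hold-out scores for the two outcome classes.
good = [520, 580, 610, 640, 660, 700, 720, 750]
bad = [450, 480, 510, 530, 560, 620]
ks, at_score = ks_statistic(good, bad)  # gap of 17/24 at a score of 560
```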

Determining Score Cutoffs

Once a good (logistic regression) model has been finalized and evaluated, the decision has to be made where to put the cutoff values for extending or denying credit (or where more information should be requested from the applicant to support the application). The most straightforward way to do this is to take as a cutoff the point at which the greatest separation between Good Credit and Bad Credit cases is observed in the hold-out sample, and thus can be expected. However, many other considerations typically enter into this decision.

First, default on a large amount of credit is worse than on a small amount of credit. Generally, the loss or profit associated with the 4 possible outcomes (correctly predicting Good Credit, correctly predicting Bad Credit, incorrectly predicting Good Credit, incorrectly predicting Bad Credit) needs to be taken into consideration, and the cutoff should be selected to maximize the profit based on the model predictions of risk. There are a number of methods and specific graphs that are typically prepared and consulted to decide on final score cutoffs, all of which deal with assessing the expected gains and losses at different cutoff values.
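A minimal sketch of a profit-based cutoff search follows. It assumes a fixed profit per good loan and a fixed loss per bad loan, which is a simplification (in practice both depend on the amount of credit), and all figures are invented.

```python
def expected_profit(cutoff, holdout, profit_good, loss_bad):
    """Total profit if credit is extended to everyone scoring >= cutoff.

    holdout: list of (score, is_bad) pairs with known outcomes.
    Denied applicants contribute nothing (forgone profit is not modeled).
    """
    total = 0.0
    for score, is_bad in holdout:
        if score >= cutoff:
            total += -loss_bad if is_bad else profit_good
    return total

def best_cutoff(holdout, profit_good, loss_bad):
    """Try every observed score as a cutoff and keep the most profitable."""
    candidates = sorted({score for score, _ in holdout})
    return max(
        candidates,
        key=lambda c: expected_profit(c, holdout, profit_good, loss_bad),
    )

# Made-up hold-out sample: (score, 1 if the loan went bad else 0).
holdout = [
    (450, 1), (480, 1), (510, 1), (520, 0), (530, 1), (560, 1), (580, 0),
    (610, 0), (620, 1), (640, 0), (660, 0), (700, 0), (720, 0), (750, 0),
]
strict = best_cutoff(holdout, profit_good=100, loss_bad=500)   # 640
lenient = best_cutoff(holdout, profit_good=100, loss_bad=150)  # 580
```

When a default is cheap relative to the profit on a good loan, the profit-maximizing cutoff moves down and more applicants are accepted.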

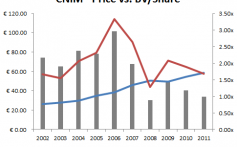

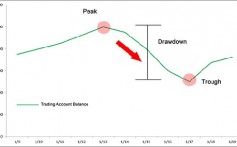

Monitoring the Score Card, Population Stability, Score Card Performance, and Vintage Analysis (Delinquency Reports)

Finally, once a score card has been finalized and is being used to extend credit, it obviously needs to be monitored carefully to verify the expected performance. Fundamentally, three things can change:

First, the population of applicants may change with respect to the important (used in the score card) predictors. For example, the applicant pool may become younger, or may show fewer assets than the applicant pool described in the training data from which the score card was built. This will obviously change the proportion of applicants for credit who will be acceptable (given the current scorecard), and this may well change where the best score cutoff should be set. So-called population stability reports are used to capture and track changes in the population of applications (the composition of the applicant pool with respect to the predictors).
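A widely used summary for such reports is the population stability index (PSI), sketched below. The text above does not name a specific statistic, so treating PSI as the measure is an assumption, and the counts are made up.

```python
import math

def population_stability_index(expected_counts, actual_counts):
    """PSI between the training-time and the current class distribution.

    Both arguments list counts per predictor class (or score band) in the
    same order. A small floor avoids log(0) for empty classes.
    """
    total_e, total_a = sum(expected_counts), sum(actual_counts)
    psi = 0.0
    for e, a in zip(expected_counts, actual_counts):
        pe = max(e / total_e, 1e-6)
        pa = max(a / total_a, 1e-6)
        psi += (pa - pe) * math.log(pa / pe)
    return psi

# Applicants per age class in the training data vs. the last quarter.
training = [250, 250, 250, 250]
current = [400, 300, 200, 100]   # the applicant pool has become younger
psi = population_stability_index(training, current)
```

The index is zero when the two distributions match and grows as they drift apart; the level at which to act is a policy choice.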

Second, the predictions from the scorecard may become increasingly inaccurate. Thus, the accuracy of the predictions from the model must be tracked, to determine when a model should be updated or discarded (and when a new model should be built).

Third, the actual observed rate of default (Bad Credit) may change over time (e.g. due to economic conditions). Such changes will necessitate adjustments to the cutoff values, and perhaps to the scorecard model itself. The methods and reports that are typically used to track the rate of delinquent loans, and to compare it with the expected delinquency, are called Vintage Analysis or Delinquency Reports/Analysis.
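The core of a vintage report is a table of cumulative default rates by origination cohort and months on book. The sketch below builds one from made-up loan records; for simplicity it assumes every loan has been observed for the full window, which real reports must not assume for recent vintages.

```python
from collections import defaultdict

def vintage_table(loans, max_months):
    """Cumulative default rate by vintage and months on book.

    loans: list of (vintage, months_to_default), where months_to_default is
    None for loans that have not defaulted.
    Returns {vintage: [rate after month 1, ..., rate after max_months]}.
    """
    by_vintage = defaultdict(list)
    for vintage, months_to_default in loans:
        by_vintage[vintage].append(months_to_default)
    table = {}
    for vintage, outcomes in sorted(by_vintage.items()):
        n = len(outcomes)
        table[vintage] = [
            sum(m is not None and m <= month for m in outcomes) / n
            for month in range(1, max_months + 1)
        ]
    return table

# Made-up loan records: (origination quarter, months until default or None).
loans = [
    ("2014-Q1", None), ("2014-Q1", 3), ("2014-Q1", None), ("2014-Q1", 6),
    ("2014-Q2", 2), ("2014-Q2", None), ("2014-Q2", 2), ("2014-Q2", None),
]
table = vintage_table(loans, max_months=6)
```

Comparing the rows shows whether newer vintages go delinquent faster than older ones at the same age.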

Other Methods for Building Scorecards

The traditional method for building scorecards briefly outlined above is still widely in use, because it has a number of advantages with respect to the interpretability of models (and thus ease with which decisions regarding whether or not to extend credit can be explained to applicants or regulators); also, they often provide sufficient predictive accuracy making it unnecessary and too costly to develop more complex alternative scorecards (i.e. there is insufficient ROI to use more complex methods).

However, in recent years, general predictive modeling methods have become increasingly common, replacing the traditional logistic-regression based linear sum-of-scores scorecards.

Cox Proportional Hazard Models

First, a modification of the traditional approach that has become popular replaces the logistic regression model in the modeling step with the Cox Proportional Hazard Model. To summarize, the Cox model (for short) predicts the probability of failure, default, or termination of an outcome within a specific time interval. Details regarding the Cox model (and the proportionality-of-hazard assumption, and how to test it) can be found in Survival Analysis. Effectively, however, this method can be considered an alternative to, and a refinement of, logistic regression, in particular when lifetimes for credit performance (until default, early pay-off, etc.) are available in the training data. The Cox model is still a linear model, though: the logarithm of the relative hazard rate is a linear combination of the predictor values. Hence, the predictor pre-processing described above is still useful and applicable (e.g. coarse coding of predictors), as are the subsequent steps for model evaluation, cut-off selection, and so on.
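For reference, the standard form of the Cox model can be written as follows, where the notation is introduced here: h(t | x) is the hazard of default at time t for an applicant with predictor values x_1, ..., x_k, h_0(t) is an unspecified baseline hazard, and the beta_j are the coefficients to be estimated.

```latex
h(t \mid x) = h_0(t)\,\exp(\beta_1 x_1 + \dots + \beta_k x_k)
\quad\Longleftrightarrow\quad
\log\frac{h(t \mid x)}{h_0(t)} = \beta_1 x_1 + \dots + \beta_k x_k
```

The right-hand side is the same kind of linear sum that logistic regression produces on the logit scale, which is why coarse-coded predictors and additive scores carry over.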

Predictive Modeling Algorithms (e.g. Stochastic Gradient Boosting)

If the accuracy of the prediction of risk is the most important consideration of a scorecard building project (and is associated with most of the expected ROI resulting from the project), then predictive modeling methods and general approximators such as Stochastic Gradient Boosting often provide better performance than linear models. The development of advanced data mining predictive modeling algorithms has largely been driven by the desire to detect complex high-order interactions, nonlinearities, discontinuities, and so on among the predictors and in their relationships to the outcome of interest, in order to boost predictive accuracy.

Note that automated (computer-based) scoring engines can deliver near-instant feedback to credit applicants, thus negating the advantage of traditional scorecard building methods (as described above). Also, it is still possible to automatically perform analyses subsequent to the credit decision to determine what predictor variable(s) and value(s) influenced most the prediction of risk, and subsequent denial of credit (although those methods are less straightforward), and to provide that feedback to applicants (which is typically required by the laws governing the credit business).

The actual process of building scorecard models using data mining algorithms such as stochastic gradient boosting usually turns out to be simpler than with traditional techniques. Since most of these algorithms are general approximators capable of representing any relationship between predictors and outcomes, and are also relatively robust to outliers, it is not necessary to perform many of the predictor preparation steps such as coarse coding. All steps subsequent to model building still apply, except that instead of evaluating models and identifying cutoff values based on (sum) scores, the graphs and tables that are typically made to support those analyses can be created based on prediction probabilities from the respective data mining predictive model (or ensemble of models).
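To make the idea concrete, here is a deliberately small, pure-Python sketch of stochastic gradient boosting with one-split trees (stumps) for a binary outcome. It is an illustration under simplifying assumptions (one stump per round, plain gradient steps, invented data), not a production algorithm. The toy data has a U-shaped risk that a linear model on the raw predictor could not capture without coarse coding.

```python
import math
import random

def sigmoid(z):
    return 1 / (1 + math.exp(-z))

def fit_stump(rows, residuals):
    """Least-squares stump: one feature, one threshold, two leaf values.

    Assumes at least one feature takes two or more distinct values.
    """
    best = None
    for j in range(len(rows[0])):
        for threshold in sorted({r[j] for r in rows})[1:]:
            left = [e for r, e in zip(rows, residuals) if r[j] < threshold]
            right = [e for r, e in zip(rows, residuals) if r[j] >= threshold]
            lv, rv = sum(left) / len(left), sum(right) / len(right)
            sse = (sum((e - lv) ** 2 for e in left)
                   + sum((e - rv) ** 2 for e in right))
            if best is None or sse < best[0]:
                best = (sse, j, threshold, lv, rv)
    return best[1:]

def stump_value(stump, row):
    j, threshold, lv, rv = stump
    return lv if row[j] < threshold else rv

def boost(rows, labels, rounds=100, rate=0.5, subsample=0.7, seed=0):
    """Return a function mapping a feature row to P(label = 1)."""
    rng = random.Random(seed)
    base = sum(labels) / len(labels)
    f0 = math.log(base / (1 - base))       # start from the overall log-odds
    scores = [f0] * len(rows)
    stumps = []
    k = max(2, int(subsample * len(rows)))
    for _ in range(rounds):
        idx = rng.sample(range(len(rows)), k)  # the "stochastic" part
        # Negative gradient of the log-loss: observed minus predicted.
        residuals = [labels[i] - sigmoid(scores[i]) for i in idx]
        stump = fit_stump([rows[i] for i in idx], residuals)
        stumps.append(stump)
        scores = [s + rate * stump_value(stump, r)
                  for s, r in zip(scores, rows)]
    return lambda row: sigmoid(
        f0 + rate * sum(stump_value(s, row) for s in stumps))

# Invented data: risk is high at both extremes of the predictor.
rows = [[x] for x in range(20)]
labels = [1 if x < 5 or x >= 15 else 0 for x in range(20)]
predict_bad = boost(rows, labels)
```

The resulting probabilities can be fed into the same evaluation and cutoff analyses as the summed scores of a traditional scorecard.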

Likewise, most of the typical steps after implementation (into production) of the scorecard also still apply and are necessary to evaluate the performance of the scoring system (as well as population stability, delinquency rates, and accuracy).

Business Objectives

The application of scoring models in today’s business environment covers a wide range of objectives. The original task of estimating the risk of default has been augmented by credit scoring models to include other aspects of credit risk management: at the pre-application stage (identification of potential applicants), at the application stage (identification of acceptable applicants), and at the performance stage (identification of possible behavior of current customers). Scoring models with different objectives have been developed. They can be generalized into four categories as listed below.

1. Marketing Aspect

Purposes

Response scoring: The scoring models that estimate how likely a consumer would respond to a direct mailing of a new product.

Retention/attrition scoring: The scoring models that predict how likely a consumer would keep using the product or change to another lender after the introductory offer period is over.

2. Application Aspect

Applicant scoring: The scoring models that estimate how likely a new applicant for credit is to default.

3. Performance Aspect

4. Bad Debt Management

Select optimal collections policies in order to minimize the cost of administering collections or to maximize the amount recovered from a delinquent’s account.

Scoring models for collection decisions: Scoring models that determine when actions should be taken on the accounts of delinquents and which of several alternative collection techniques might be more appropriate and successful.

Thus, the overall objective of credit scoring is not only to determine whether the applicant is credit worthy, but also to attract quality credit applicants who can subsequently be retained and controlled while maintaining an overall profitable portfolio.