The Big Idea: Before You Make That Big Decision…

Posted on: 3 April 2015

Reprint: R1106B

When an executive makes a big bet, he or she typically relies on the judgment of a team that has put together a proposal for a strategic course of action. After all, the team will have delved into the pros and cons much more deeply than the executive has time to do. The problem is, biases invariably creep into any team’s reasoning and often dangerously distort its thinking. A team that has fallen in love with its recommendation, for instance, may subconsciously dismiss evidence that contradicts its theories, give far too much weight to one piece of data, or make faulty comparisons to another business case.

That’s why, with important decisions, executives need to conduct a careful review not only of the content of recommendations but of the recommendation process. To that end, the authors (Kahneman, who won a Nobel Prize in economics for his work on cognitive biases; Lovallo of the University of Sydney; and Sibony of McKinsey) have put together a 12-question checklist intended to unearth and neutralize defects in teams’ thinking. These questions help leaders examine whether a team has explored alternatives appropriately, gathered all the right information, and used well-grounded numbers to support its case. They also highlight considerations such as whether the team might be unduly influenced by self-interest, overconfidence, or attachment to past decisions.

By using this practical tool, executives will build decision processes over time that reduce the effects of biases and upgrade the quality of decisions their organizations make. The payoffs can be significant: A recent McKinsey study of more than 1,000 business investments, for instance, showed that when companies worked to reduce the effects of bias, they raised their returns on investment by seven percentage points.

Executives need to realize that the judgment of even highly experienced, superbly competent managers can be fallible. A disciplined decision-making process, not individual genius, is the key to good strategy.

The Idea in Brief

When executives make big strategic bets, they typically depend on the judgment of their teams to a significant extent.

The people recommending a course of action will have delved more deeply into the proposal than the executive has time to do.

Inevitably, lapses in judgment creep into the recommending team’s decision-making process (because its members fell in love with a deal, say, or are making a faulty comparison to an earlier business case).

This article poses 12 questions that will help executives vet the quality of decisions and think through not just the content of the proposals they review but the biases that may have distorted the reasoning of the people who created them.

Thanks to a slew of popular new books, many executives today realize how biases can distort reasoning in business. Confirmation bias, for instance, leads people to ignore evidence that contradicts their preconceived notions. Anchoring causes them to weigh one piece of information too heavily in making decisions; loss aversion makes them too cautious. In our experience, however, awareness of the effects of biases has done little to improve the quality of business decisions at either the individual or the organizational level.

Though there may now be far more talk of biases among managers, talk alone will not eliminate them. But it is possible to take steps to counteract them. A recent McKinsey study of more than 1,000 major business investments showed that when organizations worked at reducing the effect of bias in their decision-making processes, they achieved returns up to seven percentage points higher. (For more on this study, see “The Case for Behavioral Strategy,” McKinsey Quarterly, March 2010.) Reducing bias makes a difference. In this article, we will describe a straightforward way to detect bias and minimize its effects in the most common kind of decision that executives make: reviewing a recommendation from someone else and determining whether to accept it, reject it, or pass it on to the next level.

The Behavioral Economics of Decision Making

Daniel Kahneman (the lead author) and Amos Tversky introduced the idea of cognitive biases, and their impact on decision making, in 1974. Their research and ideas were recognized when Kahneman was awarded a Nobel Prize in economics in 2002. These biases, and behavioral psychology generally, have since captured the imagination of business experts. Below are some notable popular books on this topic:

Nudge: Improving Decisions About Health, Wealth, and Happiness by Richard H. Thaler and Cass R. Sunstein (Caravan, 2008)

Think Twice: Harnessing the Power of Counterintuition by Michael J. Mauboussin (Harvard Business Review Press, 2009)

Think Again: Why Good Leaders Make Bad Decisions and How to Keep It from Happening to You by Sydney Finkelstein, Jo Whitehead, and Andrew Campbell (Harvard Business Review Press, 2009)

Predictably Irrational: The Hidden Forces That Shape Our Decisions by Dan Ariely (HarperCollins, 2008)

Thinking, Fast and Slow by Daniel Kahneman (Farrar, Straus and Giroux, forthcoming in 2011)

For most executives, these reviews seem simple enough. First, they need to quickly grasp the relevant facts (getting them from people who know more about the details than they do). Second, they need to figure out if the people making the recommendation are intentionally clouding the facts in some way. And finally, they need to apply their own experience, knowledge, and reasoning to decide whether the recommendation is right.

However, this process is fraught at every stage with the potential for distortions in judgment that result from cognitive biases. Executives can’t do much about their own biases, as we shall see. But given the proper tools, they can recognize and neutralize those of their teams. Over time, by using these tools, they will build decision processes that reduce the effect of biases in their organizations. And in doing so, they’ll help upgrade the quality of decisions their organizations make.

The Challenge of Avoiding Bias

Let’s delve first into the question of why people are incapable of recognizing their own biases.

According to cognitive scientists, there are two modes of thinking, intuitive and reflective. (In recent decades a lot of psychological research has focused on distinctions between them. Richard Thaler and Cass Sunstein popularized the distinction in their book, Nudge.) In intuitive, or System One, thinking, impressions, associations, feelings, intentions, and preparations for action flow effortlessly. System One produces a constant representation of the world around us and allows us to do things like walk, avoid obstacles, and contemplate something else all at the same time. We’re usually in this mode when we brush our teeth, banter with friends, or play tennis. We’re not consciously focusing on how to do those things; we just do them.

In contrast, reflective, or System Two, thinking is slow, effortful, and deliberate. This mode is at work when we complete a tax form or learn to drive. Both modes are continuously active, but System Two is typically just monitoring things. It’s mobilized when the stakes are high, when we detect an obvious error, or when rule-based reasoning is required. But most of the time, System One determines our thoughts.

Our visual system and associative memory (both important aspects of System One) are designed to produce a single coherent interpretation of what is going on around us. That sense making is highly sensitive to context. Consider the word “bank.” For most people reading HBR, it would signify a financial institution. But if the same readers encountered this word in Field & Stream, they would probably understand it differently. Context is complicated: In addition to visual cues, memories, and associations, it comprises goals, anxieties, and other inputs. As System One makes sense of those inputs and develops a narrative, it suppresses alternative stories.

Because System One is so good at making up contextual stories and we’re not aware of its operations, it can lead us astray. The stories it creates are generally accurate, but there are exceptions. Cognitive biases are one major, well-documented example. An insidious feature of cognitive failures is that we have no way of knowing that they’re happening: We almost never catch ourselves in the act of making intuitive errors. Experience doesn’t help us recognize them. (By contrast, if we tackle a difficult problem using System Two thinking and fail to solve it, we’re uncomfortably aware of that fact.)

This inability to sense that we’ve made a mistake is the key to understanding why we generally accept our intuitive, effortless thinking at face value. It also explains why, even when we become aware of the existence of biases, we’re not excited about eliminating them in ourselves. After all, it’s difficult for us to fix errors we can’t see.

By extension, this also explains why the management experts writing about cognitive biases have not provided much practical help. Their overarching theme is “forewarned is forearmed.” But knowing you have biases is not enough to help you overcome them. You may accept that you have biases, but you cannot eliminate them in yourself.

There is reason for hope, however, when we move from the individual to the collective, from the decision maker to the decision-making process, and from the executive to the organization. As researchers have documented in the realm of operational management, the fact that individuals are not aware of their own biases does not mean that biases can’t be neutralized—or at least reduced—at the organizational level.

This is true because most decisions are influenced by many people, and because decision makers can turn their ability to spot biases in others’ thinking to their own advantage. We may not be able to control our own intuition, but we can apply rational thought to detect others’ faulty intuition and improve their judgment. (In other words, we can use our System Two thinking to spot System One errors in the recommendations given to us by others.)

This is precisely what executives are expected to do every time they review recommendations and make a final call. Often they apply a crude, unsystematic adjustment—such as adding a “safety margin” to a forecasted cost—to account for a perceived bias. For the most part, however, decision makers focus on content when they review and challenge recommendations. We propose adding a systematic review of the recommendation process, one aimed at identifying the biases that may have influenced the people putting forth proposals. The idea is to retrace their steps to determine where intuitive thinking may have steered them off-track.

In the following section, we’ll walk you through how to do a process review, drawing on the actual experiences of three corporate executives—Bob, Lisa, and Devesh (not their real names)—who were asked to consider very different kinds of proposals:

Three Executives Facing Very Different Decisions

Bob, the vice president of sales in a business services company, has heard a proposal from his senior regional VP and several colleagues, recommending a radical overhaul of the company’s pricing structure.

Bob should encourage his sales team to evaluate other options, such as a targeted marketing program aimed at the customer segments in which the company has a competitive advantage.

Lisa is the chief financial officer of a capital-intensive manufacturing company. The VP of manufacturing in one of the corporation’s business units has proposed a substantial investment in one manufacturing site.

Lisa should have her team look at the proposed capacity improvement the way an incoming CEO might, asking: If I personally hadn’t decided to build this plant in the first place, would I invest in expanding it?

Devesh, the CEO of a diversified industrial company, has just heard his business development team propose a major acquisition that would complement the product line in one of the company’s core businesses.

Devesh should ask his M&A team to prepare a worst-case scenario that reflects the merger’s risks, such as the departure of key executives, technical problems with the acquisition’s products, and integration problems.

A radical pricing change.

Bob is the vice president of sales in a business services company. Recently, his senior regional VP and several colleagues recommended a total overhaul of the company’s pricing structure. They argued that the company had lost a number of bids to competitors, as well as some of its best salespeople, because of unsustainable price levels. But making the wrong move could be very costly and perhaps even trigger a price war.

A large capital outlay.

Lisa is the chief financial officer of a capital-intensive manufacturing company. The VP of manufacturing in one of the corporation’s business units has proposed a substantial investment in one manufacturing site. The request has all the usual components—a revenue forecast, an analysis of return on investment under various scenarios, and so on. But the investment would be a very large one—in a business that has been losing money for some time.

A major acquisition.

Devesh is the CEO of a diversified industrial company. His business development team has proposed purchasing a firm whose offerings would complement the product line in one of the company’s core businesses. However, the potential deal comes on the heels of several successful but expensive takeovers, and the company’s financial structure is stretched.

While we are intentionally describing this review from the perspective of the individual decision makers, organizations can also take steps to embed some of these practices in their broader decision-making processes. (For the best ways to approach that, see the sidebar “Improving Decisions Throughout the Organization.”)

Improving Decisions Throughout the Organization

To critique recommendations effectively and in a sustainable way, you need to make quality control more than an individual effort.

Organizations pursue this objective in various ways, but good approaches have three principles in common. First, they adopt the right mind-set. The goal is not to create bureaucratic procedures or turn decision quality control into another element of “compliance” that can be delegated to a risk assessment unit. It’s to stimulate discussion and debate. To accomplish this, organizations must tolerate and even encourage disagreements (as long as they are based on facts and not personal).

Second, they rotate the people in charge, rather than rely on one executive to be the quality policeman. Many companies, at least in theory, expect a functional leader such as a CFO or a chief strategy officer to play the role of challenger. But an insider whose primary job is to critique others loses political capital quickly. The use of a quality checklist may reduce this downside, as the challenger will be seen as “only playing by the rules,” but high-quality debate is still unlikely.

Third, they inject a diversity of views and a mix of skills into the process. Some firms form ad hoc critique teams, asking outsiders or employees rotating in from other divisions to review plans. One company calls them “provocateurs” and makes playing this role a stage of leadership development. Another, as part of its strategic planning, systematically organizes critiques and brings in outside experts to do them. Both companies have explicitly thought about their decision processes, particularly those involving strategic plans, and invested effort in honing them. They have made their decision processes a source of competitive advantage.

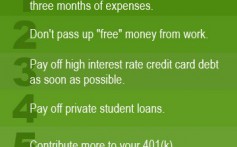

Decision Quality Control: A Checklist

To help executives vet decisions, we have developed a tool, based on a 12-question checklist, that is intended to unearth defects in thinking—in other words, the cognitive biases of the teams making recommendations. The questions fall into three categories: questions the decision makers should ask themselves, questions they should use to challenge the people proposing a course of action, and questions aimed at evaluating the proposal. It’s important to note that, because you can’t recognize your own biases, the individuals using this quality screen should be completely independent from the teams making the recommendations.