Stock Price Prediction Based on Procedural Neural Networks

Posted on: 16 March 2015

Department of Electrical Engineering, Jiangnan University, Wuxi 214122, China

Received 11 January 2011; Revised 28 March 2011; Accepted 6 April 2011

Academic Editor: Songcan Chen

Abstract

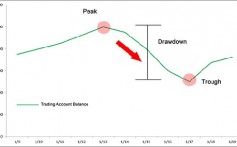

We present a spatiotemporal model, namely, procedural neural networks (PNNs), for stock price prediction. Compared with some successful traditional models for simulating the stock market, such as BNN (backpropagation neural networks), HMM (hidden Markov model), and SVM (support vector machine), the procedural neural network model processes both spatial and temporal information synchronously without a sliding time window, which is typically used in the well-known recurrent neural networks. Two different structures of procedural neural networks are constructed for modeling multidimensional time series problems. Learning algorithms for training the models and sustained improvement of learning are presented and discussed. Experiments on Yahoo stock market data from the past decade are carried out, and the simulation results of PNN, BNN, HMM, and SVM are compared.

1. Introduction

From the beginning of time it has been humanity's common goal to make life easier and richer. The prevailing notion in society is that wealth brings comfort and luxury, so it is not surprising that so much work has been done on ways to predict the markets. Ever since stocks came into being, predicting their movement has been a focus of interest, since it can yield significant profits. There are several motivations for trying to predict stock market prices. The most basic of these is financial gain. Any system that can consistently pick winners and losers in the dynamic marketplace would make the owner of the system very wealthy. Thus, many individuals, including researchers, investment professionals, and average investors, are continually looking for this superior system which will yield them high returns. There is a second motivation in the research and financial communities. It has been proposed in the efficient market hypothesis (EMH) that markets are efficient in that opportunities for profit are discovered so quickly that they cease to be opportunities [1]. The EMH effectively states that no system can continually beat the market because, if this system becomes public, everyone will use it, thus negating its potential gain.

Predicting the trends of a stock price is a practically interesting and challenging topic. Fundamental and technical analyses were the first two methods used to forecast stock prices. Various technical, fundamental, and statistical indicators have been proposed and used with varying results. However, no one technique or combination of techniques has been successful enough to consistently beat the market. With the development of neural networks, researchers and investors are hoping that the market mysteries can be unraveled. Although this is not an easy job because of the market's nonlinearity and uncertainty, many methods have been tried, for example, artificial neural networks [2], fuzzy logic [3], evolutionary algorithms [4], statistical learning [5], Bayesian belief networks [6], hidden Markov models [7], granular computing [8], fractal geometry [9], and wavelet analysis [10].

Recently, a novel model named procedural neural networks (PNNs) was proposed to deal with spatiotemporal data modeling problems, especially for multidimensional time series with large amounts of data [11]. Different from the traditional multilayer backpropagation neural networks (BNNs), the data in a PNN are accumulated along the time axis before or after combining the contributions of the space components. While collecting these data, different components do not have to be sampled simultaneously, but only at the same intervals [12]. In this way, time series problems subjected to synchronous sampling in all dimensions can be simulated by PNN. Moreover, the dimensional scale of the input for PNN does not increase, whereas in the recurrent BNN a fixed sliding time window, which makes the dimensional scale large, is usually chosen to deal with time series data [2]. As a result, the complexity of PNNs is intuitively decreased both in the scale of the model and in the time cost of training. Intrinsically, PNN differs from BNN in its mathematical mapping. BNN tries to map an $n$-dimensional point to another point in an $m$-dimensional space, while PNN tends to map an $n$-dimensional function to an $m$-dimensional point [13]. In contrast to previous work, this paper focuses on two quite different structures of PNNs and their application to stock market prediction.
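
The remark about input dimension can be illustrated with a minimal sketch. It assumes a toy series and a hypothetical helper `sliding_window_inputs` (neither comes from the paper): a sliding window of length `window` over an $n$-dimensional series yields flat input vectors of length $n \times$ `window`, whereas a PNN would take the $n$ component functions directly.

```python
def sliding_window_inputs(series, window):
    """Flatten each run of `window` consecutive rows into one input vector.

    `series` is a list of T rows, each row holding the n component values
    sampled at one time step (an illustrative layout, not the paper's).
    """
    samples = []
    for start in range(len(series) - window + 1):
        flat = [value for row in series[start:start + window] for value in row]
        samples.append(flat)
    return samples


# Toy data: T = 6 time steps, n = 2 components per step.
series = [[float(t), float(10 * t)] for t in range(6)]

windowed = sliding_window_inputs(series, window=3)
window_input_dim = len(windowed[0])  # grows with the window: n * window
pnn_input_dim = len(series[0])       # stays at n input functions

print(window_input_dim, pnn_input_dim)
```

Doubling the window length doubles the size of every input vector in the windowed scheme, which is the growth in "dimensional scale" the paragraph refers to.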

The rest of this paper is organized as follows. In Section 2, some general theories and analysis of stock markets are mentioned and some typical models for stock price prediction are introduced. In Section 3, the procedural neural network model is described in detail. In Section 4, the learning algorithm for training procedural neural networks is constructed, and the computational complexity of the algorithm is discussed. In Section 5, several experimental results are provided. Finally, Section 6 concludes this paper.

2. Typical Models for Stock Price Prediction

Stock markets are not perfect but pretty tough! Stock markets are filled with a certain energy and excitement. Such excitement and emotion are brought about by the prospect of making a buck, or by buying and selling in the hope of getting rich. Many people buy and sell shares in a rush to make huge fortunes (and usually make huge losses). Only by knowing future information about a particular market that nobody else knows can you hope to make a definite profit [8]. Stock market efficiency has been studied extensively over the past 40 years. Many of the reported anomalies could be the result of mismeasurements and of the failure to incorporate time-varying risks and returns as well as the cost of information [14]. In today's information age, there seems to be too much information out there, with many opportunities to get overloaded or just plainly confused. The key is to decide which ideas are valid in which context and also to decide on what you believe. The same goes when it comes to investing in shares.

With the development of stock chart software, you may backtest stock trading strategies, create stock trading systems, view buy and sell signals on the charts, and do a lot more. Today's charting packages have up to hundreds of predefined indices and let you define your own for analyzing stock markets [1]. Given the number of possible choices, which indexes do you use? Your choice depends on what you are attempting to model. If you are after daily changes in the stock market, then use daily figures of the all-ordinaries index. If you are after long-term trends, then use long-term indexes like the ten-year bond yield. Even an indicator that is a good representation of the total market is still no guarantee of a successful result. In summary, success depends heavily on the tool, or the model, that you use [8].

2.1. Statistical Methods: Hidden Markov Model and Bayes Networks

Markov models [15, 16] are widely used to model sequential processes and have achieved many practical successes in areas such as web log mining, computational biology, speech recognition, natural language processing, robotics, and fault diagnosis. The first-order Markov model contains a single variable, the state, and specifies the probability of each state and of transiting from one state to another. Hidden Markov models (HMMs) [17] contain two variables, that is, the (hidden) state and the observation. In addition to the transition probabilities, HMMs specify the probability of making each observation in each state. Because the number of parameters of a first-order Markov model is quadratic in the number of states (and higher for higher-order models), learning Markov models is feasible only in relatively small state spaces. This requirement makes them unsuitable for many data mining applications, which are concerned with very large state spaces.
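
As a rough illustration of the quantities described above, the following sketch evaluates the likelihood of an observation sequence under a small discrete HMM with the standard forward algorithm, and counts the model's parameters. The two-state model, its probabilities, and the names `forward_likelihood` and `parameter_count` are illustrative assumptions, not taken from the cited works.

```python
def forward_likelihood(initial, transition, emission, observations):
    """Likelihood of an observation sequence under a discrete HMM.

    initial[s]       : probability of starting in state s
    transition[r][s] : probability of moving from state r to state s
    emission[s][o]   : probability of observing symbol o in state s
    """
    n_states = len(initial)
    # alpha[s] = P(observations so far, current state = s)
    alpha = [initial[s] * emission[s][observations[0]] for s in range(n_states)]
    for obs in observations[1:]:
        alpha = [
            sum(alpha[prev] * transition[prev][s] for prev in range(n_states))
            * emission[s][obs]
            for s in range(n_states)
        ]
    return sum(alpha)


def parameter_count(n_states, n_symbols):
    """Initial + transition + emission entries; quadratic in n_states."""
    return n_states + n_states * n_states + n_states * n_symbols


# Hypothetical two-state model; symbols 0 and 1 could stand for "down"/"up".
initial = [0.6, 0.4]
transition = [[0.7, 0.3], [0.4, 0.6]]
emission = [[0.9, 0.1], [0.2, 0.8]]

likelihood = forward_likelihood(initial, transition, emission, [0, 1, 0])
print(likelihood, parameter_count(2, 2), parameter_count(100, 2))
```

The transition table alone has one entry per ordered pair of states, which is why the parameter count grows quadratically and learning becomes impractical for very large state spaces.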

Dynamic Bayesian networks (DBNs) generalize Markov models by allowing states to have an internal structure [18]. In a DBN, a state is represented by a set of variables, which can depend on each other and on variables in previous states. If the dependency structure is sufficiently sparse, it is possible to successfully learn and reason in much larger state spaces than with Markov models. However, DBNs are still restricted by the assumption that all states are described by the same variables with the same dependencies. In many applications, states naturally fall into different classes and each class is described by a different set of variables.

Recently, there has been considerable interest in the application of regime-switching models driven by a hidden Markov chain to various financial problems. For an overview of the hidden Markov chain and its financial applications, see the work of Elliott et al. [19], Elliott and Kopp [20], and Aggoun and Elliott [21]. Works on the use of the hidden Markov chain in finance include those of Buffington and Elliott [22, 23] for pricing European and American options, of Ghezzi and Piccardi [24] for stock valuation, and of Elliott et al. [25] for option valuation in an incomplete market. Most of the literature concerns the pricing of options under a continuous-time Markov-modulated process, while Hassan et al. [26] propose and implement a fusion model that combines the hidden Markov model (HMM), artificial neural networks (ANNs), and genetic algorithms (GAs) to forecast financial market behavior.

2.2. Fractal and Dynamic Methods: Fractal Geometric Chaos

The chaos theory [27] assumes that the return dynamics are not normally distributed and that more complex approaches have to be used to study these time series. In fact, the Fractal Market Hypothesis assumes that the return dynamics do not depend on investors' attitudes and represent the result of the interaction of traders who frequently adopt different investment styles. The studies proposed in the literature to analyze and predict stock price dynamics assume that, by looking at the past, one may collect useful information to understand the price formation mechanism. The initial approaches proposed in the literature, the so-called technical analysis, assume that the price dynamics can be approximated with linear trends and analyzed using a standard mathematical or graphical approach [28]. The high number of factors that are likely to influence the stock market dynamics makes this assumption incorrect and calls for the definition of more complex approaches that may succeed in studying these multiple relationships [29].

The nonlinear models are a heterogeneous set of econometric approaches that allow higher predictability levels, but not all the approaches may be easily applied to real data [30]. Deterministic chaos represents the best trade-off for establishing fixed rules that link future dynamics to past results of a time series without imposing excessively simple assumptions [31]. In essence, chaos is a nonlinear deterministic process that looks random [32] because it is the result of an irregular oscillatory process influenced by an initial condition and characterized by an irregular periodicity [33]. The chaos theory assumes that complex dynamics may be explained if they are considered as a combination of simpler trends that are easy to understand [34]: the higher the number of breakdowns, the higher the probability of identifying a few previously known basic profiles [35]. Chaotic trends may be studied by considering some significant points that represent attractors or deflectors for the time series being analyzed, together with the periodicity that exists in the relevant data. To improve the prediction accuracy of complex multivariate chaotic time series, a scheme has recently been proposed based on multivariate local polynomial fitting with the optimal kernel function [36], which combines the advantages of traditional local, weighted, multivariate prediction methods.

2.3. Soft Computing: Neural Networks, Fuzzy Logic, and Genetic Algorithms

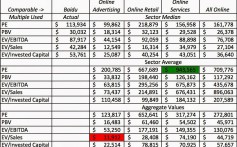

Apparently, White (1988) was the first to use backpropagation neural networks (BNNs) for market forecasting [1]. He was curious about whether BNNs could be used to extract nonlinear regularities from economic time series and thereby decode previously undetected regularities in asset price movements, such as fluctuations of common stock prices. White found that his training results were overoptimistic, being the result of overfitting or of learning evanescent features. Since then, it has been well established that fusing the soft computing (SC) technologies, for example, BNN, fuzzy logic (FL), and genetic algorithms (GAs), may significantly improve the analysis (Jain and Martin 1999 [37], Abraham et al. 2001 [38]). There are two main reasons for this. First, these technologies are mostly complementary and synergistic. They are complementary in that BNNs are used for learning and curve fitting, FL is used to deal with imprecision and uncertainty, and GAs [39] are used for search and optimization. Second, as Zadeh (1992) [40] pointed out, merging these technologies allows for the exploitation of a tolerance for imprecision, uncertainty, and partial truth to achieve tractability, robustness, and low solution cost. Market forecasting and trading rules have numerous facets with potential applications for hybrids of the SC technologies. Given this potential and the impetus on SC during the last decade, it is not surprising that a number of SC studies have focused on market forecasting and trading applications. As an example, Kuo et al. [3] use a genetic-algorithm-based fuzzy neural network to measure the qualitative effects on the stock price. A recent work introduced the generalized regression neural network (GRNN), which is used in various prediction and forecasting tasks [41]. Owing to the robustness and flexibility of their modeling algorithms, neurocomputational models are expected to outperform traditional statistical techniques such as regression and ARIMA in forecasting stock exchange price movements.

2.4. Machine Learning

Applications of machine learning (ML) to stock market analysis include portfolio optimization, investment strategy determination, and market risk analysis. Duerson et al. [42] focus on the problem of investment strategy determination through the use of reinforcement learning techniques. Four techniques, two based on recurrent reinforcement learning (RRL) and two based on Q-learning, were utilized. Q-learning produced results that consistently beat buy-and-hold strategies on several technology stocks, whereas the RRL methods were often inconsistent, and the behavior of the recurrent reinforcement learner needs further analysis. Although the technical justification for RRL appears to be the most rigorous in the literature, the simpler methods produced more consistent results. It has been observed that the performance of the turning point indicator is generally better during short runs of trading, which supports the validity of charting analysis techniques as used by professional stock traders.

The support vector machine (SVM) is a very specific learning algorithm characterized by the capacity control of the decision function, the use of kernel functions, and the sparsity of the solution. Huang et al. [43] investigate the predictability of financial movement direction with SVM by forecasting the weekly movement direction of the NIKKEI 225 index. SVM is a promising tool for financial forecasting. As demonstrated in their empirical analysis, SVM seems to be superior to other individual classification methods in forecasting weekly movement direction. This is a clear message for financial forecasters and traders, which can lead to a capital gain. However, each method is known to have its own strengths and weaknesses. The weakness of one method can be balanced by the strengths of another, achieving a systematic effect. In their study, the combined model performs best among all the forecasting methods.

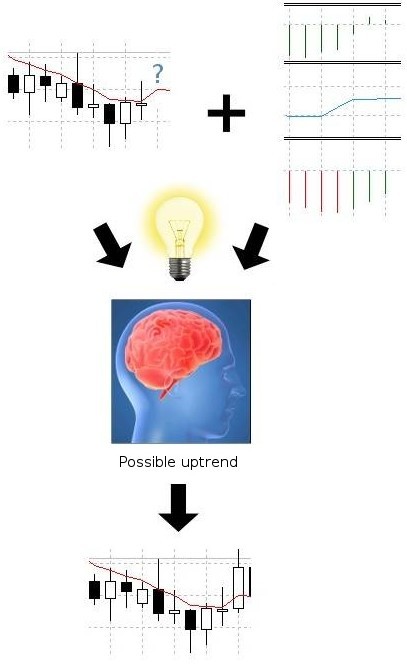

For time series prediction, SVM is utilized as a regression function. But while preparing samples for SVM, all functions, which are spread over a certain interval of time, have to be converted to spatial vectors. So, in essence, SVM still performs functions that map static vectors from one space to another. PNN combines the spatial and temporal information together; namely, neurons process information from both space and time simultaneously. In [44], the author proposed an extended model, named the support function machine (SFM), in which each component of the vector is a time function, and applied it to predict stock prices.

3. Procedural Neural Network Models

3.1. Procedural Neuron Model

The invention of the procedural neuron provides an alternative modeling strategy to simulate time series problems which are related to some procedures [11]. This model also offers an approach to study dynamic characteristics in classification or regression problems with a great deal of spatiotemporal data. The procedural neuron differs from the traditional artificial neuron in that it combines spatial and temporal information. In this way, neurons are endowed with spatial and temporal characteristics simultaneously. The weights, which connect neurons, are usually variable, that is, functions of time. The neurons are expected to be time-accumulating: they will not fire until the input has accumulated over a sufficiently long period of time. Compared with traditional artificial neurons, procedural neurons simulate biological neurons better physiologically. Moreover, many problems in real life can be reduced to a procedure, for example, agricultural planting, industrial production, and chemical reactions. However, it is mostly impracticable to simulate such procedures in the traditional way by constructing mathematical or physical equations.

A typical procedural neuron accepts a series of inputs with multiple dimensions and produces only one corresponding output. In a procedural neuron, the aggregation operation involves not only the assembly of multiple inputs in space, but also the accumulation in the time domain. So the procedural neuron is an extension of the traditional neuron into the time domain, and the traditional neuron can be regarded as a special case of the procedural neuron. The structure of a procedural neuron with continuous time is shown in Figure 1, in which $X(t) = [x_1(t), x_2(t), \ldots, x_n(t)]^T$ is the input function vector of the procedural neuron, $W(t) = [w_1(t), w_2(t), \ldots, w_n(t)]^T$ is the weight function (or weight function vector) on the interval $[t_1, t_2]$, and $f$ is the activation function, such as a linear, sigmoid, or Gaussian function. There are two forms of accumulation, time first (the left in Figure 1) and space first (the right in Figure 1).

Mathematically, the static output of the procedural neuron in Figure 1 can be written as follows:

$$y = f\left(\int_{t_1}^{t_2} W(t)^{T} X(t)\,\mathrm{d}t\right). \tag{1}$$

In detail, the corresponding component form of (1) is

$$y = f\left(\int_{t_1}^{t_2} \sum_{i=1}^{n} w_i(t)\,x_i(t)\,\mathrm{d}t\right) = f\left(\sum_{i=1}^{n} \int_{t_1}^{t_2} w_i(t)\,x_i(t)\,\mathrm{d}t\right). \tag{2}$$
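
The two sides of (2) can be checked numerically. The sketch below is only an illustration under stated assumptions: the trapezoid rule stands in for the integral, the activation is a sigmoid, and the input and weight functions are arbitrary toy choices that do not come from the paper. All function names are hypothetical.

```python
import math


def trapezoid(func, t1, t2, steps=1000):
    """Approximate the integral of func over [t1, t2] with the trapezoid rule."""
    h = (t2 - t1) / steps
    total = 0.5 * (func(t1) + func(t2))
    for k in range(1, steps):
        total += func(t1 + k * h)
    return total * h


def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))


def neuron_space_first(weights, inputs, t1, t2, f=sigmoid):
    """Sum over the n components at each t, then integrate over time."""
    def integrand(t):
        return sum(w(t) * x(t) for w, x in zip(weights, inputs))
    return f(trapezoid(integrand, t1, t2))


def neuron_time_first(weights, inputs, t1, t2, f=sigmoid):
    """Integrate each component over time, then sum over the n components."""
    per_component = [
        trapezoid(lambda t, w=w, x=x: w(t) * x(t), t1, t2)
        for w, x in zip(weights, inputs)
    ]
    return f(sum(per_component))


# Toy functions on [0, 1]: x1(t) = t, x2(t) = 1, w1(t) = 1, w2(t) = t.
inputs = [lambda t: t, lambda t: 1.0]
weights = [lambda t: 1.0, lambda t: t]

y_space_first = neuron_space_first(weights, inputs, 0.0, 1.0)
y_time_first = neuron_time_first(weights, inputs, 0.0, 1.0)
print(y_space_first, y_time_first)
```

Because integration is linear, both orders of accumulation give the same output; for these toy functions the aggregated value inside $f$ is $\int_0^1 2t\,\mathrm{d}t = 1$.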

The procedural neuron in Figure 1 is valuable mainly in theory, because most neural networks are constructed to solve discrete problems. In the case of discrete time, the procedural neuron takes the form shown in Figure 2 or Figure 3, in which the input data have been sampled along the time axis and appear as a matrix $\{x_{ij}\}_{n \times T}$.
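
A discrete counterpart can be sketched as follows. This is an assumption made for illustration, not the paper's definition: the integral in (2) is replaced by a plain sum over the $T$ time samples, with a weight matrix $\{w_{ij}\}_{n \times T}$ of the same shape as the input, so that the aggregated value is $\sum_{i=1}^{n}\sum_{j=1}^{T} w_{ij} x_{ij}$. The function names and the sample values are hypothetical.

```python
def aggregate_time_first(x, w):
    """Accumulate each component (row) over time, then sum over components."""
    return sum(
        sum(w_ij * x_ij for w_ij, x_ij in zip(w_row, x_row))
        for w_row, x_row in zip(w, x)
    )


def aggregate_space_first(x, w):
    """Combine the components at each time step (column), then sum over time."""
    n, T = len(x), len(x[0])
    return sum(sum(w[i][j] * x[i][j] for i in range(n)) for j in range(T))


def discrete_procedural_neuron(x, w, f=lambda u: u):
    """Apply the activation f to the aggregated input (identity by default)."""
    return f(aggregate_time_first(x, w))


# Toy input: n = 2 components sampled at T = 3 time points.
x = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]
w = [[0.1, 0.2, 0.3],
     [0.0, 0.5, 1.0]]

y = discrete_procedural_neuron(x, w)
print(y, aggregate_space_first(x, w))
```

With a single time sample ($T = 1$) this reduces to the weighted sum of a traditional neuron, in line with the remark that the traditional neuron is a special case.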