Innovation Risk: How to Make Smarter Decisions

Posted on: 23 May 2015

Reprint: R1304B

New products and services are created to enable people to do tasks better than they previously could or do things they couldn’t before. But innovations also carry risks. Just how risky an innovation turns out to be depends in great measure on the choices people make in using it.

Attempts to gauge the riskiness of an innovation must take into account the limitations of the models (formal and informal) on which people base their decisions about how to use the innovation, warns Robert C. Merton, MIT professor and Nobel laureate in economics. Some models turn out to be fundamentally flawed and should be jettisoned, he argues, while others are merely incomplete and can be improved upon. Some models require sophisticated users to produce good results; others are suitable only for certain applications.

And even when people employ appropriate models to make choices about how to use an innovation, striking the right balance between risk and performance, experience shows that it is almost impossible to predict how their changed behavior will influence the riskiness of other choices and behaviors they make, often in apparently unrelated domains. It’s the old story of unintended consequences. The more complex the system an innovation enters, the more likely and severe its unintended consequences will be. Indeed, many of the risks associated with an innovation stem not from the innovation itself but from the infrastructure into which it is introduced.

In the end, any innovation involves a leap into the unknowable. If we are to make progress, however, that’s a fact we need to accept and to manage.

New products and services are created to enable people to do tasks better than they previously could, or to do things that they couldn’t before. But innovations also carry risks. Just how risky an innovation proves to be depends in great measure on the choices people make in using it.

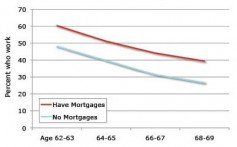

Ask yourself this: If you had to drive from Boston to New York in a snowstorm, would you feel safer in a car with four-wheel drive or two-wheel drive? Chances are, you’d choose four-wheel drive. But if you were to look at accident statistics, you’d find that the advent of four-wheel drive hasn’t done much to lower the rate of passenger accidents per passenger mile on snowy days. That might lead you to conclude that the innovation hasn’t made driving in the snow any safer.

Of course, what has happened is not that the innovation has failed to make us safer but that people have changed their driving habits because they feel safer. More people are venturing out in the snow than used to be the case, and they are probably driving less carefully as well. If you and everyone else were to drive to New York at the same speed and in the same numbers as you did before, four-wheel drive would indeed make you a lot safer. But if you and everyone else were to drive a lot faster, you’d face the same amount of risk you’ve always had in a snowstorm. In essence, you’re making a choice (consciously or unconsciously) between lowering your risk and improving your performance.
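The trade-off can be made concrete with a deliberately crude sketch. Everything in it is an assumption for illustration, not accident data: a risk index in arbitrary units that rises with the square of speed and falls in proportion to traction, with four-wheel drive modeled as doubling traction on snow.

```python
# Toy risk-compensation model. The functional form, the traction values,
# and the speeds are hypothetical; the index is in arbitrary units.
import math

def risk_index(speed_mph: float, traction: float, k: float = 1e-4) -> float:
    """Hypothetical per-mile risk: grows with speed squared, shrinks with traction."""
    return k * speed_mph ** 2 / traction

TWO_WD = 1.0   # assumed baseline traction on snow
FOUR_WD = 2.0  # assumed: four-wheel drive doubles traction

baseline = risk_index(40, TWO_WD)   # old car, old habits
safer = risk_index(40, FOUR_WD)     # new car, old habits: risk halves
# Speed at which the 4WD driver is back to the baseline risk:
# k * v**2 / FOUR_WD == k * 40**2 / TWO_WD  =>  v = 40 * sqrt(FOUR_WD / TWO_WD)
faster_speed = 40 * math.sqrt(FOUR_WD / TWO_WD)
faster = risk_index(faster_speed, FOUR_WD)  # new car, new habits: risk unchanged

print(f"2WD at 40 mph: risk {baseline:.3f}")
print(f"4WD at 40 mph: risk {safer:.3f}")
print(f"4WD at {faster_speed:.0f} mph: risk {faster:.3f}")
```

The same car supports either outcome; which one materializes is the driver’s choice between lower risk and better performance.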

If the riskiness of an innovation depends on the choices people make, it follows that the more informed and conscious their choices are, the lower the risk will be. But as companies and policy makers think through the consequences of an innovation—how it will change the trade-offs people make and their behavior—they must be mindful of the limitations of the models on which people base their decisions about how to use the innovation. As we’ll see, some models turn out to be fundamentally flawed and should be jettisoned, while others can be improved upon. Some models are suited only to certain applications; some require sophisticated users to produce good results. And even when people use appropriate models to make choices about how to use an innovation—striking the right balance between risk and performance—experience shows us that it is almost impossible to predict how their changed behavior will influence the riskiness of other choices and behaviors they or others make, often in apparently unrelated domains. It’s the old story of unintended consequences. The more complex the system an innovation enters, the more likely and severe those consequences will be. Indeed, many of the risks associated with an innovation stem not from the innovation itself but from the infrastructure into which it is introduced.

The bottom line is that all innovations change the trade-off between risk and return. To minimize risk and unintended consequences, users, companies, and policy makers alike need to understand how to make informed choices when it comes to new products and services. In particular, they should respect five rules of thumb.

Recognize That You Need a Model

When you adopt a new product or technology, your decision about risk and return is informed by what cognitive scientists call a mental model. In the case of driving to New York in the snow, you might think, “I can’t control all the risks associated with making the trip, but I can choose the type of car I drive and the speed at which I drive it.” A simple mental model for assessing trade-offs between risk and performance, therefore, might be represented by a graph that plots safety against type of car and speed.

Of course, this model is a gross simplification. The relationship between safety and speed will depend on other variables (the weather and road conditions, the volume of traffic, the speed of other cars on the road), many of which are out of your control. To make the right choices, you have to understand precisely how all these variables relate to one another and to your choice of speed. But the more factors you incorporate, the more complicated it becomes to assess the risks associated with a given speed. To make an accurate assessment, you’d need to compile data, estimate parameters for all the factors, and determine how those factors might interact.

Historically, most models that people actually have applied to real-life situations have existed semiconsciously in people’s minds. Even today, when driving a car we reflexively draw on imprecise but robust mental models where relationships between factors are guessed at based on experience. But with the advent of computer technology, more and more activities that traditionally required human cognition have proved susceptible to formal mathematical modeling. When you cross the Atlantic on a commercial aircraft, for example, your plane will for the most part be flown by a computer, whose “decisions” about speed, altitude, and course are based on mathematical models that process continual input about location, air pressure, aircraft weight, the location of air traffic, wind speed, and a host of other factors. Computer pilots are now so sophisticated that they can even land a plane.

As with aircraft, so too with finance: The Black-Scholes formula for valuing stock options, which I helped develop back in the 1970s, attempts to establish the extent to which measurable or observable external factors—specifically, the price of the underlying asset, the volatility of that price, interest rates, and time to expiration—might relate to the price of an option to buy that particular asset. Financial firms use models like Black-Scholes to allow computers to conduct trades. You could, for example, program a computer to place an order to buy or sell a stock or an option if the program observed from market data that actual stock and option prices were deviating from valuations generated by Black-Scholes or some other rigorous valuation model.
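For readers who want to see the formula itself, here is a minimal sketch of the classic Black-Scholes value of a European call on a non-dividend-paying stock, using the four market inputs named above plus the contract’s strike price. The deviation-based trading rule and its 5% tolerance are hypothetical illustrations of the kind of program just described, not any firm’s actual strategy.

```python
import math

def norm_cdf(x: float) -> float:
    """Standard normal cumulative distribution function, via math.erf."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def black_scholes_call(S: float, K: float, sigma: float, r: float, T: float) -> float:
    """Black-Scholes value of a European call on a non-dividend-paying stock.

    S: price of the underlying, K: strike price, sigma: annualized volatility,
    r: continuously compounded risk-free rate, T: time to expiration in years.
    """
    d1 = (math.log(S / K) + (r + 0.5 * sigma ** 2) * T) / (sigma * math.sqrt(T))
    d2 = d1 - sigma * math.sqrt(T)
    return S * norm_cdf(d1) - K * math.exp(-r * T) * norm_cdf(d2)

def signal(market_price: float, model_price: float, tolerance: float = 0.05) -> str:
    """Hypothetical rule: trade only when the market price strays from the
    model value by more than `tolerance` (as a fraction of model value)."""
    gap = (market_price - model_price) / model_price
    if gap > tolerance:
        return "sell"  # option looks expensive relative to the model
    if gap < -tolerance:
        return "buy"   # option looks cheap relative to the model
    return "hold"

model = black_scholes_call(S=100.0, K=100.0, sigma=0.20, r=0.05, T=1.0)
print(f"model value: {model:.2f}")
print(signal(market_price=12.00, model_price=model))
```

The sample parameters are arbitrary; with them the model value is about 10.45, so a quoted price of 12.00 would trigger a sell signal under this rule.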

It seems reasonable, then, to suppose that the more factors your model incorporates, the better your assessment will be of the risks you incur in deciding whether and how to adopt a particular innovation. That explains to a great extent the popularity of mathematical modeling, especially with respect to technological and financial innovations. And many of these models do a pretty good job. The general replication methodology at the heart of Black-Scholes, for example, has been well substantiated by empirical evidence: Actual option and other derivative values do seem to correspond to those predicted by even simplified versions of the model. But it is precisely when you start to feel comfortable in your assessments that you need to really watch out.

Acknowledge Your Model’s Limitations

In building and using models—whether a financial pricing model or an aircraft’s autopilot function—it is critical to understand the difference between an incorrect model and an incomplete one.

An incorrect model is one whose internal logic or underlying assumptions are themselves manifestly wrong—for instance, a mathematical model for calculating the circumference of a circle that uses a value of 4.14 for pi. This is not to say, of course, that incorrectness is always easy to spot. An aircraft-navigation model that places New York’s La Guardia airport in Boston, for example, might not be recognized as flawed unless the planes it guided tried to fly to that airport. Once a model is found to be based on a fundamentally wrong assumption, the only proper thing to do is to stop using it.

Incompleteness is a very different problem and is a quality shared by all models. The Austrian American mathematician Kurt Gödel proved that no model is “true” in the sense that it is a complete representation of reality. As a model for pi, 3.14 is not wrong, but it is incomplete. A model of 3.14159 is less incomplete. Note that the less-incomplete model improves upon the base version rather than replacing it altogether. The basic model does not need to be unlearned but instead added to.
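The difference can be seen numerically. The sketch below scores each candidate value of pi from the text by its error in computing the circumference of a circle of radius 10 (an arbitrary choice).

```python
import math

def circumference_error(pi_model: float, radius: float = 10.0) -> float:
    """Absolute error of 2 * pi_model * r against the true circumference."""
    return abs(2 * pi_model * radius - 2 * math.pi * radius)

models = {
    "incorrect, 4.14": 4.14,
    "incomplete, 3.14": 3.14,
    "less incomplete, 3.14159": 3.14159,
}
for name, value in models.items():
    print(f"{name:26s} error = {circumference_error(value):.5f}")

# Refinement keeps the earlier digits and adds to them; nothing is unlearned.
print("3.14159".startswith("3.14"))
```

Adding digits to 3.14 steadily shrinks the error; adding digits to 4.14 never can, because the mistake sits in the leading digit.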

The distinction between incorrectness and incompleteness is an important one for scientists. As they develop models that describe our world and allow us to make predictions, they reject and stop using those that they find to be incorrect, whether through formal analysis of their workings or through testing of underlying assumptions. Those that survive are regarded as incomplete, rather than wrong, and therefore improvable. Consider again Black-Scholes. A growing arsenal of option models has emerged that extend the same underlying methodology beyond the basic formula, incorporating more variables and more-robust assumptions for specialized applications.

In general, until some fundamental violation of math in a model is detected or some error in the assumptions currently being fed into it is unearthed, the logical course is to refine rather than reject it. This is much easier said than done, however, which brings us to the next challenge.

Expect the Unexpected

Even with the best effort and ingenuity, some factors that could go into a model will be overlooked. No human being can possibly foresee all the consequences of an innovation, no matter how obvious they may seem in hindsight. This is particularly the case when an innovation interacts with other changes in the environment that in and of themselves are unrelated and thus not recognized as risk factors.

The 2007–2009 financial crisis provides a good example of such unintended consequences. Innovations in the real estate mortgage market that significantly lowered transaction costs made it easy for people not only to buy houses but also to refinance or increase their mortgages. People could readily replace equity in their property with debt, freeing up money to buy cars, vacations, and other desirable goods and services. There’s nothing inherently wrong in doing this, of course; it’s a matter of personal choice.

The intended (and good) consequence of the mortgage-lending innovations was to increase the availability of this low-cost choice. But there was also an unintended consequence: Because two other, individually benign economic trends—declining interest rates and steadily rising house prices—coincided with the changes in lending, an unusually large number of homeowners were motivated to refinance their mortgages at the same time, extracting equity from their houses and replacing it with low-interest, long-term debt.
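Why falling rates invite cash-out refinancing follows from the standard level-payment mortgage formula: at a lower rate, the same monthly payment supports a larger loan, and the difference can be taken out as cash. The $1,500 payment and the 8% and 6% rates below are hypothetical, and the loans are assumed to be fixed-rate, fully amortizing 30-year mortgages.

```python
def monthly_payment(principal: float, annual_rate: float, years: int = 30) -> float:
    """Level monthly payment on a fixed-rate, fully amortizing mortgage."""
    i = annual_rate / 12
    n = years * 12
    return principal * i / (1 - (1 + i) ** -n)

def affordable_loan(payment: float, annual_rate: float, years: int = 30) -> float:
    """Largest loan a given monthly payment can service (inverse of the above)."""
    i = annual_rate / 12
    n = years * 12
    return payment * (1 - (1 + i) ** -n) / i

PAYMENT = 1_500.0  # hypothetical monthly budget
old_loan = affordable_loan(PAYMENT, 0.08)
new_loan = affordable_loan(PAYMENT, 0.06)
print(f"loan supported at 8%: {old_loan:,.0f}")
print(f"loan supported at 6%: {new_loan:,.0f}")
print(f"cash released by refinancing at the same payment: {new_loan - old_loan:,.0f}")
```

With these numbers, the same payment carries roughly $46,000 more debt at 6% than at 8%, which a homeowner with enough equity can extract without raising the monthly outlay.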

The trend was self-reinforcing—rising house prices increased homeowner equity, which could then be extracted and used for consumption—and mortgage holders began to repeat the process over and over. As the trend continued, homeowners came to view these extractions as a regular source of financing for ongoing consumption, rather than as an occasional means of financing a particular purchase or investment. The result was that over time the leverage of homeowners of all vintages began to creep up, often to levels as high as those of new purchasers, instead of declining, as it normally would when house prices are on the rise.
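A stripped-down simulation shows the ratchet. Two hypothetical homeowners buy identical $300,000 houses with 80% loans, and prices then rise 8% a year. One does nothing; the other refinances every year back to 80% of the new value and spends the cash. Amortization, rate changes, and transaction costs are ignored, and all numbers are assumptions.

```python
def ltv_path(years: int, growth: float, price: float,
             target_ltv: float, refinance: bool) -> list[float]:
    """Loan-to-value ratio at the end of each year under steady price growth.

    A refinancer resets debt to target_ltv of the current value whenever
    that means borrowing more (cash-out only); a passive owner's debt is
    left unchanged. No amortization is modeled.
    """
    value = price
    debt = target_ltv * price
    path = []
    for _ in range(years):
        value *= 1 + growth
        if refinance:
            debt = max(debt, target_ltv * value)  # debt can ratchet up, never down
        path.append(debt / value)
    return path

passive = ltv_path(6, 0.08, 300_000, 0.80, refinance=False)
refinancer = ltv_path(6, 0.08, 300_000, 0.80, refinance=True)
for year, (p, r) in enumerate(zip(passive, refinancer), start=1):
    print(f"year {year}: passive LTV {p:.0%}   refinancer LTV {r:.0%}")
```

The passive owner’s leverage falls to about 50% as prices rise, while the refinancer stays at a new purchaser’s 80%. Set `growth` to zero and there is no equity to extract, which echoes the point that rising prices were an essential ingredient.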

Absent any one of the three conditions (an efficient mortgage refinancing market, low interest rates, and, especially, consistently rising house prices), it is unlikely that such a coordinated releveraging would have occurred. But because of the convergence of the three conditions, homeowners in the United States refinanced on an enormous scale for most of the decade preceding the financial crisis. The result was that many of them faced the same exposure to the risk of a decline in house prices at the same time—creating a systemic risk.

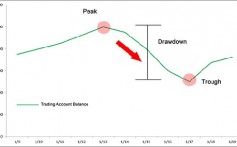

Compounding that risk was an asymmetry in the ability of homeowners to build risk up versus take it down again. When house prices are rising, it is easy to borrow money in increments and to secure those increments against increased house value. But if the trend reverses and home prices decline, homeowners’ leverage and risk increase while their equity shrinks with the drop in value. If a homeowner recognizes this and wants to rebalance to a more acceptable risk level, he discovers the asymmetry: There is no practical way to reduce his borrowings incrementally. He has to sell his whole house or do nothing; he can’t sell part of it. (For more on asymmetry in risk adjustment, see “Systemic Risk and the Refinancing Ratchet Effect,” by Amir Khandani, Andrew W. Lo, and Robert C. Merton, forthcoming, Journal of Financial Economics.) Because of this fundamental indivisibility, homeowners often choose to take no action in the hope that the decline of prices will reverse or at least stop. But if it continues, people eventually feel sufficiently financially squeezed that they may be forced to sell their houses. That can put a lot of houses on the market at once, which is hardly good for the hoped-for reversal in the price trend. Under these conditions, the mortgage market can be particularly vulnerable to even a modest dip in house prices and rise in interest rates. That scenario is exactly what took place during the recent financial crisis.
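The arithmetic of the squeeze is simple. With the debt D fixed and the house value V falling by a fraction δ, the loan-to-value ratio rises in inverse proportion to what remains of the value. The 80% and 50% starting ratios and the 20% decline below are illustrative numbers, not figures from the article:

```latex
\mathrm{LTV}_{\text{after}} = \frac{D}{V(1-\delta)} = \frac{\mathrm{LTV}_{\text{before}}}{1-\delta},
\qquad
\frac{0.80}{1-0.20} = 1.00,
\qquad
\frac{0.50}{1-0.20} = 0.625
```

A homeowner who kept refinancing back to 80% is left with no equity after a 20% fall, while one who never extracted equity from a 50% position still holds 37.5% of the house’s reduced value.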

Systemic Risk: When Three Rights Make a Wrong

Popular explanations for the 2007–2009 financial crisis focus on “fools” (those who should have seen the danger signs but didn’t) and “knaves” (those who did and chose to exploit them). Both surely contributed materially to the crisis. However, the crisis was also driven by structural interactions in the system that did not involve the irrational, uninformed, or unethical behavior of individuals.

Once you factor in the systemic exposure of U.S. homeowners, the losses in asset values predicted by existing financial models correspond closely to the losses actually realized. (See “Systemic Risk and the Refinancing Ratchet Effect,” by Amir Khandani, Andrew W. Lo, and Robert C. Merton, Journal of Financial Economics.) While this does not prove that the exposure in the 2007–2009 crisis was the result of low interest rates, easy refinancing, and steadily rising real estate prices, it does suggest that such structural effects may have been important factors, and that they could be the prime source of future crises. It also suggests that existing financial models do not necessarily deserve the obloquy that some have heaped upon them.

It also follows that you do not need to impute dubious motives and behavior to financial professionals to explain the crisis. Although such dysfunctional behavior certainly existed and played a role, the market’s general failure to predict the crisis was more likely a consequence of the models’ incompleteness (they did not take homeowner exposure into account, for instance) than of the models’ being fundamentally flawed or their users’ being corrupt.

To be sure, identifying and removing fools and knaves is always a good policy, but one should be aware that their removal is not nearly enough to ensure financial stability and avoid crisis.

Let me reiterate that the three factors involved in creating the risk (efficient refinancing opportunities, falling interest rates, and rising house prices) were individually benign. It is difficult to imagine that any regulatory agency would raise a red flag about any one of these conditions. For example, in response to the bursting of the tech bubble in 2000, the shock of 9/11, and the threat of recession, the U.S. Federal Reserve systematically lowered its bellwether interest rate, the Fed funds rate, from 6.5% in May 2000 to 1% in June 2003, which stimulated mortgage refinancing and the channels for doing so. Through 2007, lower interest rates and new mortgage products allowed more households to purchase homes that were previously unaffordable; rising home prices generated handsome wealth gains for those households; and more-efficient refinancing opportunities allowed households to realize their gains, fueling consumer demand and general economic growth. What politician or regulator would seek to interrupt such a seemingly virtuous cycle?

Understand the Use and the User

Let’s assume that you have built a model that is fundamentally correct: that is, it does not defy the laws of nature or no-arbitrage, nor does it contain manifestly flawed assumptions. Let’s also assume that it is more complete than other existing models. There is still no guarantee that it will work well for you. A model’s utility depends not just on the model itself but on who is using it and what they are using it for.

Let’s take the issue of application first. To put it simply, you wouldn’t choose a Ferrari for off-road travel any more than you would use a Land Rover to cut a dash on an Italian autostrada. Similarly, the Black-Scholes formula does not give option-value estimates accurate enough to be useful in ultra-high-speed options trading, an activity that requires real-time price data. By the same token, the models used for high-speed trading are useless in the corporate reporting of executive stock options’ expense value in accordance with generally accepted accounting principles. In that context, it’s important that the workings of the model be transparent, that it can be applied consistently across firms, and that the reported results can be reproduced and verified by others. Here, the classic Black-Scholes formula provides the necessary standardization and reproducibility, because it requires a limited number of input variables whose estimated values are a matter of public record.

A model is also unreliable if the person using it doesn’t understand it or its limitations. For most high-school students, a reasonable model for estimating the circumference of a circle is one that assumes a value of 22/7 for pi. This will give results accurate to a couple of decimal places, which will usually be sufficient for high-school-level work. Offering the students a much more complicated model would be rather like giving them that Ferrari to drive. The chances are high that they’ll crash it, and they don’t need to get to school that fast.
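The claim about 22/7 is easy to check: it agrees with pi when both are rounded to two decimal places, but not to three.

```python
import math

approx = 22 / 7
abs_error = abs(approx - math.pi)
print(f"22/7 = {approx:.6f}, pi = {math.pi:.6f}, difference = {abs_error:.6f}")
print(round(approx, 2) == round(math.pi, 2))  # agree to two places
print(round(approx, 3) == round(math.pi, 3))  # disagree at three places
```

For a circle of radius 10 cm, that difference changes the computed circumference by only about 0.025 cm.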

When you think about who uses models and for what, you often must rethink what qualifies people for a particular job. For many, the hero of the movie Top Gun, played by Tom Cruise, exemplifies the ideal fighter pilot: a daring rule breaker who flies by instinct and the seat of his pants rather than relying on instrumentation. Harrison Ford’s Han Solo from Star Wars fits the same mold. But today’s fighter planes are best run by computer programs that respond to external changes in the environment every millisecond, a rate no human could possibly match. Indeed, placing a zillion-dollar aeronautical computer system in the hands of a seat-of-pants maverick would be a rather risky business. A better pilot might be a computer geek who knows the model cold and is trained to quickly spot any signs that it is not working properly, in which case the best response would probably be to disengage rather than stay to fight.

The point is not to debate the relative merits of hotshots and computer geeks. Rather, it’s to demonstrate that models can be meaningfully evaluated only as a triplet: model, application, and user. A more-complete but more-complicated model may carry greater risks than a cruder one if the user is not qualified for the job. A case in point is the recent U.S. credit-rating crisis. It is arguably because so many investment managers misapplied a model that they incurred such huge losses on their portfolios of AAA-rated bonds, as the sidebar “Ratings: Not the Whole Picture” illustrates.

Ratings: Not the Whole Picture

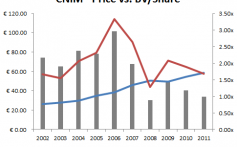

Among the biggest casualties of the 2008 U.S. credit rating crisis were the rating agencies themselves. They sustained significant damage to their credibility when many of the bonds to which they assigned AAA credit ratings ended up trading at deep discounts.

Investment managers who based their decisions on the ratings incurred huge losses. But was the rating model that the agencies used actually flawed? Historically, the model, which is based almost exclusively on estimates of the probability of an issuer’s defaulting, had worked pretty well, and the agencies—which arguably saw their role as simply evaluating the soundness of corporate and governmental financial practices—probably felt that it was adequate for their purpose.

You can, of course, debate whether the agencies’ limited view of their role was appropriate. You can certainly question the potential conflict of interest when issuers pay ratings agencies for consulting services and ratings. What is not debatable (certainly in hindsight) is that the rating model was not an adequate tool for managing a bond portfolio. That’s because probability of default is not the only factor determining the value of a bond and its risk. Other key factors include the likely amount of the investment that could be recovered in the event of default and the degree to which a borrower’s business prospects reflect the economic cycle.

The latter becomes particularly important in a time of crisis: If a bond defaults when the bondholder’s wealth is suffering for other reasons, it will have a worse impact on investors’ welfare than if the bond defaults when times are good. So common sense would say that an investor would pay less for a bond issued by a company in a procyclical business than for one issued by a company in a countercyclical business. (Finance theory says so as well.) Moreover, a bond with a low rate of investment recovery theoretically should trade at a discount to one with a high recovery rate and the same chance of default.
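Both effects can be written down in one standard textbook valuation, offered here as a sketch rather than as the agencies’ or the author’s model. Price a one-period bond with face value F by summing over economic states s (say, good and bad times), where π_s is the probability of state s, m_s is a stochastic discount factor that is larger in bad states (a dollar is worth more when wealth is suffering), d_s is the probability that the issuer defaults in state s, and R is the fraction of face value recovered on default:

```latex
P = \sum_{s} \pi_s\, m_s \left[ (1-d_s)\,F + d_s\,R\,F \right]
  = F\sum_{s}\pi_s m_s \;-\; (1-R)\,F\sum_{s}\pi_s m_s d_s
```

A default-probability rating sees only the overall default probability, the sum of π_s d_s. Holding that total fixed, a lower R lowers the price, and shifting defaults into bad states, where m_s is large, lowers it further; that is the discount on procyclical, low-recovery bonds described above.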

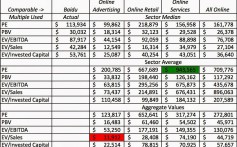

Those factors made no difference to the agencies, however, which based their ratings strictly on the probability of default. This meant that procyclical and countercyclical companies with the same probability of default got the same rating. Similarly, two bonds could get the same rating even though one was likely to give more back in the event of default than the other. As a result of these discrepancies, bonds with AAA ratings could and did trade at quite different prices in the bond market.

Now suppose that you’re an investment manager and your client wants her money invested in long-term bonds, rated AAA by Standard & Poor’s. As a conscientious manager, you would look for the cheapest AAA-rated bonds because these offer a better return for the same estimated risk. The trouble is that in doing so you would almost certainly create a portfolio heavily weighted in procyclical, low-recovery-rate bonds, whose values would deteriorate the most in an economic downturn, perhaps quite dramatically.
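The manager’s trap can be reproduced with a two-state valuation of the kind economists use. In this sketch, four hypothetical bonds all have a 2% overall default probability, so a rating based only on default probability would treat them as identical; they differ only in when they tend to default and how much they recover. The state probabilities, discount factors, and recovery rates are invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical two-state economy: state -> (probability, stochastic discount factor).
# The higher factor in the bad state means a dollar is worth more in hard times.
STATES = {"good": (0.8, 0.90), "bad": (0.2, 1.30)}

@dataclass
class Bond:
    name: str
    default_prob: dict[str, float]  # probability of default in each state
    recovery: float                 # fraction of face value recovered on default

    def overall_pd(self) -> float:
        """The single number a default-probability rating looks at."""
        return sum(p * self.default_prob[s] for s, (p, _) in STATES.items())

    def price(self, face: float = 100.0) -> float:
        """State-weighted, discounted expected payoff of a one-period bond."""
        total = 0.0
        for s, (p, m) in STATES.items():
            d = self.default_prob[s]
            total += p * m * ((1 - d) * face + d * self.recovery * face)
        return total

PROCYCLICAL = {"good": 0.0, "bad": 0.10}       # defaults only in bad times
COUNTERCYCLICAL = {"good": 0.025, "bad": 0.0}  # defaults only in good times

universe = [
    Bond("procyclical, low recovery", PROCYCLICAL, 0.10),
    Bond("procyclical, high recovery", PROCYCLICAL, 0.60),
    Bond("countercyclical, low recovery", COUNTERCYCLICAL, 0.10),
    Bond("countercyclical, high recovery", COUNTERCYCLICAL, 0.60),
]

# A manager who buys the cheapest "AAA" bonds first.
cheapest_first = sorted(universe, key=lambda b: b.price())
for bond in cheapest_first:
    print(f"{bond.name:32s} PD {bond.overall_pd():.1%}  price {bond.price():.2f}")
```

With these assumptions the procyclical, low-recovery bond is the cheapest and the countercyclical, high-recovery bond the dearest, even though all four carry the same default probability.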

The credit-rating debacle is thus a good example of how adopting a model not fit for your purpose (in this case, using a model for predicting the likelihood of default rather than one for valuing bonds to manage a portfolio) can result in disastrous decisions. Notably, investors who did use models built for bond valuation fared better than those relying principally on the ratings.

Check the Infrastructure

Finally, as we consider the consequences of an innovation, we need to recognize that its benefits and risks are in large measure determined not only by the choices people make about how to use it but also by the infrastructure into which it is introduced. Innovators and policy makers, in particular, must be mindful of this risk. Suppose, for instance, you want to introduce a high-speed passenger train to your railway network. If the tracks of the current system can’t handle high speeds and, either through ignorance or a high tolerance for risk, you choose to run the train at high speed, it will crash at some point and the passengers will pay a terrible price. What’s more, you’ll probably destroy the tracks, which means that everyone who uses the network will in some way be affected. People won’t be able to get to work, hospitals won’t get their new equipment, and so forth.

So the first task of those in charge of the railway is to ensure that the track can safely support the trains running on it. But what are they to do about your high-speed train? The simplest and most immediate response is to impose a safe speed limit. But if that is the only response, then there can be no progress in rail transportation—why bother developing a high-speed train that you will never operate at high speed?

A better solution is to begin upgrading the track and, at the same time, set limits on speed until the technological imbalance between the product and its infrastructure is resolved. Unfortunately, simple answers like that are not always so easy to come by in the real world, because few major innovations are such obvious winners as a high-speed train (and I’m sure there are people who question that innovation as well). The pace of innovation in some industries is very high, but so is the rate of failure. It is often quite infeasible, therefore, to change the infrastructure to accommodate every innovation that comes along. What’s more, the shelf life of successful innovations can be much shorter than that of a high-speed train, which means that to keep up you would be submitting your infrastructure to constant change.

The reality is that changes in infrastructure usually lag changes in products and services, and that imbalance can be a major source of risk. This is nothing new for the financial system. Consider the near collapse of the security-trade processing systems at many U.S. brokerage firms during the bull market of the late 1960s. Order-processing technology at the time was not capable of handling the unprecedented volume of transactions flooding into brokerage firms’ back offices. The backlog meant that firms and their customers had incomplete, and in many cases inaccurate, information about their financial positions. This breakdown caused some firms to founder.

A temporary solution was achieved through cooperative action by the major stock exchanges. For a period of time, they restricted trading hours to allow firms to catch up on their order processing and account reconciliation. The underlying problem was solved only after the firms and exchanges made massive investments in new technology for data processing. In this particular case, the infrastructure problem was resolved without government intervention. It is unlikely, however, that such intervention could be avoided today if a security-transactions problem of similar magnitude were to arise. The number of competing financial intermediaries and exchanges (including derivative-security exchanges) around the globe would make it extraordinarily difficult for efforts at private voluntary coordination to succeed.
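A simple queue sketch shows why volume alone could swamp the back offices and why restricting trading hours helped: whenever daily arrivals exceed processing capacity, the backlog grows every day, and it shrinks only once arrivals fall below capacity. All figures are hypothetical, chosen only for illustration.

```python
def backlog_path(daily_orders: list[int], capacity: int, start: int = 0) -> list[int]:
    """Unprocessed orders at the end of each day: the previous backlog plus
    new arrivals, minus what the back office can clear (never below zero)."""
    backlog = start
    path = []
    for arrivals in daily_orders:
        backlog = max(0, backlog + arrivals - capacity)
        path.append(backlog)
    return path

CAPACITY = 10_000  # hypothetical orders processed per day
boom = backlog_path([12_000] * 10, CAPACITY)  # volume exceeds capacity
# Shorter trading hours (hypothetically) cut daily volume below capacity.
recovery = backlog_path([7_000] * 10, CAPACITY, start=boom[-1])
print("backlog during the surge:  ", boom)
print("backlog with shorter hours:", recovery)
```

Until arrivals fall below capacity, no amount of effort clears the backlog; the lasting fix, as described above, was more capacity through new data-processing technology.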

Compounding the risks that arise from the imbalance between innovation in products and services and innovation in infrastructure is the fact that products and services continue evolving after they are launched, and this evolution is not independent of the infrastructure. Suppose a bank or broker introduces a customized product into the financial markets. As demand increases, the product or service is soon standardized and begins to be provided directly to users through an exchange market mechanism at vastly reduced costs.

That’s what happened 50 years ago when mutual funds became popular. Before that innovation, the only way private individuals could create a diversified market portfolio was by buying a selection of shares on an exchange. This was expensive and infeasible for all but a handful of large investors—transaction costs were often very high, and the desired stocks were frequently not available in small enough lot sizes to accommodate full diversification. The innovation of pooling intermediaries such as mutual funds allowed individual investors to achieve significantly better-diversified portfolios. Subsequently, new innovations allowed futures contracts to be created on various stock indexes, both domestic and foreign. These exchange-traded contracts further reduced costs, improved domestic diversification, and provided expanded opportunities for international diversification. They gave the investor still greater flexibility in selecting leverage and controlling risk. In particular, index futures made feasible the creation of exchange-traded options on diversified portfolios. Most recently, intermediaries have begun to use equity return swaps to create custom contracts that specify the stock index, the investment time horizon, and even the currency mix for payments.
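A textbook formula helps show what pooling bought small investors. For an equally weighted portfolio of n stocks, each with volatility σ and the same pairwise correlation ρ, portfolio variance is σ²/n + (1 − 1/n)ρσ². The sketch below pairs that formula with a hypothetical household that must buy whole 100-share lots; the volatility, correlation, budget, share price, and fund size are all assumptions.

```python
import math

def portfolio_volatility(n: int, sigma: float, rho: float) -> float:
    """Volatility of an equally weighted portfolio of n stocks, each with
    volatility sigma and pairwise correlation rho (a textbook simplification)."""
    variance = sigma ** 2 / n + (1 - 1 / n) * rho * sigma ** 2
    return math.sqrt(variance)

SIGMA, RHO = 0.30, 0.20          # hypothetical single-stock volatility and correlation
BUDGET = 20_000                  # hypothetical household savings, in dollars
LOT_SIZE, SHARE_PRICE = 100, 50  # hypothetical round lot and average share price

# How many distinct stocks the household can hold if it must buy whole lots.
n_direct = BUDGET // (LOT_SIZE * SHARE_PRICE)
n_fund = 100  # hypothetical number of holdings in a pooled fund

print(f"direct: {n_direct} stocks, volatility {portfolio_volatility(n_direct, SIGMA, RHO):.1%}")
print(f"fund:   {n_fund} stocks, volatility {portfolio_volatility(n_fund, SIGMA, RHO):.1%}")
print(f"floor (n -> infinity): {math.sqrt(RHO) * SIGMA:.1%}")
```

Under these assumptions the household can afford only four lots, leaving it noticeably more volatile than a 100-stock pooled fund, which sits close to the floor that no amount of diversification removes.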

Thus, the institutional means of stock diversification for households was initially markets for individual company shares. Through innovation, intermediaries such as mutual funds replaced them. Then, with stock-index futures, investors could once again tap the markets directly. Now we are seeing innovation by intermediaries with exchange-traded funds (ETFs), which permit diversified portfolios to be traded on exchanges.

The risk of this kind of dynamic is, of course, that it becomes very difficult to identify at any given time exactly what changes in the infrastructure are needed. Even if you could make changes to an infrastructure to coincide with a new product’s launch, you might find that within a very short time those changes have become irrelevant because the product is now being sold by different people through different channels to different users who need it for different purposes. To complicate matters, infrastructural changes can generate their own unintended consequences.

An adequate assessment of the risks involved with an innovation requires a careful modeling of consequences. But our ability to create models rich enough to capture all dimensions of risk is limited. Innovations are always likely to have unintended consequences, and models are by their very nature incomplete representations of complex reality. Models are also constrained by their users’ proficiency, and they can easily be misapplied. Finally, we must recognize that many of the risks of an innovation stem from the infrastructure that surrounds it. It’s particularly difficult to think through the infrastructural consequences of innovation in complex, fast-evolving industries such as finance and IT. In the end, any innovation involves a leap into the unknowable. If we are to make progress, however, that’s a fact we need to accept and to manage.