Data Mining For Investors

Posted on: 21 April 2015

Report by Sudeshna Basu, Georgia State University, Fall 1997

Over the past decade or so, businesses have accumulated huge amounts of data in large databases. These stockpiles mainly contain customer data, but the data’s hidden value—the potential to predict business trends and customer behavior—has largely gone untapped.

To convert this potential value into strategic business information, many companies are turning to data mining, a growing technology based on a new generation of hardware and software. Data mining combines techniques including statistical analysis, visualization, decision trees, and neural networks to explore large amounts of data and discover relationships and patterns that shed light on business problems. In turn, companies can use these findings for more profitable, proactive decision making and competitive advantage. Although data mining tools have been around for many years, data mining became feasible in business only after advances in hardware and software technology came about.

Hardware advances—reduced storage costs and increased processor speed—paved the way for data mining’s large-scale, intensive analyses. Inexpensive storage also encouraged businesses to collect data at a high level of detail, consolidated into records at the customer level.

Software advances continued data mining’s evolution. With the advent of the data warehouse, companies could successfully analyze their massive databases as a coherent, standardized whole. To exploit these vast stores of data in the data warehouse, new exploratory and modeling tools—including data visualization and neural networks—were developed. Finally, data mining incorporated these tools into a systematic, iterative process.

SAS Institute understands the key issues and challenges facing businesses today—including the need to control costs, build up customer relationships, and create and sustain a competitive advantage.

SAS Institute defines data mining as the process of selecting, exploring, and modeling large amounts of data to uncover previously unknown patterns for a business advantage. As a sophisticated decision support tool, data mining is a natural outgrowth of a business’s investment in data warehousing. The data warehouse provides a stable, easily accessible repository of information to support dynamic business intelligence applications.

As the next step, organizations employ data mining to explore and model relationships in the large amounts of data in the data warehouse. Without the pool of validated and scrubbed data that a data warehouse provides, the data mining process requires considerable additional effort to pre-process data.

Although the data warehouse is an ideal source of data for data mining activities, the Internet can also serve as a data source. Companies can take data from the Internet, mine the data, and distribute the findings and models throughout the company via an Intranet.

There’s gold in your data, but you can’t see it. It may be as simple (and wealth-producing) as the realization that baby-food buyers are probably also diaper purchasers. It may be as profound as a new law of nature. But no human who’s looked at your data has seen this hidden gold. How can you find it?

Data mining lets the power of computers do the work of sifting through your vast data stores. Tireless and relentless searching can find the tiny nugget of gold in a mountain of data slag.

In The Data Gold Rush, Sara Reese Hedberg shows the already wide variety of uses for the relatively young practice of data mining. From analyzing customer purchases to analyzing Supreme Court decisions, from discovering patterns in health care to discovering galaxies, data mining has an enormous breadth of applications. Large corporations are rushing to realize the potential payoffs of data mining, both in the data itself and in marketing their proprietary tools.

In A Data Miner’s Tools, Karen Watterson explains the three categories of software to perform data mining. Query-and-reporting tools, in vastly simplified and easier-to-use forms, require close human direction and data laid out in databases or other special formats. Multidimensional analysis (MDA) tools demand less human guidance but still need data in special forms. Intelligent agents are virtually autonomous, are capable of making their own observations and conclusions, and can handle data as free-form as paragraphs of text.

Data Mining Dynamite by Cheryl D. Krivda shows how to facilitate the data-mining process. Data is handled far faster after it has been cleansed of unnecessary fields and stored in more convenient forms. Housing data in data warehouses reduces the load on production mainframes and supports client/server analysis. Parallel computing speeds the search process with multiple simultaneous queries. And any activity handling this volume of data requires consideration of physical storage options.
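Krivda's point about parallelism can be illustrated with a partition-and-merge sketch. The data is split into partitions, the same counting query runs over each partition at the same time, and the partial results are merged. The toy transactions and function names are hypothetical. Threads are used only to show the structure. On CPython, CPU-bound work like this would need separate processes or a parallel database engine to actually run faster.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy data: each transaction is a list of item names.
TRANSACTIONS = [
    ["baby food", "diapers", "milk"],
    ["bread", "milk"],
    ["baby food", "diapers"],
    ["diapers", "beer"],
    ["bread", "butter", "milk"],
    ["baby food", "diapers", "wipes"],
]


def count_items(partition):
    """Count how many transactions in one partition contain each item."""
    counts = Counter()
    for transaction in partition:
        counts.update(set(transaction))  # count each item once per basket
    return counts


def parallel_item_counts(transactions, n_partitions=3):
    """Split the data, run the same count on each partition concurrently, then merge."""
    # Round-robin partitioning: partition i gets transactions i, i+n, i+2n, ...
    partitions = [transactions[i::n_partitions] for i in range(n_partitions)]
    total = Counter()
    with ThreadPoolExecutor(max_workers=n_partitions) as pool:
        for partial in pool.map(count_items, partitions):
            total.update(partial)  # merge step: add the partial counts together
    return total


if __name__ == "__main__":
    print(parallel_item_counts(TRANSACTIONS).most_common(3))
```

The merged result matches a single sequential pass over all the data. That equivalence is what makes this kind of partitioning safe for simple counts.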

In the short term, the results of data mining will be profitable, if mundane, business-related consequences. Micro-marketing campaigns will explore new niches. Advertising will target potential customers with new precision.

In the not-too-long term, data mining may become as common and easy to use as E-mail. We may direct our tools to find the best airfare to the Grand Canyon, root out a phone number for a long-lost classmate, or find the best prices on lawn mowers. The software will figure out where to look, how to evaluate what it finds, and when to quit. Our knowledge helpers may become as indispensable as the telephone.

But it’s the long-term prospects of data mining that are truly breathtaking. Imagine intelligent agents being turned loose on medical-research data or on subatomic-particle information. Computers may reveal new treatments for diseases or new insights into the nature of the universe. We may well see the day when the Nobel Prize for a great discovery is awarded to a search algorithm.

The amount of information stored in databases is exploding. From zillions of point-of-sale transactions and credit card purchases to pixel-by-pixel images of galaxies, databases are now measured in gigabytes and terabytes. In today’s fiercely competitive business environment, companies need to rapidly turn those terabytes of raw data into significant insights to guide their marketing, investment, and management strategies.

It would take many lifetimes for an analyst to pore over 2 million books (the equivalent of a terabyte) to glean important trends. But analysts have to. For instance, every day Wal-Mart, a chain of over 2,000 retail stores, uploads 20 million point-of-sale transactions to an AT&T massively parallel system with 483 processors running a centralized database. At corporate headquarters, they want to know trends down to the last Q-Tip.
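The figures in this paragraph imply a few simple ratios. The sketch below assumes a decimal terabyte (10^12 bytes). It also treats "over 2000" stores as exactly 2,000, so the per-store figure it prints is an upper bound.

```python
# Back-of-the-envelope ratios from the Wal-Mart paragraph.
TERABYTE_BYTES = 10**12          # assumption: decimal terabyte (a binary TB is 2**40 bytes)
BOOKS_PER_TERABYTE = 2_000_000
STORES = 2_000                   # "over 2000", so the per-store figure is an upper bound
DAILY_TRANSACTIONS = 20_000_000

bytes_per_book = TERABYTE_BYTES // BOOKS_PER_TERABYTE
transactions_per_store = DAILY_TRANSACTIONS // STORES

print(f"{bytes_per_book:,} bytes per book")                           # 500,000 bytes per book
print(f"{transactions_per_store:,} transactions per store per day")   # 10,000 transactions per store per day
```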

Luckily, computer techniques are now being developed to assist analysts in their work. Data mining (DM), or knowledge discovery, is the computer-assisted process of digging through and analyzing enormous sets of data and then extracting the meaning of the data nuggets. DM is being used both to describe past trends and to predict future trends.

Mining and Refining Data

Experts involved in significant DM efforts agree that the DM process must begin with the business problem. Since DM is really providing a platform or workbench for the analyst, understanding the job of the analyst logically comes first. Once the DM system developer understands the analyst’s job, the next step is to understand those data sources that the analyst uses and the experience and knowledge the analyst brings to the evaluation.

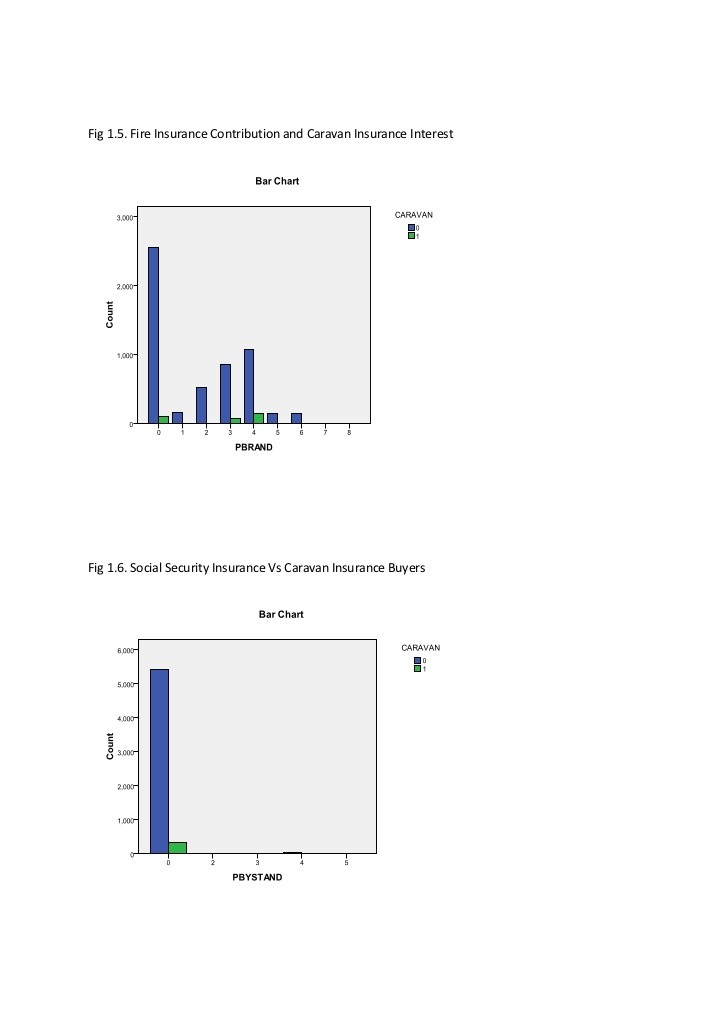

The DM process generally starts with collecting and cleaning information, then storing it, typically in some type of data warehouse or datamart (see figure below). But in some of the more advanced DM work, such as that at AT&T Bell Labs, advanced knowledge-representation tools can logically describe the contents of databases themselves, then use this mapping as a meta-layer to the data. Data sources are typically flat files of point-of-sale transactions and databases of all flavors. There are experiments underway in mining other data sources, such as IBM’s project in Paris to analyze text straight off the newswires.
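The collect, clean, and store step can be sketched in three moves:

* drop fields the analysis does not need;
* discard records that are incomplete or malformed;
* consolidate what remains into one record per customer, as in the customer-level consolidation described earlier.

The field names, sample rows, and validation rules are hypothetical. A real warehouse load would apply many more checks.

```python
from collections import defaultdict

# Hypothetical raw point-of-sale rows as they might arrive in a flat file.
RAW_ROWS = [
    {"customer_id": "C1", "item": "diapers", "amount": "12.50", "register": "7"},
    {"customer_id": "C2", "item": "milk", "amount": "2.10", "register": "3"},
    {"customer_id": "", "item": "bread", "amount": "1.95", "register": "3"},      # no customer
    {"customer_id": "C1", "item": "baby food", "amount": "n/a", "register": "7"},  # bad amount
    {"customer_id": "C1", "item": "baby food", "amount": "4.00", "register": "2"},
]

# Fields the analysis needs; everything else (e.g. "register") is dropped.
KEEP_FIELDS = ("customer_id", "item", "amount")


def clean(rows):
    """Yield trimmed rows, skipping those with no customer or an unparseable amount."""
    for row in rows:
        if not row.get("customer_id"):
            continue
        try:
            amount = float(row["amount"])
        except ValueError:
            continue
        cleaned = {field: row[field] for field in KEEP_FIELDS}
        cleaned["amount"] = amount
        yield cleaned


def consolidate(rows):
    """Roll cleaned rows up into one record per customer."""
    customers = defaultdict(lambda: {"total_spent": 0.0, "items": set()})
    for row in rows:
        record = customers[row["customer_id"]]
        record["total_spent"] += row["amount"]
        record["items"].add(row["item"])
    return dict(customers)


if __name__ == "__main__":
    for customer_id, record in consolidate(clean(RAW_ROWS)).items():
        print(customer_id, round(record["total_spent"], 2), sorted(record["items"]))
```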

THE DATA MINING PROCESS

DM tools search for patterns in data. This search can be performed automatically by the system (a bottom-up dredging of raw facts to discover connections) or interactively with the analyst asking questions (a top-down search to test hypotheses). A range of computer tools — such as neural networks, rule-based systems, case-based reasoning, machine learning, and statistical programs — either alone or in combination can be applied to a problem.
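The two search directions can be made concrete with hypothetical market-basket data that echoes the baby-food and diapers example earlier in this report.

* **Bottom-up:** the first function lists every item pair that co-occurs often enough, without being told what to look for.
* **Top-down:** the second function checks one analyst-supplied hypothesis by computing its confidence.

Simple pair counting stands in here for the richer techniques the paragraph lists, such as neural networks and rule-based systems. The data, threshold, and function names are illustrative assumptions.

```python
from collections import Counter
from itertools import combinations

# Hypothetical market baskets: one set of items per transaction.
BASKETS = [
    {"baby food", "diapers", "milk"},
    {"bread", "milk"},
    {"baby food", "diapers"},
    {"diapers", "beer"},
    {"bread", "butter", "milk"},
    {"baby food", "diapers", "wipes"},
]


def bottom_up(baskets, min_count=2):
    """Dredge the data: return every item pair that co-occurs at least min_count times."""
    pair_counts = Counter()
    for basket in baskets:
        pair_counts.update(combinations(sorted(basket), 2))
    return {pair: n for pair, n in pair_counts.items() if n >= min_count}


def top_down(baskets, antecedent, consequent):
    """Test the hypothesis 'antecedent buyers also buy consequent'.

    Returns the rule's confidence: the share of baskets containing the
    antecedent that also contain the consequent (0.0 if none contain it).
    """
    with_antecedent = [b for b in baskets if antecedent in b]
    if not with_antecedent:
        return 0.0
    hits = sum(1 for b in with_antecedent if consequent in b)
    return hits / len(with_antecedent)


if __name__ == "__main__":
    print(bottom_up(BASKETS))
    print(top_down(BASKETS, "baby food", "diapers"))
```

The bottom-up pass surfaces the baby-food/diapers pair on its own. The top-down check reports that every basket containing baby food also contains diapers. Calling `top_down(BASKETS, "diapers", "baby food")` gives 0.75, a reminder that such rules are not symmetric.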

Typically with DM, the search process is iterative, so that as analysts review the output, they form a new set of questions to refine the search or elaborate on some aspect of the findings. Once the iterative search process is complete, the data-mining system generates report findings. It is then the job of humans to interpret the results of the mining process and to take action based on those findings.

AT&T, A.C. Nielsen, and American Express are among the growing ranks of companies implementing DM techniques for sales and marketing. These systems are crunching through terabytes of point-of-sale data to aid analysts in understanding consumer behavior and promotional strategies. Why? To increase profitability, of course.

Similarly, financial analysts are plowing through vast sets of financial records, data feeds, and other information sources in order to make investment decisions. Health-care organizations are examining medical records in order to understand trends of the past; they hope this information can help reduce their costs in the future. Major corporations such as General Motors, GTE, Lockheed, Microsoft, and IBM all have R&D groups working on proprietary advanced DM techniques and applications.

Siftware

Hardware and software vendors are extolling the DM capabilities of their products — whether they have true DM capabilities or not. This hype cloud is creating much confusion about data mining. In reality, data mining is the process of sifting through vast amounts of information in order to extract meaning and discover new knowledge.

It sounds simple, but the task of data mining has quickly overwhelmed traditional query-and-report methods of data analysis, creating the need for new tools to analyze databases and data warehouses intelligently. The products now offered for DM range from on-line analytical processing (OLAP) tools, such as Essbase (Arbor Software) and DSS Agent (MicroStrategy), to DM tools that include some AI techniques, such as IDIS (Information DIscovery System, from IntelligenceWare) and the Database Mining Workstation (HNC Software), to the new vertically targeted advanced DM tools, such as those from AT&T Global Information Solutions.

Many people argue that the OLAP tools are not true mining tools; they’re fancy query tools, they say. Since these programs perform sophisticated data access and analysis by rolling up numbers along multiple dimensions, some analysts still include them in the category of top-down mining tools. The market has yet to see much in the way of more-advanced mining tools, although the spigot is being turned on by application-specific DM tools from AT&T, Lockheed, and GTE.
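OLAP tools roll up numbers along multiple dimensions. In essence, this means grouping facts by the dimensions you keep and summing over the rest. The sketch below is a toy, in-memory illustration with hypothetical data and names. It is not how Essbase or DSS Agent work internally; production OLAP servers typically precompute many such aggregates over very large cubes.

```python
from collections import defaultdict

# Hypothetical sales facts: (region, month, product, units); units is the last field.
SALES = [
    ("East", "Jan", "diapers", 120),
    ("East", "Jan", "milk", 80),
    ("East", "Feb", "diapers", 100),
    ("West", "Jan", "diapers", 90),
    ("West", "Feb", "milk", 60),
    ("West", "Feb", "diapers", 70),
]

DIMENSIONS = ("region", "month", "product")


def roll_up(facts, keep):
    """Sum units over every dimension not listed in `keep`.

    roll_up(SALES, ("region",)) collapses month and product into one total
    per region; roll_up(SALES, ()) collapses everything into a grand total.
    """
    indexes = [DIMENSIONS.index(d) for d in keep]
    totals = defaultdict(int)
    for fact in facts:
        key = tuple(fact[i] for i in indexes)
        totals[key] += fact[-1]
    return dict(totals)


if __name__ == "__main__":
    print(roll_up(SALES, ("region",)))           # {('East',): 300, ('West',): 220}
    print(roll_up(SALES, ("region", "month")))
```

Each call answers a question the analyst already has in mind. That is why critics class such tools as fancy query tools rather than miners.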

One major DM trend is the move toward powerful application-specific mining tools. “There is a trade-off in the generality of data-mining tools and ease of use,” observes Gregory Piatetsky-Shapiro, principal investigator of the Knowledge Discovery in Databases Project at GTE Laboratories. “General tools are good for those who know how to use them, but they really require lots of knowledge to use them.”

AT&T, for example, recently introduced Sales & Marketing Solution Packs to mine data warehouses. They’re tailored to vertical markets in retail, financial, communications, consumer-goods manufacturing, transportation, and government. These programs provide about 70 percent of the solution, with final tailoring required to fit the individual client’s needs, AT&T says. Complete with AT&T parallel hardware, software, and some services, Solution Packs start at around $250,000.

Both GTE and Lockheed Martin may shortly follow suit. GTE is already entertaining proposals to turn its Health-KEFIR (KEy FIndings Reporter) into a commercial product. The Artificial Intelligence Research group at Lockheed Martin has been investigating and developing DM tools for the past 10 years. Recently, the Lockheed group built an internal application-development tool, called Recon, that generalizes their DM techniques, then applied it to application-specific problems. A beta version of the first vertical packages — for finance and marketing — will be available in 1996. The system has an open architecture, running on Unix platforms and massively parallel supercomputers. It interfaces with existing relational database management systems, financial databases, proprietary databases, data feeds, spreadsheets, and ASCII files.

In a similar vein, several neural network tools have been customized. Customer Insight Co., for instance, has built an interface to link its Analytix marketing software with HNC Software’s neural network-based Database Mining Workstation, creating a marketing DM hybrid. HNC Software’s Falcon detects credit-card fraud; according to HNC, the program is watching millions of charge accounts.

Invasion of the Data Snatchers

The need for DM tools is growing as fast as data stores swell. More-sophisticated DM products are beginning to appear that perform bottom-up as well as top-down mining. The day is probably not too far off when intelligent agent technology will be harnessed for the mining of vast public on-line sources, traversing the Internet, searching for information, and presenting it to the human user. Microelectronics and Computer Technology Corp. (MCC, Austin, TX) has been pioneering work in this area, developing a platform, called Carnot, for its consortium members. Carnot-based agents have been successfully applied to both top-down and bottom-up DM of distributed heterogeneous databases at Eastman Chemical.

“Data mining is evolving from answering questions about what has happened and why it happened,” observes Mark Ahrens, director of custom software sales at A.C. Nielsen. “The next generation of DM is focusing on answering the question ‘How can I fix it?’ and making very specific recommendations. That’s our focus now, our Holy Grail.” Meanwhile, the gold rush is on.

Data mining is the search for relationships and global patterns that exist in large databases but are ‘hidden’ among the vast amounts of data, such as a relationship between patient data and medical diagnoses. These relationships represent valuable knowledge about the database and the objects in it. If the database is a faithful mirror, they also represent knowledge about the real world it records. One of the main problems for data mining is that the number of possible relationships is very large, which rules out finding the correct ones by simply validating each of them. Hence, we need intelligent search strategies, such as those developed in machine learning. Another important problem is that information in data objects is often corrupted or missing. Hence, statistical techniques should be applied to estimate the reliability of the discovered relationships.
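Both problems in this paragraph can be quantified.

* **Search space:** the sketch counts how many candidate rules of the form X → Y exist over n items, using the standard closed form 3^n - 2^(n+1) + 1. This shows why validating each one is infeasible.
* **Reliability:** it then uses a Wilson score interval, one common choice among several, to compare a rule backed by 3 observations with the same rule backed by 300.

The function names and the choice of interval are assumptions, not techniques the report prescribes.

```python
import math


def candidate_rule_count(n_items):
    """Number of rules X -> Y where X and Y are disjoint, non-empty item sets.

    Each item goes to X, to Y, or to neither (3**n ways); subtract the
    assignments where X is empty or Y is empty (2**n each), then add back
    the single assignment where both are empty.
    """
    return 3**n_items - 2**(n_items + 1) + 1


def wilson_interval(successes, trials, z=1.96):
    """Approximate 95% Wilson score interval for a proportion such as rule confidence."""
    if trials == 0:
        raise ValueError("need at least one trial")
    p = successes / trials
    z2 = z * z
    denom = 1 + z2 / trials
    center = (p + z2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z2 / (4 * trials**2)) / denom
    return max(0.0, center - half), min(1.0, center + half)


if __name__ == "__main__":
    for n in (6, 20, 50):
        print(n, "items ->", f"{candidate_rule_count(n):,}", "candidate rules")
    # Same observed confidence (100%), very different reliability:
    print(wilson_interval(3, 3))      # rule held in all of 3 baskets
    print(wilson_interval(300, 300))  # rule held in all of 300 baskets
```

The search space grows quickly. Six items already give 602 candidate rules, and 20 items give about 3.5 billion. Support matters just as much: a rule that held in 3 of 3 baskets has an interval of roughly 0.44 to 1.0, while one that held in 300 of 300 has a lower bound near 0.99.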

TOOLS AND TECHNIQUES

Data Visualization

Data visualization software is one of the most versatile tools for data mining exploration. It enables you to visually interpret complex patterns in multidimensional data. By viewing data summarized in multiple graphical forms and dimensions, you can uncover trends and spot outliers intuitively and immediately.

In the data mining process, visualization tools help you explore data before modeling—and verify the results of other data mining techniques. Visualization tools are particularly useful for detecting patterns found in only small areas of the overall data.
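Graphical tools are the real subject here. Still, the core idea, summarizing values so that an unusual one stands out, fits in a few lines of plain text. The sketch below bins hypothetical per-customer purchase totals into a text histogram. The lone bar far from the main mass is the kind of outlier a chart makes obvious at a glance. The data and function name are illustrative assumptions, and a real visualization tool would add interactive, multidimensional views.

```python
from collections import Counter

# Hypothetical per-customer purchase totals; one value is far from the rest.
PURCHASE_TOTALS = [12, 15, 18, 22, 25, 27, 31, 33, 35, 38, 41, 250]


def text_histogram(values, bin_width, bar_char="#"):
    """Return one line per bin (including empty bins) with a bar of bar_char per value."""
    if not values:
        return []
    bins = Counter(int(v // bin_width) for v in values)
    lines = []
    for b in range(min(bins), max(bins) + 1):
        low = b * bin_width
        lines.append(f"{low:>6}-{low + bin_width:<6} {bar_char * bins[b]}")
    return lines


if __name__ == "__main__":
    print("\n".join(text_histogram(PURCHASE_TOTALS, 50)))
```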