Life Analytics Decision Tree Interpretation

Posted on: 16 March, 2015


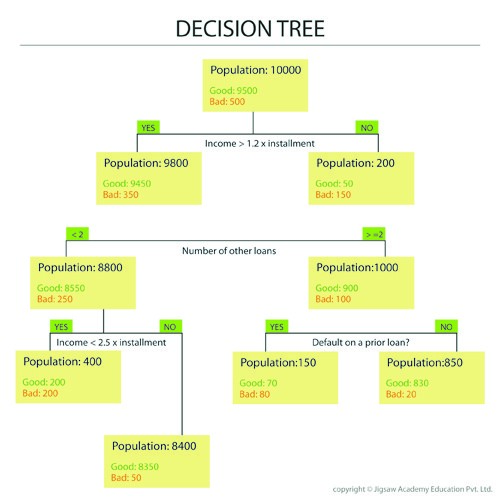

On the previous post i went through some basic steps required for predicting the price changes of a specific stock of the Greek stock exchange market. As a result of this process, the following decision tree was generated :

To interpret a decision tree, the analyst starts at the root and follows the branches until a leaf node is reached. For example, one rule that can be extracted from the decision tree above is:

IF aseStockExchange > 0.360 AND aseStockExchange > 1.985 THEN price>+2

This rule is found by starting at the root of the tree, moving down the left branch and then following the right sub-branch. In the same way, an analyst can extract the remaining rules identified by the decision tree.
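The root-to-leaf reading described above can be sketched in a few lines of Python. This is a minimal illustration, not the tool used in the original analysis: the `Node`/`Leaf` classes, the `extract_rules`, `matches` and `precision_recall` helpers, and the labelled sample are all hypothetical. Only the two `aseStockExchange` thresholds (0.360 and 1.985) and the `price>+2` leaf come from the post; the other leaves are placeholders. Since rules are usually judged by their precision (share of covered rows whose label matches the rule) and recall (share of rows with that label that the rule covers), the sketch scores each extracted rule on a made-up sample as well.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    label: str


@dataclass
class Node:
    # Hypothetical representation: each internal node tests `feature > threshold`.
    feature: str
    threshold: float
    above: "Tree"  # branch followed when value > threshold
    below: "Tree"  # branch followed when value <= threshold


Tree = Union[Node, Leaf]


def extract_rules(tree, conditions=()):
    """Yield (conditions, label) for every root-to-leaf path."""
    if isinstance(tree, Leaf):
        yield list(conditions), tree.label
        return
    yield from extract_rules(tree.above, conditions + ((tree.feature, ">", tree.threshold),))
    yield from extract_rules(tree.below, conditions + ((tree.feature, "<=", tree.threshold),))


def matches(row, conditions):
    """True if the row satisfies every condition of a rule."""
    for feature, op, threshold in conditions:
        value = row[feature]
        if (op == ">" and value <= threshold) or (op == "<=" and value > threshold):
            return False
    return True


def precision_recall(rows, labels, conditions, label):
    """Precision and recall of the rule `IF conditions THEN label`."""
    covered = [y for row, y in zip(rows, labels) if matches(row, conditions)]
    true_pos = sum(1 for y in covered if y == label)
    actual = sum(1 for y in labels if y == label)
    precision = true_pos / len(covered) if covered else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall


# Only the two aseStockExchange splits and the "price>+2" leaf come from the post;
# the "other" leaves are placeholders.
tree = Node("aseStockExchange", 0.360,
            above=Node("aseStockExchange", 1.985, above=Leaf("price>+2"), below=Leaf("other")),
            below=Leaf("other"))

# Made-up labelled sample, for illustration only.
rows = [{"aseStockExchange": v} for v in (2.4, 2.1, 1.0, -0.5, 3.0)]
labels = ["price>+2", "price>+2", "price>+2", "other", "price>+2"]

for conditions, label in extract_rules(tree):
    p, r = precision_recall(rows, labels, conditions, label)
    rule = " AND ".join(f"{f} {op} {t:.3f}" for f, op, t in conditions)
    print(f"IF {rule} THEN {label}  (precision={p:.2f}, recall={r:.2f})")
```

The first printed line reproduces the rule from the post, `IF aseStockExchange > 0.360 AND aseStockExchange > 1.985 THEN price>+2`, here with precision 1.00 and recall 0.75 on the toy sample. Note that the second condition implies the first, so the rule could be simplified to its last test.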

When using decision tree learners or rule extractors, analysts usually record the precision and recall of each rule; these are not shown in the decision tree above. For simplicity I will omit this information and focus on the insights the analysis provides. Decision trees have the following two qualities:

1) They provide easy model interpretation

and

2) They show us the relative importance of the variables

When confronted with many variables, analysts often start by building a decision tree and then feed the variables it selected into methods that struggle with a large number of inputs, such as neural networks. However, decision trees can perform worse on some problems that are not linearly separable, in particular when the class depends on an interaction between variables, since each split tests only one variable at a time. For the purpose of our example, though, a decision tree 'explains' the behavior of the stock nicely.
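To make this limitation concrete, here is a small sketch (my own illustration, not part of the original analysis) on the classic XOR problem, which is not linearly separable. It computes the largest Gini impurity decrease that a single axis-aligned split can achieve. Gini impurity is only one common split criterion and is an assumption here; the post does not say which criterion its tree learner used. The helper names `gini` and `best_single_split_gain` are hypothetical.

```python
from itertools import product


def gini(labels):
    """Gini impurity of a list of binary (0/1) labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    p = sum(labels) / n
    return 1.0 - p ** 2 - (1 - p) ** 2


def best_single_split_gain(points, labels):
    """Largest Gini decrease achievable by one axis-aligned split x_j <= t."""
    parent = gini(labels)
    best = 0.0
    for j in range(len(points[0])):
        values = sorted({p[j] for p in points})
        # Candidate thresholds lie halfway between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [y for p, y in zip(points, labels) if p[j] <= t]
            right = [y for p, y in zip(points, labels) if p[j] > t]
            weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            best = max(best, parent - weighted)
    return best


points = list(product([0, 1], repeat=2))
xor_labels = [a ^ b for a, b in points]  # 1 when exactly one input is 1
and_labels = [a & b for a, b in points]  # linearly separable for comparison

print(best_single_split_gain(points, xor_labels))  # 0.0
print(best_single_split_gain(points, and_labels))  # 0.125
```

On XOR the best first split gains nothing, so a greedy learner gets no signal about which variable to test first, even though two levels of splits could represent the problem. On the linearly separable AND problem the same search finds a useful split immediately.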

It should be noted that during the Feature Selection analysis of our stock example we found that the features 'aseStockExchange' and 'DAX' are important. Other features, such as 'xaaPersonalHouseProducts', were flagged as important by the Feature Selection algorithm but were not used in the decision tree. Different feature selection methods produce different results (which is admittedly not very reassuring), but most methods usually agree on a common subset of features with high predictive value.
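One simple way to find the common subset that several methods agree on is a majority vote over their selections. In the sketch below the three method names and the features each one selects are made up for illustration; they are not results from this analysis. The `consensus` helper is likewise hypothetical.

```python
from collections import Counter

# Hypothetical outputs of three feature selection methods (illustrative only).
selections = {
    "method_a": {"aseStockExchange", "DAX", "xaaPersonalHouseProducts"},
    "method_b": {"aseStockExchange", "DAX", "xaaLeisure"},
    "method_c": {"aseStockExchange", "DAX", "xaaBenefit"},
}


def consensus(selections, min_votes):
    """Features chosen by at least `min_votes` of the methods."""
    votes = Counter(f for chosen in selections.values() for f in chosen)
    return {f for f, n in votes.items() if n >= min_votes}


majority = len(selections) // 2 + 1  # 2 of 3 methods
print(sorted(consensus(selections, majority)))  # ['DAX', 'aseStockExchange']
```

Raising `min_votes` to the number of methods gives the strict intersection; lowering it trades agreement for a larger candidate set.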

The importance of an attribute can be read from the level at which it appears in the decision tree (the closer to the root, the greater its predictive power). So in our example, 'aseStockExchange' is the most important feature, since it is the attribute on which the tree splits first, while 'xaaLeisure' and 'xaaBenefit' appear to be less important.
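Ranking attributes by level can be automated with a breadth-first walk that records the shallowest level at which each attribute is tested. In this sketch the nested-tuple tree format and the `shallowest_level` helper are hypothetical; only the two `aseStockExchange` splits come from the post, and the rest of the shape (including the `0.0` placeholder thresholds) was chosen to mirror the ordering described in the text.

```python
from collections import deque

# Hypothetical tree: internal nodes are (feature, threshold, above, below); leaves are strings.
tree = ("aseStockExchange", 0.360,
        ("aseStockExchange", 1.985,
         "price>+2",
         ("DAX", 0.0, "leaf", ("xaaLeisure", 0.0, "leaf", "leaf"))),
        ("DAX", 0.0, "leaf", ("xaaBenefit", 0.0, "leaf", "leaf")))


def shallowest_level(tree):
    """Map each feature to the shallowest level (root = 0) at which it is tested."""
    levels = {}
    queue = deque([(tree, 0)])  # breadth-first, so the first visit is the shallowest
    while queue:
        node, level = queue.popleft()
        if isinstance(node, str):  # leaf
            continue
        feature, _threshold, above, below = node
        levels.setdefault(feature, level)
        queue.append((above, level + 1))
        queue.append((below, level + 1))
    return levels


levels = shallowest_level(tree)
ranking = sorted(levels, key=levels.get)
print(ranking)  # ['aseStockExchange', 'DAX', 'xaaBenefit', 'xaaLeisure']
```

Depth is only a rough heuristic. An alternative is impurity-based importance, such as scikit-learn's `feature_importances_` on a fitted `DecisionTreeClassifier`, which weighs every split by how much it reduces the split criterion rather than by position alone.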