Practical Guile

Posted on: 16 March, 2015

I found out about Dr Tucker Balch's MOOC (Computational Investing, Part 1) from Lin Xinshan. This is my personal review of the recently concluded 4th offering of the course.

This is the first MOOC that I've actually completed (out of the many I've enrolled in on a whim). I think it's a combination of:

- My strong interest in the subject matter

- The excellent pacing of the course materials and assignments

- The timely release of the course textbook: What Hedge Funds Really Do

The course consists of video lectures, graded assignments and a forum on Piazza. The video lectures mostly mirror the content of the textbook, so I actually found them rather tedious.

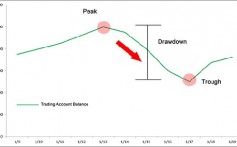

The textbook does a good job of providing a palatable introduction to quantitative portfolio management. It covers the rationale behind constructing a portfolio with CAPM and the various measures for evaluating its performance (e.g. against risk-free Treasuries or a broad market index like the S&P 500). Each chapter also includes short but interesting biographies of prominent traders who were able to achieve alpha (market-beating returns).

I would have liked the book to discuss the quantitative aspects of measuring portfolio performance in more detail though:

- It mentions the Sharpe ratio (which treats both upward and downward deviations in returns as risk) and the Sortino ratio (which considers only downside deviations), but focuses on the former for the entire book. Why would I use the Sharpe ratio instead of the Sortino ratio when I'm primarily interested in downside risk?

- There is a chapter dedicated to Event Studies, which measure the effect of an event on equity prices over a period of days before and after it. The authors suggest trying out candidate events in a simulator and backtesting them for fitness. I take issue with the method as described in the book, as it is ripe for curve fitting. I expected at least a reference to methods for mitigating the risk of overfitting event rules to past data, such as segregating the data into In Sample and Out Of Sample sets and performing Walk Forward testing on the OOS data.

- To be fair, the authors do caution against data mining fallacies in another chapter and hint at segregating In Sample and Out Of Sample data for backtesting and validation respectively, but this point is simply not made prominent enough for my liking.

- Lastly, I would have appreciated a list of resources curated by the authors where readers can obtain deeper discussion of the main points covered by the book.
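For reference, the two ratios differ only in their denominators. A sketch of the usual definitions, assuming N periodic returns r_i with mean \bar{r}, a risk-free rate r_f, and a target return \tau for the Sortino ratio (often set to r_f or zero); the population form of the deviations is shown:

$$
\text{Sharpe} = \frac{\bar{r} - r_f}{\sigma}, \qquad
\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N} \left(r_i - \bar{r}\right)^2}
$$

$$
\text{Sortino} = \frac{\bar{r} - \tau}{\sigma_d}, \qquad
\sigma_d = \sqrt{\frac{1}{N}\sum_{i=1}^{N} \min\left(0,\; r_i - \tau\right)^2}
$$

For daily returns, a common convention is to annualise either ratio by multiplying it by \sqrt{252} (trading days per year). Because \sigma_d ignores returns above the target, large upside moves lower the Sharpe ratio but leave the Sortino ratio untouched.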

The real gem for me, though, is the QuantSoftware Toolkit (QSTK). The QSTK lets anyone quickly get to grips with analysing and visualising portfolio performance (much like how Rails provides a quick start for web development). I especially like that it provides the components of the SPX adjusted for survivorship bias.

This library is written in Python and makes extensive use of Pandas, NumPy and Matplotlib. It was an enjoyable experience picking up Python and exploring the Pandas/NumPy/Matplotlib APIs. I can safely say that I've gained a newfound appreciation for how these powerful libraries can supplement my own quantitative analysis.

I would highly recommend this course to anyone who wants a good introduction to constructing and evaluating asset portfolios with quantitative methods.

I've decided to relearn the C language after years of using higher-level languages like Ruby and Java, because I view it as a necessary step towards a greater understanding of the computing stack, comprising software and processing hardware (CPU & GPU).

I would even argue that an expert understanding of C does more for writing high-performance software, by maximising the performance of code across the CPU cores of a single machine, than using ever more complex libraries/platforms that distribute work to multiple computers across network links. That being said, distributed computing remains very much applicable to tasks that cannot fit within the memory and storage constraints of a single computer.

I tried reading The C Programming Language, but found it hard to get into because the book contains a lot of preliminary material introducing programming to beginners. The tone of instruction was also rather dry, better suited to a reference manual.

Next I looked at Learn C The Hard Way by Zed Shaw. I like that it introduces Valgrind very early, as well as the practical bent of the exercises. However, I preferred something that provided a more polished learning experience in terms of overall structure, as well as a focus on the use of memory pointers.

Pointers on C by Kenneth A. Reek turned up in my ACCU.org searches for suitable books, and its many positive reviews were enough to justify buying a used copy from Amazon. Do yourself a favour and pick up a used copy instead of a new one, as new copies are absurdly pricey.

Pointers on C is structured into 18 chapters, plus an appendix containing answers to selected questions from the end of each chapter.

Chapter 1: A Quick Start

Starts off by giving a glimpse of the big (programming) picture by introducing a simple C program that reads text from the standard input, modifies it and writes it to the standard output. The chapter then explains in detail the lexical rules and constructs used in the source code.

I prefer this approach to starting with lexical and syntactic rules, as the reader gets to see and run a program that does something more useful than printing "Hello world".

Chapter 2: Basic Concepts

Briefly describes the translation environment, in which source code is converted into executable machine instructions, and the execution environment, in which the code actually runs. Gives an overview of how source files are preprocessed, compiled and linked.

Explains lexical rules pertaining to the C language, such as permitted characters, comment structure and identifiers.

Chapter 3: Data

Introduces the integer, floating point and enumerated types, along with details of their characteristics and properties. Clarifies the differences between block scope, file scope, prototype scope and function scope, leading into how the compiler resolves linkage for identifiers.

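Additionally, the chapter provides a lucid explanation of how a variable's value is stored according to its storage class. There are 3 possible places to store variables: ordinary (static) memory, the runtime stack, and hardware registers. Each storage class has different initialisation behaviour: static variables are initialised once, before the program begins executing, and default to zero, while automatic variables are initialised each time execution enters their block and contain garbage if no initialiser is given.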

The author clearly explains how a variable's default storage class follows from where it is declared, and how the static and register keywords change it.
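A minimal sketch of the difference, using two hypothetical counter functions: the only change between them is the static keyword on the block-scope variable, which moves it from the stack into static storage.

```c
/* Static storage: initialised once, before the program starts, and
 * keeps its value between calls. */
int next_static(void)
{
    static int counter = 0;
    return ++counter;
}

/* Automatic storage: a fresh variable is created and initialised on
 * the stack every time the function is entered. */
int next_automatic(void)
{
    int counter = 0;
    return ++counter;
}
```

Calling next_static() repeatedly yields 1, 2, 3, ..., while next_automatic() returns 1 every time.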

Chapter 4: Statements

Fairly standard treatment of the control flow and looping statements available in C.

Chapter 5: Operators and Expressions

Describes the various operators in C that support bitwise shifting, arithmetic, relational and logical operations. The author also provides good advice on working around C's lack of a boolean type (a gap C99 later filled with _Bool). The differences between L-values and R-values, and when one can stand in for the other, are clarified:

- An L-value is something that can appear on the left side of an equal sign; an R-value is something that can appear on the right side, e.g. in a = b + 25, a is an L-value and b + 25 is an R-value.

- An L-value denotes a place, while an R-value denotes a value.

- An L-value can be used anywhere an R-value is needed, but an R-value cannot be used where an L-value is needed.

There is also a very useful operator precedence table for reference.

Chapter 6: Pointers

A very well-written chapter going into detail about what C pointers are. This is the best explanation of memory pointers I have seen so far.

The author explains pointers, pointers to pointers, indirection and L-values superbly in a visual style that also incorporates pointer arithmetic and operator precedence. This is just the start of the focus on pointers, as the rest of the book makes extensive use of them.
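To illustrate pointer arithmetic, operator precedence and pointers to pointers together, here is a short sketch; the function names are hypothetical, made up for this example rather than taken from the book.

```c
#include <stddef.h>

/* Sum an int array by walking a pointer across it. `*p++` parses as
 * `*(p++)`: postfix ++ binds tighter than unary *, so the pointer (not
 * the pointed-to value) is advanced after it is dereferenced. */
int sum_ints(const int *p, size_t n)
{
    int total = 0;
    const int *end = p + n;   /* arithmetic is scaled by sizeof(int) */
    while (p < end)
        total += *p++;
    return total;
}

/* Pointer to pointer: the function changes which object the CALLER's
 * pointer refers to, by writing through one extra level of indirection. */
void point_at_larger(int **pp, int *a, int *b)
{
    *pp = (*a > *b) ? a : b;
}
```

For example, with `int x = 3, y = 7; int *p;`, calling `point_at_larger(&p, &x, &y)` leaves `p` pointing at `y`.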

Chapter 7: Functions

Explains the syntax of function prototypes and how to implement variable argument functions. There is a note that the compiler assumes a function returns int if its declaration doesn't specify a return type (the "implicit int" rule, which C99 later removed).

Highlights the pass-by-value semantics of function arguments. The interesting part is how this applies to arrays: the value of an array name is a pointer to its first element, so it is that pointer which gets copied, and the function can modify the caller's array elements through it. Structures, by contrast, are copied in their entirety when passed by value.
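A small sketch of that distinction, with hypothetical function names: an array argument can be modified by the callee because only the pointer was copied, whereas a struct argument is a full copy, so the callee needs an explicit pointer to change the caller's struct.

```c
/* Arrays: the parameter is really `int *a`; the pointer is copied, but
 * it still points into the caller's array. */
void zero_first(int a[])
{
    a[0] = 0;
}

struct point { int x, y; };

/* Structs: the whole structure is copied, so this change stays local. */
void move_right(struct point p)
{
    p.x += 1;
}

/* To modify the caller's struct, pass its address explicitly. */
void move_right_ptr(struct point *p)
{
    p->x += 1;
}
```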

Discusses the memory and computational trade-offs between recursive and iterative functions with examples that calculate factorials and Fibonacci numbers, making a compelling case for favouring iterative algorithms over recursive ones.
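As a rough illustration of the trade-off (my own sketch, not the book's code), compare a naive recursive Fibonacci with an iterative one. Both use the convention fib(0) = 0 and fib(1) = 1 and return the same values, but the recursive version makes an exponential number of calls.

```c
/* Naive recursion: each call spawns two more, so the number of calls
 * grows exponentially with n, and every call costs a stack frame. */
unsigned long fib_recursive(unsigned n)
{
    if (n < 2)
        return n;
    return fib_recursive(n - 1) + fib_recursive(n - 2);
}

/* Iteration: n - 1 additions and constant memory. */
unsigned long fib_iterative(unsigned n)
{
    unsigned long prev = 0, curr = 1;
    if (n == 0)
        return 0;
    for (unsigned i = 1; i < n; i++) {
        unsigned long next = prev + curr;
        prev = curr;
        curr = next;
    }
    return curr;
}
```

fib_iterative(20) returns 6765; fib_recursive(20) returns the same value, but only after more than twenty thousand calls.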

Chapter 8: Arrays

Expands on the previous chapter's point that the value of an array name is a pointer constant (the address of the array's first element). Highlights the differences between pointers and subscripts in the context of arrays.

Analyses the generated assembly code to show how pointers can be more efficient than subscripts, concluding that tuning source code for better assembly output should be taken only as an extreme measure. I'm inclined to agree.

Illustrates the storage order of arrays, including the memory layout of multi-dimensional arrays (C stores them in row-major order, with the last subscript varying fastest).
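The sketch below (my own, with hypothetical names and a fixed 2x3 array) sums the same matrix with subscripts and with pointers, and checks the row-major layout by computing each element's byte offset. With optimisation enabled, modern compilers often generate comparable code for both loops, which is a good argument for keeping the more readable version.

```c
#include <stddef.h>

#define ROWS 2
#define COLS 3

/* Subscripts: m[r][c] is defined as *(*(m + r) + c). */
int sum_subscripts(int m[ROWS][COLS])
{
    int total = 0;
    for (int r = 0; r < ROWS; r++)
        for (int c = 0; c < COLS; c++)
            total += m[r][c];
    return total;
}

/* Pointers: `row` points at a whole row (an array of COLS ints), and
 * `p` walks the ints within that row. */
int sum_pointers(int m[ROWS][COLS])
{
    int total = 0;
    for (int (*row)[COLS] = m; row < m + ROWS; row++)
        for (const int *p = *row; p < *row + COLS; p++)
            total += *p;
    return total;
}

/* Row-major storage: element [r][c] sits (r * COLS + c) ints from the
 * start of the array. */
size_t byte_offset(int m[ROWS][COLS], int r, int c)
{
    return (size_t)((char *)&m[r][c] - (char *)&m[0][0]);
}
```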

Chapter 9: Strings, Characters, and Bytes

Clarifies the definition of a C string (a sequence of zero or more characters followed by a NUL byte) and works through the usage of both restricted-length (e.g. strncpy) and unrestricted (e.g. strcpy) string functions.

Briefly describes character functions for classification and transformation, and demonstrates the functions for working with arbitrary sequences of bytes in memory (the mem* family).
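One pitfall worth showing with restricted-length functions: strncpy does not add a terminating NUL when the source is at least as long as the limit. A minimal sketch of a hypothetical helper that truncates and always terminates, assuming dst_size is greater than zero:

```c
#include <string.h>

/* strncpy copies at most dst_size characters. If src has dst_size or
 * more characters, no NUL is written, so terminate the buffer ourselves.
 * Requires dst_size > 0. */
void copy_truncated(char *dst, size_t dst_size, const char *src)
{
    strncpy(dst, src, dst_size);
    dst[dst_size - 1] = '\0';
}
```

With a 4-byte buffer, copying "hello" leaves "hel" (three characters plus the NUL), whereas a bare strncpy would leave the four bytes "hell" and no terminator.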

Chapter 10: Structures and Unions

Describes the syntax for declaring structures and accessing their members. Offers an approach to defining self-referential structures, and shows how to use incomplete declarations for mutually dependent structures.

Another easy-to-understand visual explanation of accessing structures and structure members through pointers, including how structures are stored in memory and the implications for architectures that impose boundary alignment requirements (e.g. machines on which 4-byte integers must begin at addresses evenly divisible by 4).
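To see alignment padding on your own machine, a sketch like the following compares two orderings of the same members using sizeof and offsetof. The struct names and the PADDING macro are hypothetical. The exact sizes are implementation-defined, but on typical platforms where int is 4 bytes with 4-byte alignment, struct loose occupies 12 bytes and struct tight 8, because putting the most strictly aligned member first leaves less room for padding.

```c
#include <stddef.h>

/* Same members, two orders. */
struct loose { char a; int b; char c; };   /* padding after a and after c */
struct tight { int b; char a; char c; };   /* padding only at the end */

/* Bytes of padding the compiler inserted into a struct holding one int
 * and two chars. */
#define PADDING(type) (sizeof(type) - sizeof(int) - 2 * sizeof(char))
```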

Discusses bit fields, unions and variant records, including where each applies and the trade-offs in terms of maintenance and memory usage.

Chapter 11: Dynamic Memory Allocation

Explains how programs can obtain and manipulate chunks of memory with malloc, free, calloc and realloc. Cautions against common dynamic memory allocation errors and memory leaks.
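A common dynamic memory error is losing the only pointer to a block when realloc fails. The following sketch of a growable array (the struct int_vec type and its functions are hypothetical) keeps realloc's result in a temporary so the original block is neither leaked nor lost, and pairs every allocation with one free.

```c
#include <stdlib.h>

/* A growable int array; start from {NULL, 0, 0}. */
struct int_vec {
    int   *data;
    size_t len, cap;
};

/* Append one value, doubling capacity when full. realloc(NULL, n)
 * behaves like malloc(n). On failure realloc returns NULL but leaves
 * the old block allocated, so assigning straight to v->data would leak
 * it. Returns 0 on success, -1 if memory is exhausted. */
int vec_push(struct int_vec *v, int value)
{
    if (v->len == v->cap) {
        size_t new_cap = v->cap ? v->cap * 2 : 4;
        int *tmp = realloc(v->data, new_cap * sizeof *tmp);
        if (tmp == NULL)
            return -1;          /* v->data is still valid */
        v->data = tmp;
        v->cap = new_cap;
    }
    v->data[v->len++] = value;
    return 0;
}

/* Release the block and reset the vector so it can be reused. */
void vec_free(struct int_vec *v)
{
    free(v->data);
    v->data = NULL;
    v->len = v->cap = 0;
}
```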

Chapter 12: Using Structures and Pointers

Here the author walks the reader through the design and implementation of a linked list. He implements the abstract data type as a singly linked list first, then modifies it into a doubly linked list.

As is already evident from the book so far, the author is fantastic at explaining his thoughts in a straightforward manner during the design and implementation process.
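For a flavour of the design discussion, here is my own sketch of inserting into an ascending singly linked list (names are hypothetical; this is not the book's code). Iterating with a pointer to the link field, rather than to the node, removes the special case for inserting at the head of the list.

```c
#include <stdlib.h>

typedef struct node {
    struct node *link;
    int          value;
} Node;

/* Insert new_value into an ascending list. linkp starts out pointing at
 * the caller's root pointer and then at each node's link field, so the
 * head is updated by the same `*linkp = new_node` as any other position.
 * Returns 0 on success, -1 if out of memory. */
int sorted_insert(Node **linkp, int new_value)
{
    Node *current;
    while ((current = *linkp) != NULL && current->value < new_value)
        linkp = &current->link;

    Node *new_node = malloc(sizeof *new_node);
    if (new_node == NULL)
        return -1;
    new_node->value = new_value;
    new_node->link = current;
    *linkp = new_node;
    return 0;
}

/* Free every node in the list. */
void list_free(Node *root)
{
    while (root != NULL) {
        Node *next = root->link;
        free(root);
        root = next;
    }
}
```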

Chapter 13: Advanced Pointer Topics

Introduces the concept of multiple levels of indirection and explains advanced pointer declarations in detail, in gradually increasing levels of complexity. Mentions cdecl, a *nix utility for converting between C declarations and English. Try this on for size: int (* (*f)())[10], which declares f as a pointer to a function returning a pointer to an array of 10 ints.

Explains pointers to functions and how they can be used for callback functions and jump tables, and covers processing command line arguments, with examples.
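A jump table is simply an array of function pointers indexed by an operation code. The sketch below uses hypothetical names; the bounds check before the indirect call matters, because an out-of-range index would jump to an arbitrary address.

```c
static double add(double a, double b)    { return a + b; }
static double sub(double a, double b)    { return a - b; }
static double mul(double a, double b)    { return a * b; }
static double divide(double a, double b) { return a / b; }

enum op { OP_ADD, OP_SUB, OP_MUL, OP_DIV, OP_COUNT };

/* ops is an array of OP_COUNT const pointers to functions taking two
 * doubles and returning a double; entries follow the enum order. */
static double (*const ops[OP_COUNT])(double, double) = {
    add, sub, mul, divide
};

/* Dispatch through the table instead of a switch statement.
 * Returns 0 on success, -1 for an unknown operation. */
int apply(enum op oper, double a, double b, double *result)
{
    if ((unsigned)oper >= OP_COUNT)
        return -1;
    *result = ops[oper](a, b);
    return 0;
}
```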

The author includes an interesting way to perform pointer arithmetic and indirection on string literals. Even better, there is an example code snippet that uses this approach to simplify the logic of converting integer values to digit characters, which opened my mind, to say the least.
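A sketch of the string-literal trick, with hypothetical names: because a string literal's value is a pointer to its first character, it can be subscripted directly, so a table of digit characters replaces the if/else that would otherwise map 10 to 15 onto 'A' to 'F'. The buffer sizing assumes 8-bit bytes.

```c
/* Index the literal directly: "0123456789ABCDEF"[11] is 'B'. */
char hex_digit(unsigned value)
{
    return "0123456789ABCDEF"[value % 16];
}

/* Write value in hexadecimal into buf, most significant digit first,
 * and return buf. Assuming 8-bit bytes, buf must hold at least
 * 2 * sizeof(unsigned) + 1 chars. */
char *to_hex(unsigned value, char *buf)
{
    char tmp[2 * sizeof(unsigned)];
    int n = 0;
    do {                        /* digits come out least significant first */
        tmp[n++] = hex_digit(value);
        value /= 16;
    } while (value != 0);
    int i = 0;
    while (n > 0)               /* reverse them into buf */
        buf[i++] = tmp[--n];
    buf[i] = '\0';
    return buf;
}
```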

Chapter 14: The Preprocessor

Describes the predefined symbols and directives provided by the preprocessor, as well as how to define macros. The author helpfully points out possible issues with operator precedence and evaluation that arise because the preprocessor merely substitutes the macro's text at each point of use.

Hint:

After substitution, the operators inside a macro's expansion can combine with the surrounding expression (or with the operators in its arguments) differently than intended, changing the order of evaluation.
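The classic demonstration, as a sketch with hypothetical macro names: without parentheses, the substituted text binds to the surrounding operators, and even a fully parenthesised macro still evaluates an argument with side effects more than once.

```c
/* Unparenthesised: SQUARE_BAD(a + 1) expands to a + 1 * a + 1. */
#define SQUARE_BAD(x) x * x

/* Parenthesise every use of the argument and the whole body. */
#define SQUARE(x) ((x) * (x))

/* Parentheses cannot fix side effects: SQUARE(i++) expands to
 * ((i++) * (i++)), modifying i twice without a sequence point, which is
 * undefined behaviour. A function evaluates its argument exactly once. */
static inline int square(int x) { return x * x; }
```

With a = 3, SQUARE_BAD(a + 1) yields 7 rather than 16, and 10 / SQUARE_BAD(5) expands to 10 / 5 * 5, giving 10 rather than 0.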

Chapter 15: Input/Output Functions

In-depth treatment of working with text and binary streams using the stdio.h library functions. Introduces the FILE structure, which represents an input/output stream being used by the program.

The chapter explains how to work with character, line and binary I/O, and provides an exhaustive table of format codes used with the printf and scanf families of functions.

Chapter 16: Standard Library

This chapter contains explanations and examples of the following categories of functions from the standard library:

- Arithmetic

- Random Numbers

- Trigonometry

- Hyperbolic

- Logarithm and Exponent

- Floating Point Representation

- Power

- Floor, Ceiling, Absolute Value and Remainder

- String Conversion

- Processor Time

- Time of Day

- Date and Time Conversions

- Nonlocal Jumps

- Processing Signals

- Printing Variable Argument Lists

- Terminating Execution

- Assertions

- The Environment

- Executing System Commands

- Sorting and Searching

- Numeric and Monetary Formatting

- Strings and Locales

The more notable parts of this chapter were:

Nonlocal Jumps

The functions in setjmp.h provide a mechanism similar to goto that is not limited to a single function, and they are commonly used with deeply nested chains of function calls. If a lower-level function detects an error, it can return immediately to the top-level function without having to pass an error flag back through each intermediate function in the chain.
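A minimal sketch of that pattern, assuming a hypothetical digit parser: the error is detected two calls deep, and longjmp returns straight to the setjmp call at the top level without any intermediate error checks. Local variables of the function that calls setjmp which are modified after the call would need to be declared volatile; this sketch sidesteps that by having no such locals.

```c
#include <setjmp.h>

static jmp_buf recovery;

/* Deepest level: on bad input, jump straight back to the setjmp call in
 * parse_all(), skipping every intermediate return. */
static int parse_digit(char c)
{
    if (c < '0' || c > '9')
        longjmp(recovery, 1);
    return c - '0';
}

/* Intermediate level: no error checks needed here. */
static int parse_number(const char *s)
{
    int value = 0;
    while (*s != '\0')
        value = value * 10 + parse_digit(*s++);
    return value;
}

/* Top level: setjmp returns 0 on the direct call and the value passed
 * to longjmp (here 1) when control comes back via a jump.
 * Returns the parsed number, or -1 on error. */
int parse_all(const char *s)
{
    if (setjmp(recovery) != 0)
        return -1;
    return parse_number(s);
}
```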

Processing Signals

What a signal handler can safely do is limited. If the signal is asynchronous (triggered from outside the program), the handler should not call any library function other than signal, because the results are undefined in that context.

Furthermore, the handler may not access static data except to assign a value to a static variable of type volatile sig_atomic_t. To be truly safe, about all a signal handler can do is set one of these variables and return. The rest of the program must periodically examine the variable to see if a signal has occurred.
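A sketch of the flag-setting pattern described above, with hypothetical function names. raise(SIGINT) is a convenient way to try it out, although raise delivers the signal synchronously; the handler itself follows the stricter asynchronous rules, since it only assigns to a volatile sig_atomic_t and returns.

```c
#include <signal.h>

/* The kind of static object a handler may portably assign to. */
static volatile sig_atomic_t got_signal = 0;

/* The handler just records that the signal arrived. */
static void on_signal(int sig)
{
    (void)sig;
    got_signal = 1;
}

/* Install the handler for sig; returns 0 on success, -1 on failure. */
int install_handler(int sig)
{
    return signal(sig, on_signal) == SIG_ERR ? -1 : 0;
}

/* Polled by the main program at convenient points, so the real work
 * happens outside the handler. Returns 1 if a signal arrived since the
 * last check, and clears the flag. */
int signal_pending(void)
{
    if (got_signal) {
        got_signal = 0;
        return 1;
    }
    return 0;
}
```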

Chapter 17: Classic Abstract Data Types

This chapter is similar to chapter 12, except the focus is on designing and implementing the stack, queue and binary tree abstract data types. The author briefly introduces each data structure and then moves on to the design and implementation of the ADT.

Chapter 18: Runtime Environment

The final chapter examines the assembly language code produced by the author's particular compiler on his particular computer in order to learn several interesting things about the runtime environment. The author compiles a C source file to assembly code to determine:

- how a static variable is initialised,

- the maximum number of register variables available for use by programs,

- the maximum length of external identifier names,

- the function calling/returning protocol (part of the Application Binary Interface),

- the stack frame layout

This chapter is highly technical and will take multiple readings to digest and to apply to other architectures and compilers. That said, it may be easier to simply look up the ABI documentation for your particular CPU and platform online.

I enjoyed reading this book and working through the exercises after each chapter. The side notes the author included for comparisons between K&R C and ANSI C were somewhat interesting from a historical standpoint. Much more useful are the cautions and tips throughout the book that provide insights into the use of C, that would otherwise be discovered only through hard-earned experience.